First Steps Guide

1. First Boot

1.1. Running qlustar-initial-config

After the server has booted the newly installed Qlustar OS, log in as root and start the post-install configuration process by running the command

0 root@cl-head ~ # /usr/sbin/qlustar-initial-config

This will first thoroughly check your network connectivity and then complete the installation by executing the remaining configuration steps as detailed below. During the package update process, you might be asked whether to keep locally modified configuration files. In this case always choose the option

keep the local version currently installed.

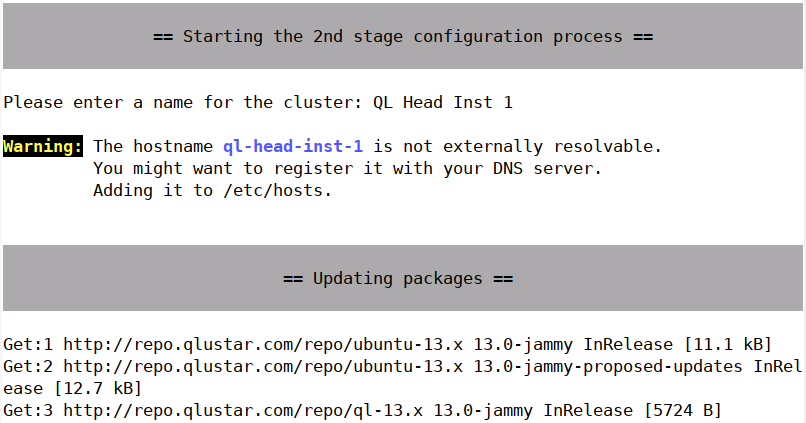

Remaining configuration steps run-through

If your chosen hostname can’t be resolved via DNS, you will see a non-fatal error message reminding you that the hostname should be registered in some (external) name service (typically DNS).

Cluster name

First, you will be asked for the name of the new cluster. This can be any string and is used in places such as the Slurm and Ganglia configuration.

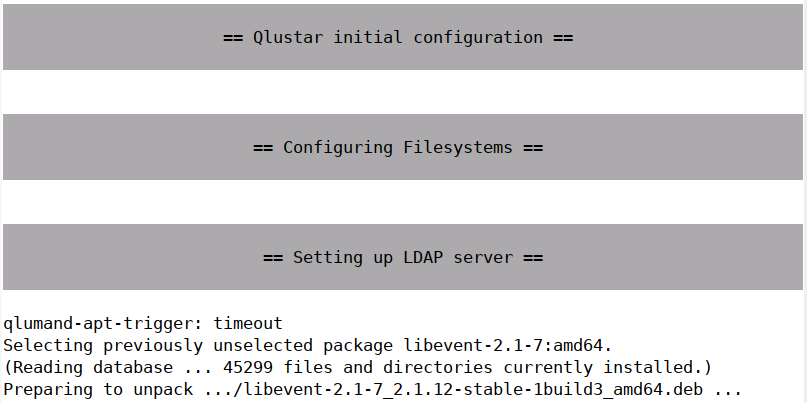

LDAP Setup

Next is the setup of the LDAP infrastructure. Just enter the LDAP admin password when asked for it.


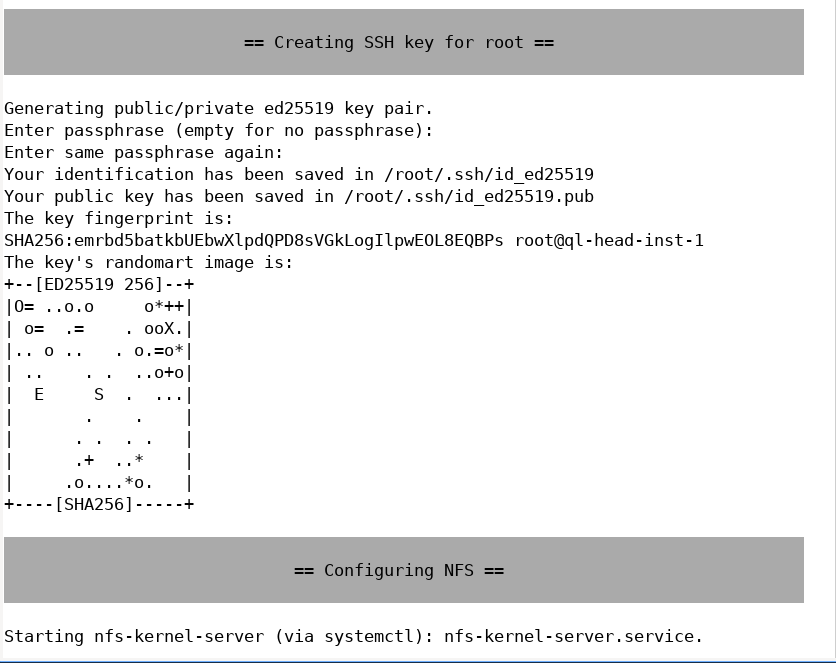

Configuring ssh

An ssh key for the root user is generated next. You can enter an optional pass-phrase for it. This key will be used to enable login by root on the head-node to any net-boot node of the cluster without specifying a password.

Be aware that having a non-empty pass-phrase means that you will have to specify it any time you try to ssh to another host in the cluster. If you don’t want that, work without a pass-phrase.

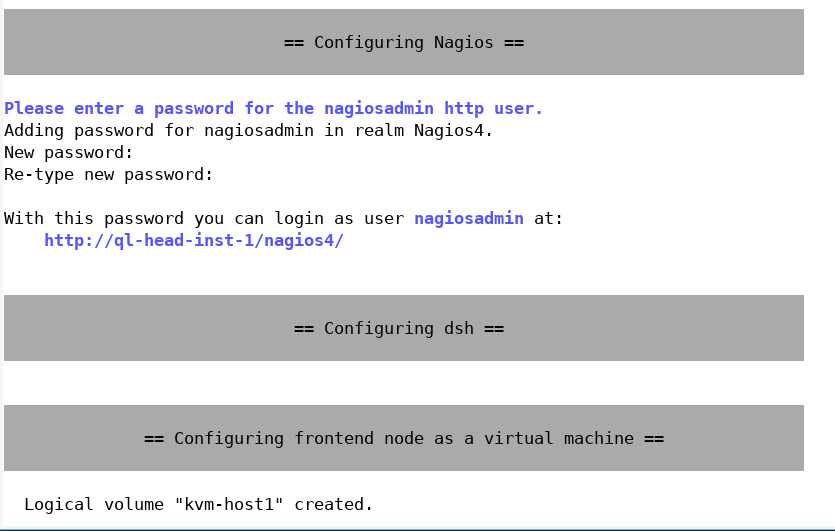

Configuring Nagios

The configuration of Nagios requires you to choose a password for the Nagios admin account. Please type in the password twice.


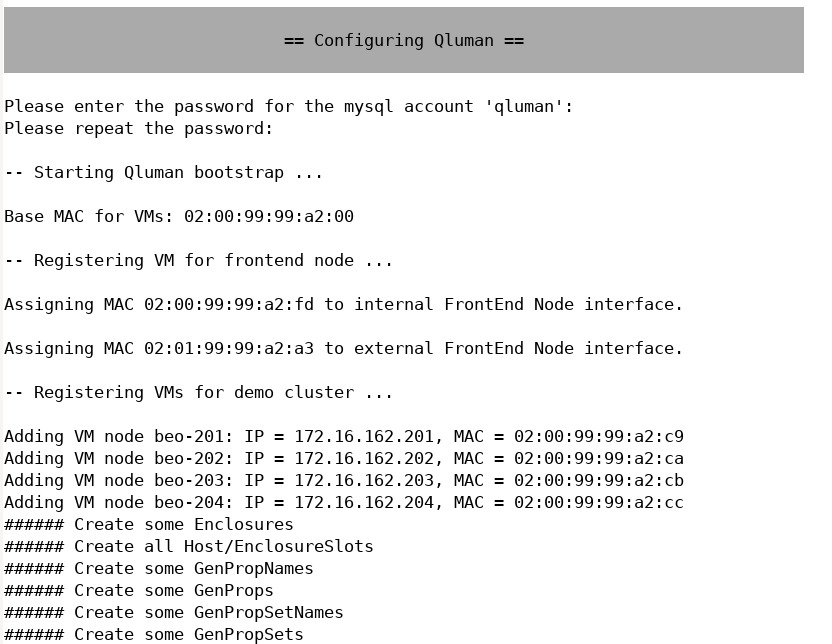

Configuring QluMan

QluMan, the Qlustar management framework, requires a MySQL (MariaDB) database. You will be asked for the password of the QluMan DB user next. After entering it, the QluMan database and configuration settings will be initialized. This can take a while, since a number of OS images and chroots (see Adding Software) will be generated during this step.

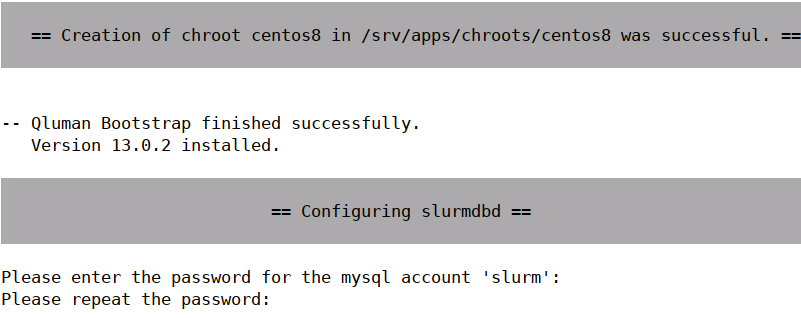

Configuring Slurm

If HPC was selected as a package bundle during installation, Slurm is installed as the cluster resource manager. Its configuration requires the generation of a munge key and the specification of a password for the Slurm MySQL account. Enter the chosen password twice when asked for it.

The Slurm database daemon (slurmdbd) is also configured by this process. Hence, you will be ready to take full advantage of the accounting features of Slurm.

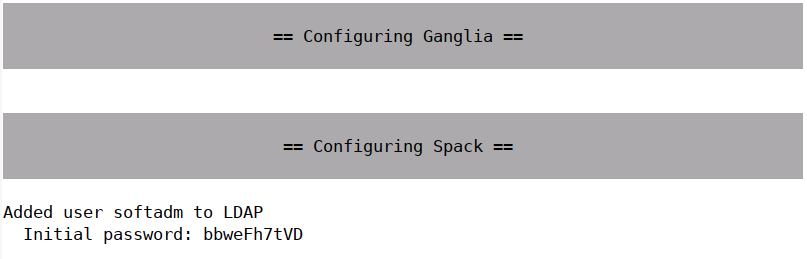

Setting up requirements for Qlustar spack

At this stage, the directory /apps/local/spack is created with customized access permissions to be used as the base path for software packages created via the HPC software package manager spack. A special user and group softadm are added for the management of this cluster-central spack package repository. Any additional user who is a member of the group softadm will have permissions to manage the spack repo as well. The idea is to allow sharing the management of a central HPC software store between a group of administrators.

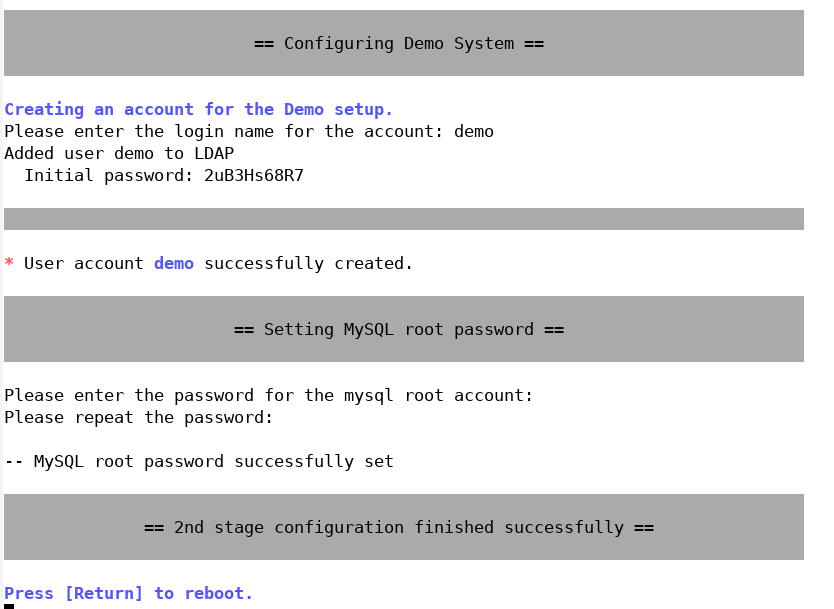

Configuring the virtual Demo Cluster

If you have chosen to set up some virtual demo nodes during installation, you will be asked for the user name of a test account that can be used to explore the cluster. Remember its password as printed on the screen.

1.2. Final Reboot

Once all the previous steps are complete, reboot again by pressing Enter. After the head-node is up and running again, test its network connectivity by pinging its public IP address (hostname). Do the same for the virtual front-end node, if you have chosen to configure one. It should boot automatically once the head-node is up and running. You can try to log in to it using ssh.

A test mail should have been sent to the e-mail address(es) you specified during the installation. If you didn’t receive one, review your settings in /etc/aliases and/or /etc/postfix/main.cf. In case some of them are wrong, you can execute

0 root@cl-head ~ # dpkg-reconfigure postfix

to modify them.
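Before reconfiguring, it can help to look at what is currently set. The following is a minimal sketch and not part of the Qlustar tooling: root_alias is a hypothetical helper that prints the recipient(s) configured for root in an aliases file, assuming the usual "name: target" syntax. The commented commands use postconf and sendmail, which are provided by Postfix.

```shell
# Hypothetical helper (not shipped with Qlustar): print the target(s) that
# mail for root is forwarded to, assuming "name: target" lines.
# Usage: root_alias [ALIASES_FILE]   (defaults to /etc/aliases)
root_alias() {
    awk -F: '$1 == "root" { sub(/^[ \t]+/, "", $2); print $2 }' "${1:-/etc/aliases}"
}

# Typical use on the head-node:
#   root_alias        # recipients of root's mail
#   postconf -n       # parameters set explicitly in main.cf
#   printf 'Subject: test\n\ntest\n' | sendmail root   # send another test mail
```

If you edit /etc/aliases by hand, run newaliases afterwards so that Postfix picks up the change.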

1.3. Starting the virtual Demo Cluster

If you have chosen to configure a virtual demo-cluster, you can start it by executing the command:

0 root@cl-head ~ # demo-system -a start

and to stop it

0 root@cl-head ~ # demo-system -a stop

These commands use the configuration file /etc/qlustar/vm-configs/demo.conf. If you find that the (automatically calculated) amount of RAM per VM is not right, you can change the variable CN_MEM to some other value in that file. The consoles of the virtual nodes (and also of the virtual front-end node if you chose to set one up) are accessible in a tmux session. Type

0 root@cl-head ~ # console-fe-vm

to attach to the console session of the virtual FE node and

0 root@cl-head ~ # console-demo-vms

to attach to the console sessions of the virtual demo cluster nodes. Note that the tmux command character is Ctrl-t. To detach from the tmux session, type Ctrl-t d. To switch to the next/previous window, type Ctrl-t n / Ctrl-t p. More details on the usage of tmux are available in the corresponding man page. To check whether all nodes are up and running, type

0 root@cl-head ~ # dsh -a uptime

dsh or pdsh can be used to execute arbitrary commands on groups of nodes. Check their man pages and the corresponding section in the QluMan guide for further information.

|

The virtual FE node is managed by a systemd service unit and can be started with

0 root@cl-head ~ # service qlustar-fe-node-vm start |

1.4. Installed Services

At this stage, the following services are configured and running on your head-node:

- Nagios4 (monitoring/alerts) with its web interface at http://<headnode>/nagios4/ (<headnode> is the hostname of the head-node you have chosen during installation). You may log in as user nagiosadmin with the password you specified previously.

- Ganglia (monitoring) at http://<headnode>/ganglia/

- DHCP/TFTP boot services and DNS name service provided by dnsmasq.

- NTP time server as client and server

- NFS server with exports defined in /etc/exports

- Depending on your choice of software packages: LDAP (slapd and sssd), Slurm (DB + control daemon), Corosync (HA), Munge (authentication for Slurm), BeeGFS management daemon. Note that among the latter, only Slurm, Munge and BeeGFS are configured automatically during installation. Corosync requires a manual configuration.

- Mail service Postfix

- MariaDB server (MySQL fork)

- QluMan server components (Qlustar Management)

|

You shouldn’t install the Ubuntu MySQL server packages on the head-node, since QluMan requires MariaDB and packages of the latter conflict with the MySQL packages. MariaDB is a complete and compatible substitute for MySQL. |

1.5. Adding Software

1.5.1. Background

As explained elsewhere, the RAM-based root file-system of a Qlustar compute/storage node is typically supplemented by a global NFS-exported chroot to allow access to software not already contained in the boot images themselves. During installation, one chroot per selected edge platform was automatically created. The chroots are located at /srv/apps/chroots/<chroot name>, where <chroot name> would be e.g. jammy or centos8. Each of them contains a full-featured installation of the corresponding Qlustar edge platform. To change into a chroot, convenience bash shell aliases of the form chroot-<chroot name> are defined for the root user on the head-node. You may use them as follows:

1.5.2. Ubuntu/Debian

Example for Ubuntu/Jammy, if it was selected at install

0 root@cl-head ~ # chroot-jammy

Once you’re inside a chroot, you can use the standard Debian/Ubuntu tools to control its software packages, e.g.

(jammy) 0 root@cl-head ~ # apt update
(jammy) 0 root@cl-head ~ # apt dist-upgrade
(jammy) 0 root@cl-head ~ # apt install package
(jammy) 0 root@cl-head ~ # exit

1.5.3. AlmaLinux

Example for AlmaLinux 8 (chroot is named centos8 by the installer), if it was selected at install

0 root@cl-head ~ # chroot-centos8

Once you’re inside a chroot, you can use the standard CentOS tools to control its software packages, e.g.

(centos8) 0 root@cl-head ~ # yum update
(centos8) 0 root@cl-head ~ # yum install package
(centos8) 0 root@cl-head ~ # exit

|

The Qlustar AlmaLinux edge platform integrates three package sources via the corresponding yum repository config files below

All three repositories are enabled per default, so you can easily install packages from any of them. |

|

We dropped the OpenHPC repository in Qlustar 13. In previous Qlustar versions, OpenHPC packages were only used for end-user related software like OpenMPI and scientific libraries. Starting from Qlustar 13, spack is used as a package manager for this purpose (see below). The official OpenHPC repository may still be added manually to Qlustar AlmaLinux 8 (centos8) chroots if desired. |

1.5.4. Comments

The nice thing about this overlay mechanism is that software from packages installed in a particular chroot will be available instantaneously on all compute/storage nodes that are configured to use that chroot.

|

Apart from the chroot, there is usually no need to install additional packages on the head-node itself, unless you want to add functionality that runs only there. Be aware that software packages installed directly on the head-node will not be visible cluster-wide. |

1.5.5. Spack Package Manager

Qlustar 13 has added the integration of the Spack Package Manager to support an optimized HPC stack with a vast number of pre-configured applications and libraries. The local spack package store on Qlustar is located at the fixed path /apps/local/spack which is an NFS exported directory. Per default it is mounted on all available nodes of the cluster. For the management of this software stack, the special user and group softadm have been created at installation time. The following description assumes that you have selected the creation of an FE node during install.

|

If your head-node has less than 16GB of RAM, it is advisable to stop the virtual demo cluster before starting the installation process below. This process consumes a lot of memory on the virtual FE node and might otherwise lead to a crash of the demo node VMs or the FE node VM itself. |

To initialize the stack, log in as user softadm on the FE node using ssh. Once logged in, execute the following:

0 cl-fe:~ $ spack compiler find
0 cl-fe:~ $ spack install gcc@14.2.0 target=x86_64
0 cl-fe:~ $ spack compiler add $(spack location -i gcc@14.2.0)

This will compile the newest gcc compiler currently supported by spack with all its dependencies and can take more than 1 hour depending on your hardware. Now proceed to compile the newest OpenMPI (currently version 5.x.y) with its dependencies and finally the popular HPL Linpack benchmark.

0 cl-fe:~ $ spack install openmpi %gcc@14.2.0
0 cl-fe:~ $ spack install openblas threads=openmp %gcc@14.2.0
0 cl-fe:~ $ spack install hpl ^openmpi@5 %gcc@14.2.0

You should now have OpenMPI installed and an MPI version of HPL built with it. These will be used later to compile and run simple MPI programs as well as the HPL benchmark by submitting jobs to the installed workload manager Slurm.

|

We specified the explicit dependency of the hpl package on OpenMPI version 5 here, because per default, it requests the most recent OpenMPI 4 version. |

1.6. Running the Cluster Manager QluMan

1.6.1. Generating a one time token for the first admin login

The Qlustar management GUI qluman-qt uses public/private keys for both encryption and authentication of its connection with the QluMan server processes. For this to work, there needs to be an exchange of public keys between the GUI client and the QluMan server. Later, adding additional QluMan admin users and generating the required one-time login token can be done via the GUI. But for the first login of the default admin user, it must be accomplished using a root shell on the head-node as follows:

0 root@cl-head ~ # qluman-cli --gencert
Generating one-time login token for user 'admin':
Cluster = Qlustar Cluster
Pubkey = b')V}bnnb*:/lb$20mb)EX=+T0V?Q}4XnO]JC]}hNM'
beosrv-c: cl-head:6001 192.168.55.162
Enter new pin for one-time token:
Server infos and one-time login token for user 'admin':
---[ CUT FROM HERE ]---
0000020fc2MAAVvrsgiFAtCsPQ-vwdyGXvcAOSyei6AsDCOUhf7wXvOq7ALmZVn2qruinS
ZJJohmiRwABN1zfPgcekPettiT4hOlT0Dm3ffbzB88v3VcHpHtKzRVCTMfB9YtbopIAeli
FO82sJOZhhY_Cq85lIKfEVyEuUKzu6sbkVbCY-nrUAtL1f_xhsjwxJR2kY39l8qVo-KO6M
zy19kCmE77O908PWF4Qd-bw2uAcB7Prx0VXFcJ9_PoQS1MWmUUm-Eq8JnfaylrQ-eWsLHW
OAkLeNu0nyWrCEwhjp-Xd67erT581G_6h8xh0_Trj9NyOwxK71voWcpVjGfrT9JYHWOUly
tu2xS0n5-pEeqEm_rlOOazauxznVHF_HlRrReGN8LEzY9ue5ogyDXEfnrR4sZce0c1YHX9
OJyPS0I1C8AZeQsMM5R5s-yoDTRq8mZQ_p-PjaZrLWmvvueaTWTKJygUf7OQJca5TV--QS
3P4avk7zGie7mcVGXhJKFD8cNSJCnv5Qbitw==
---[ TO HERE ]---

The token can also be saved directly to a file using the -o <filename> option. The user the token is for can be specified by the -u <username> option like this:

0 root@cl-head ~ # qluman-cli --gencert -u admin -o ~/token
Generating one-time login token for user 'admin':
Cluster = Qlustar Cluster
Pubkey = b')V}bnnb*:/lb$20mb)EX=+T0V?Q}4XnO]JC]}hNM'
beosrv-c: cl-head:6001 192.168.55.162
Enter new pin for one-time token:
Server infos and one-time login token for user 'admin' saved as '/root/token'

The server infos and one-time login token are protected by the pin you just entered. This is important when the data is sent via unencrypted channels (e.g. email or chat programs) to users or when it is stored on a shared filesystem like NFS. The pin does not need to be a strong password. It is only used to make it non-trivial to use an intercepted token.

|

The token can only be used once. So once you use it yourself, it becomes useless to anybody else. On the other hand, if somebody intercepts the token, guesses the pin and uses it for a connection, it will no longer work for you. If that happened, you’d know something went wrong. |

1.6.2. Starting the QluMan GUI on a workstation from a container

Singularity container images with the QluMan GUI are available on our Download page. They are the recommended way to run qluman-qt and make it easy to start the GUI as a non-root user on any machine with Singularity (or Apptainer) installed (the minimum Singularity version is 3.2 for QluMan 13 containers). Just download the desired version of the image, check its sha256sum, make it executable and execute it (assuming you saved it as $HOME/qluman-13.7.2-singularity.sqsh):

0 user@workstation ~ $ sha256sum $HOME/qluman-13.7.2-singularity.sqsh
0 user@workstation ~ $ chmod 755 $HOME/qluman-13.7.2-singularity.sqsh
0 user@workstation ~ $ $HOME/qluman-13.7.2-singularity.sqsh

This should bring up the GUI. Using the one-time token generated as explained above, you will now be able to add the cluster to the list of available connections.
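The checksum step above only prints a digest, which then has to be compared by eye. As a minimal sketch, the comparison can be left to sha256sum itself. verify_sha256 is a hypothetical helper name, and the sketch assumes that you have obtained the expected digest from the place where you downloaded the image.

```shell
# Hypothetical helper: succeed only if FILE has the EXPECTED SHA-256 digest.
# Usage: verify_sha256 FILE EXPECTED_HEX_DIGEST
verify_sha256() {
    # sha256sum -c reads "digest  filename" lines; --status suppresses all
    # output and reports the result through the exit code only.
    printf '%s  %s\n' "$2" "$1" | sha256sum -c --status -
}

# Typical use (the digest is a placeholder for the published value):
#   verify_sha256 "$HOME/qluman-13.7.2-singularity.sqsh" "<published digest>" \
#       && echo "checksum OK" || echo "checksum MISMATCH"
```

Running the image only after the check has succeeded guards against a truncated or altered download.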

The changelog of the QluMan version inside the container may be inspected by executing the following command:

0 user@workstation ~ $ singularity exec $HOME/qluman-13.7.2-singularity.sqsh \
    zless /usr/share/doc/qluman-qt-13/changelog.gz

|

The version of the QluMan packages/containers on the workstation should be the same as on the head-node(s) to ensure correct operation. Running a newer GUI version with an older server often works as well, but there is no guarantee or support for such combinations. |

1.6.3. Running the QluMan GUI

Per default, the Qlustar management GUI qluman-qt is not installed on any node of the cluster. This is because the installation on the head-node (or a chroot) pulls and installs a lot of other packages that qluman-qt depends on, which will slow down updates. If you have the possibility, run qluman-qt on your workstation (see above) and work from there. If you really must have it available on the head-node, you may install it there like any other package:

0 root@cl-head ~ # apt install qluman-qt

Then you can launch it remotely on the head-node via ssh (with X11 forwarding enabled by the -X option) as follows:

0 user@workstation ~ $ ssh -X root@servername qluman-qt

1.7. Creating Users

Adding users and groups can be conveniently done using the QluMan GUI. Please consult the corresponding section in the QluMan guide.

1.8. Compiling an MPI program

MPI (Message Passing Interface) is the de facto standard for distributed parallel programming on Linux clusters. The suggested MPI variant in Qlustar is OpenMPI which should be installed if you successfully followed the steps above.

You can test the correct installation of MPI with two small hello world test programs (one in C, the other one in Fortran 90) as the test user you created earlier. Log in on the front-end node as this test user and first load the OpenMPI spack package just compiled:

0 testuser@cl-front ~ $ eval $(spack load --sh openmpi arch=$(spack arch))

Then you can compile as follows

0 testuser@cl-front ~ $ mpicc -o hello-world-c hello-world.c
0 testuser@cl-front ~ $ mpif90 -o hello-world-f hello-world.f90

After this you should have created two executables. Check it with

0 testuser@cl-front ~ $ ls -l hello-world-?

Now you’re prepared to test the queuing system with these two programs.
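This guide does not list the source of hello-world.c. As a minimal sketch, a program that prints one line per MPI process in the form "Hello world from process <rank> of <size>" might look as follows. The file actually provided with Qlustar may differ. The file name hello-world-sketch.c is chosen here only to avoid overwriting an existing hello-world.c.

```shell
# Write a minimal MPI hello world program to hello-world-sketch.c.
cat > hello-world-sketch.c <<'EOF'
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv)
{
    int rank, size;

    MPI_Init(&argc, &argv);                 /* start the MPI runtime */
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);   /* id of this process */
    MPI_Comm_size(MPI_COMM_WORLD, &size);   /* total number of processes */
    printf("Hello world from process %d of %d\n", rank, size);
    MPI_Finalize();                         /* shut down the MPI runtime */
    return 0;
}
EOF

# Compile it like the examples above (requires the loaded OpenMPI package):
#   mpicc -o hello-world-c hello-world-sketch.c
```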

1.9. Running an MPI Job

Before running a multi-node MPI job via Slurm on the demo nodes, the Slurm config might need to be updated with the exact RAM values available to these nodes for Slurm jobs. This is because Qlustar nodes report their available RAM during the boot process to the qlumand on the head-node, and these values can be different from the ones originally assumed by the installer. To accomplish this, write the Slurm config as explained here if necessary.

It is also possible that the demo nodes will still be in the Slurm state DRAINED after this. To check and possibly change their state, use the Slurm Node State Management dialog of the QluMan GUI. If the nodes are indeed in state DRAINED, first restart slurmd on the nodes and then undrain them using this dialog.
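The node states can also be inspected from a shell with Slurm's own tools. The following is a sketch rather than the procedure recommended by this guide: drained_nodes is a hypothetical helper that filters "name state" lines, while sinfo and scontrol are the standard Slurm commands. The partition name demo and the node name beo-201 are taken from the examples in this guide.

```shell
# Hypothetical helper: read "nodename state" lines on stdin and print the
# names of all nodes whose state starts with "drain" (drained, draining, ...).
drained_nodes() {
    awk 'tolower($2) ~ /^drain/ { print $1 }'
}

# Typical use on the head-node (requires Slurm):
#   sinfo -N -h -p demo -o '%N %T' | drained_nodes
#   scontrol update NodeName=beo-201 State=RESUME   # undrain a single node
```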

Once all this is done and the nodes are in state IDLE you can proceed. Still being logged in as the test user and assuming at least two demo nodes are started, you can submit the two hello world programs created previously to Slurm as follows:

0 testuser@cl-front ~ $ salloc -N 2 --ntasks-per-node=2 --mem=100 -p demo mpirun hello-world-c

This will run the job interactively on 2 nodes with 2 processes each (total of 4 processes). You should obtain an output like this:

salloc: Granted job allocation 1
salloc: Nodes beo-[201-202] are ready for job
Hello world from process 0 of 4
Hello world from process 1 of 4
Hello world from process 2 of 4
Hello world from process 3 of 4
salloc: Relinquishing job allocation 1

|

We have launched the MPI job with OpenMPI’s mpirun, rather than with Slurm’s srun. The latter should be used with OpenMPI versions < 5. Starting with version 5, using OpenMPI’s mpirun is the recommended way of starting MPI programs compiled with OpenMPI. |

Similarly, the F90 version can be submitted as a batch job using the script hello-world-f90-slurm.sh (to check the output, execute cat slurm-<job#>.out after the job has finished):

0 testuser@cl-front ~ $ sbatch -N 2 --ntasks-per-node=2 --mem=100 -p demo hello-world-f90-slurm.sh
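The content of hello-world-f90-slurm.sh is not shown in this guide. As a rough sketch of what such a batch script could contain, the following writes a stand-in named hello-world-f90-sketch.sh (a hypothetical file name). It assumes that the spack command is available in the job environment on the compute nodes and that the executable hello-world-f from the previous section is in the submission directory. The real script may be organized differently.

```shell
# Write a sketch of a Slurm batch script to hello-world-f90-sketch.sh.
cat > hello-world-f90-sketch.sh <<'EOF'
#!/bin/bash
#SBATCH --job-name=hello-f90    # name shown by squeue (arbitrary)

# Make OpenMPI available inside the job, as done interactively before compiling.
eval $(spack load --sh openmpi arch=$(spack arch))

# Start the Fortran executable on all tasks of the allocation.
mpirun ./hello-world-f
EOF

# Submit it in the same way as the script referenced above:
#   sbatch -N 2 --ntasks-per-node=2 --mem=100 -p demo hello-world-f90-sketch.sh
```

Since no output file is requested, Slurm writes the job output to slurm-<job#>.out in the submission directory, which matches the file name mentioned above.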

1.10. Running the Linpack benchmark

The Linpack benchmark is used to rank supercomputers in the Top 500 list. That’s why on most clusters, it’s probably run as one of the first parallel programs to check functionality, stability and performance. If you have completed all the steps above, you will have created an optimized version of Linpack (using a current version of the OpenBLAS library) for your CPU type. Qlustar comes with a script to auto-generate the necessary input file for a Linpack run given the number of nodes, processes per node and total amount of RAM for the run.
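How the Qlustar script derives the input file from these values is not described here. A common rule of thumb, independent of that script, is to choose the problem size N so that the N x N matrix of 8-byte floating point numbers fills a fixed fraction of the total RAM, rounded down to a multiple of the block size NB. The sketch below illustrates this rule. hpl_problem_size is a hypothetical helper, and the defaults of 0.8 for the fraction and 192 for NB are illustrative assumptions, not values known to be used by Qlustar.

```shell
# Hypothetical helper: estimate an HPL problem size N.
# Usage: hpl_problem_size TOTAL_RAM_MIB [FRACTION] [NB]
#   TOTAL_RAM_MIB  total RAM of all participating nodes in MiB
#   FRACTION       share of RAM the matrix may use (assumed default: 0.8)
#   NB             HPL block size (assumed default: 192)
hpl_problem_size() {
    awk -v mem="$1" -v frac="${2:-0.8}" -v nb="${3:-192}" 'BEGIN {
        # N*N*8 bytes <= frac * RAM  =>  N <= sqrt(frac * RAM / 8)
        n = sqrt(frac * mem * 1024 * 1024 / 8)
        # round down to a multiple of the block size
        print int(n / nb) * nb
    }'
}

# Example: 4 demo nodes with 2048 MiB each
#   hpl_problem_size $((4 * 2048))
```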

The test user has some pre-defined shell aliases to simplify the submission of Linpack jobs. Type alias to see what’s available. They are defined in $HOME/.bash/alias. Example submission (assuming you have 4 running demo nodes):

0 testuser@cl-front ~ $ linp-4-demo-nodes

Check that the job is started (output should be similar):

0 testuser@cl-front ~ $ squeue
JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
   27      demo linstres     test  R       2:46      4 beo-[201-204]

Now ssh to one of the nodes in the NODELIST and check with top that Linpack is running at full steam, like:

0 testuser@beo-201 ~ $ top
  PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
18307 test      20   0  354m 280m 2764 R  100 28.0   6:42.92 xhpl
18306 test      20   0  354m 294m 2764 R   99 29.3   6:45.09 xhpl

You can monitor the output of each Linpack run in the files:

$HOME/bench/hpl/<arch>/job-<jobid>-*/job-<jobid>-*-<run#>.out

where <arch> is the spack architecture of the nodes, <jobid> is the Slurm JOBID (see the squeue command above) and <run#> is an integer starting from 1. The way the script is designed, it will run indefinitely, restarting Linpack in an infinite loop. So to stop it, you need to cancel the job like

0 testuser@cl-front ~ $ scancel <jobid>