Config Classes

Overview

Config Classes manage configurations that are too complex to fit into the key + value scheme used by properties. Therefore, there is no common interface to configure all classes. Instead, each class has its own configuration dialog presenting the specific options it provides. Furthermore, some classes depend on sub-classes (e.g. Boot Configs depend on Qlustar Images). Only the top-level Config Classes are directly assignable to a Config Set or a host. Sub-classes are assigned indirectly via their parent class. Most of the functional subsystems of Qlustar have a dedicated Config Class. Currently, there are five of them: Network, Boot, DHCP, Disk, and Slurm Configs (Slurm is optional), complemented by a single sub-class, Qlustar Images. Please note that Network Configs have already been described in a previous chapter.

Writing Config Files

Many of the configurations managed in the QluMan GUI via Config Classes and sub-classes are translated into automatically generated configuration files located in the filesystem of the head-node(s). While QluMan configuration options are usually saved in the QluMan database immediately after they have been entered in the GUI, writing the actual configuration files to disk is a separate step that must be explicitly initiated and confirmed.

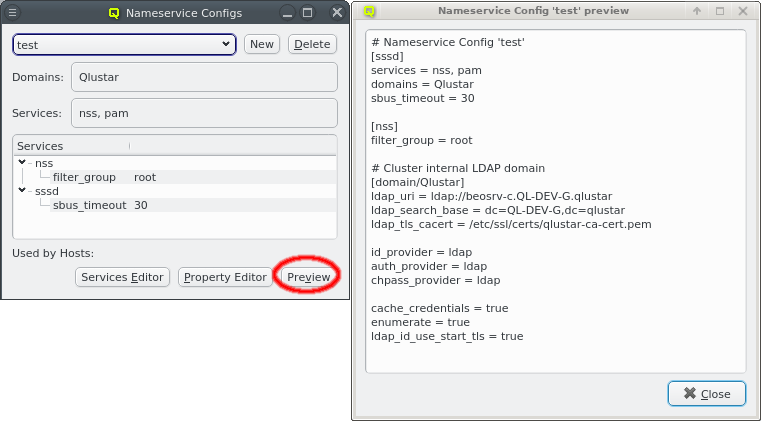

Each configuration dialog of a Config Class has a Preview button that opens the Write Files window with the config files of that class already expanded. If a Config Class has no pending changes, the Preview button becomes a View button, while its function remains the same.

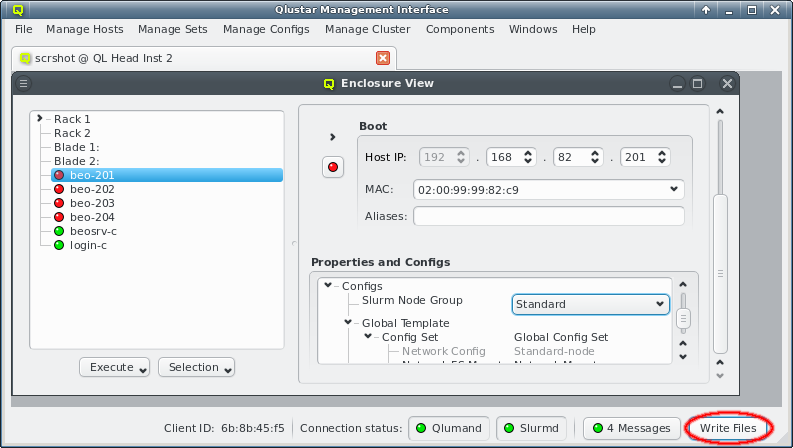

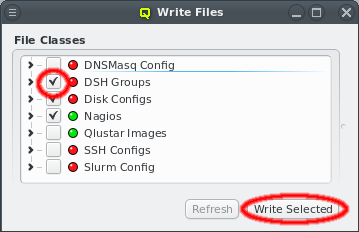

The Write Files window can also be opened from or via the Write Files button at the bottom right of the main window. This button indicates whether changes are pending: it is grayed out if there are none and fully visible otherwise.

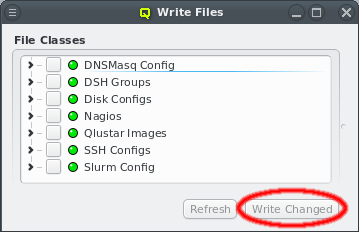

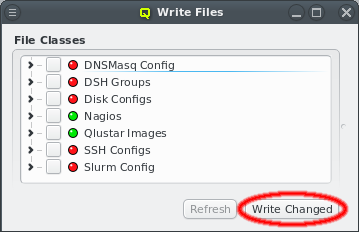

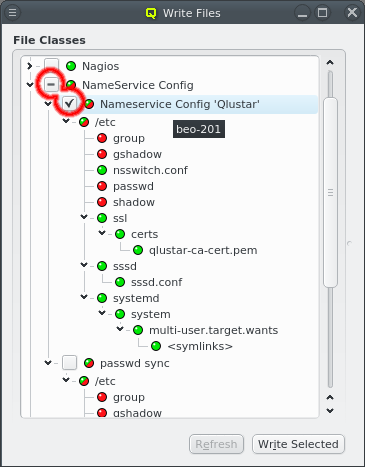

When the Write Files window is opened, it shows on the left the list of all QluMan Config Classes that may be written. Each Config Class has a status LED: it is red if changes are pending to be written, otherwise green. The files of all Config Classes with pending changes can be written by clicking the Write Changed button at the bottom. The button is grayed out if there are no changes.

Config Classes can also be written individually by setting the check-mark in front of each class. This converts the button at the bottom to Write Selected. Pressing it will then write the files of all checked classes, regardless of whether they have changes or not.

Writing a

The actual write command is performed via the Qlustar RXengine. This allows for consistent management of multiple head-nodes, e.g. in a high-availability configuration.

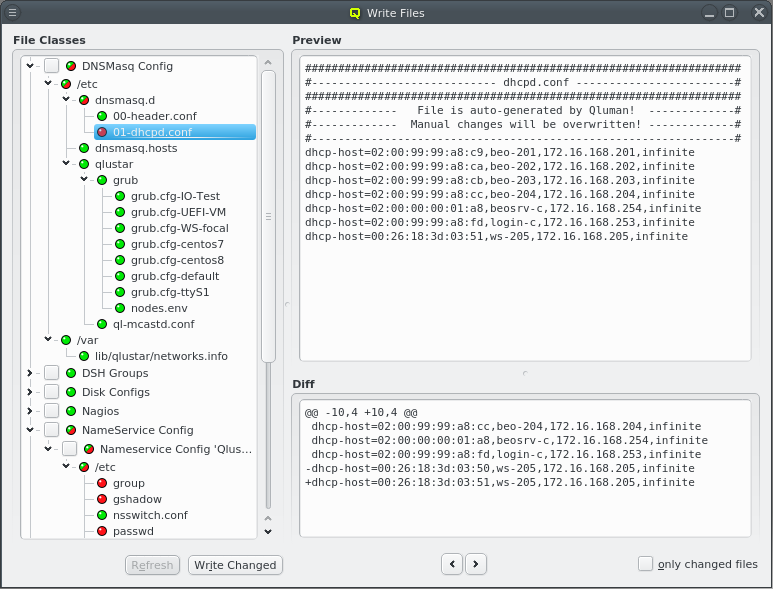

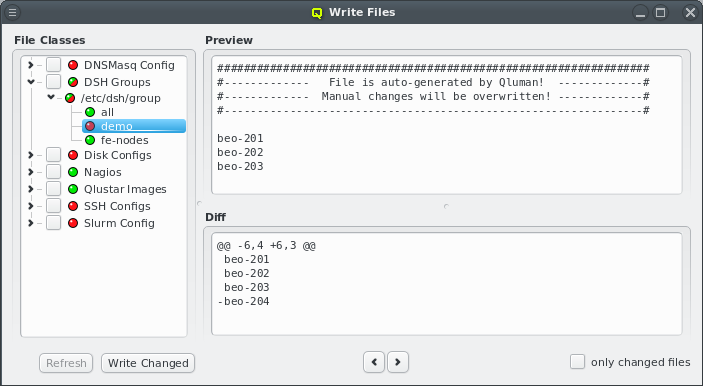

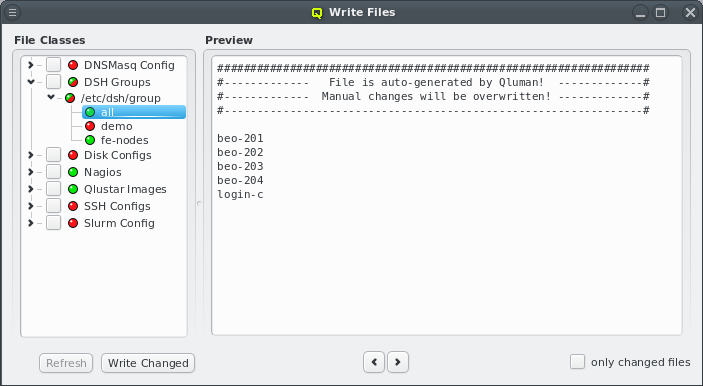

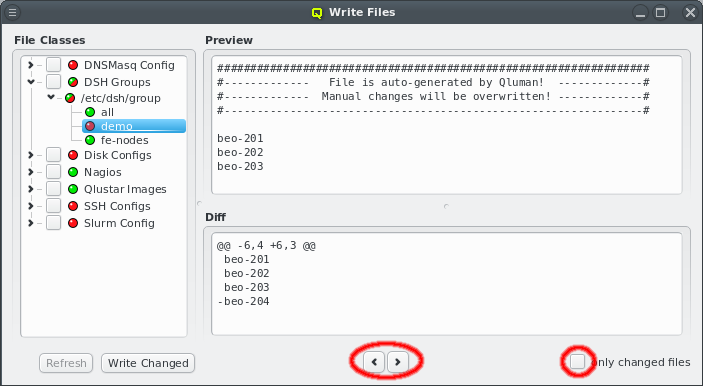

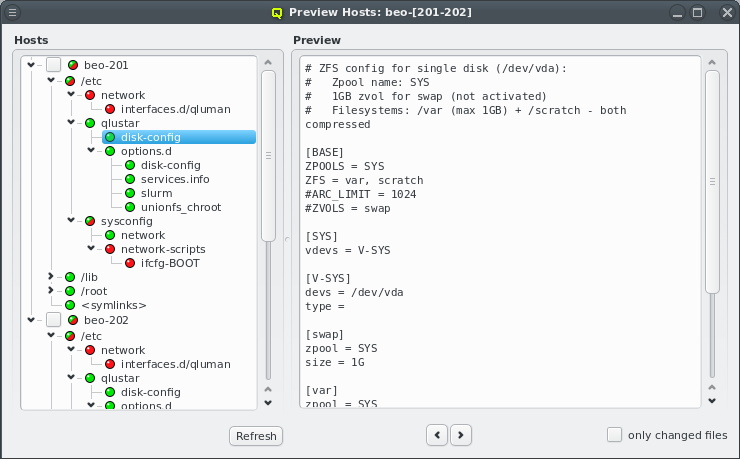

Before the generated files of a Config Class are written, they can be inspected by expanding its entry in the tree view. Under the hood, this expansion makes the GUI request the generated files from the QluMan server, together with a diff against the current files on disk. For the diff to work, the execd on the head-node needs to be up and running.

The generated files are shown in a tree structure where nodes represent directories and leaves the individual files. For compactness, directories with only one entry are combined into a single node. Each entry has its own status LED: it is red if changes are pending to be written, otherwise green. A red-green LED is shown if some files in a directory have changes and others do not. Selecting a file shows its contents on the right. If changes are pending, a diff of the changes is also shown below the contents.
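The LED rules above amount to a simple aggregation over the file tree. The following Python sketch illustrates them; it is not QluMan's implementation. The `Node` class, the helper names and the input format (a mapping from a relative path to a "has pending changes" flag) are hypothetical, and the sketch assumes that only chains of directories are merged during compaction, never a directory with the single file it contains.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    changed: bool = False                          # used for files (leaves) only
    children: dict = field(default_factory=dict)   # name -> Node


def build_tree(files):
    """Build a directory tree from {'etc/slurm/slurm.conf': True, ...}."""
    root = Node("")
    for path, changed in files.items():
        node = root
        *dirs, leaf = path.split("/")
        for d in dirs:
            node = node.children.setdefault(d, Node(d))
        node.children[leaf] = Node(leaf, changed)
    return root


def compact(node):
    """Merge a directory whose only entry is a sub-directory into one node."""
    node.children = {c.name: c for c in (compact(ch) for ch in node.children.values())}
    if node.name and len(node.children) == 1:
        (only,) = node.children.values()
        if only.children:                          # assumption: never merge into a file
            return Node(f"{node.name}/{only.name}", children=only.children)
    return node


def led(node):
    """'red' = all pending, 'green' = nothing pending, 'red-green' = mixed."""
    if not node.children:
        return "red" if node.changed else "green"
    states = {led(c) for c in node.children.values()}
    return states.pop() if len(states) == 1 else "red-green"
```

A directory only turns fully red when every file below it has pending changes; any mixture propagates upwards as red-green.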

In some cases, different files or just different contents are written to different hosts, e.g. in the Nameservice Configs class. In such cases, expanding the top-level node reveals a second level of check-boxes, each with its own directory structure below it. Each of them represents a different config for the class, and hovering over a check-box line shows the hostlist this config will be written to. Clicking one of the second-level check-boxes selects or deselects the corresponding config for writing. In that case, the first-level check-box indicates a partial selection with a dash.

The Write Changed button will only write those instances of such config classes that have changes, unless other instances are explicitly checked to force a write. This requires that the config class has been inspected before. Otherwise, the detailed information about changes is not available, and all instances of the config class will be written.

Besides selecting files from the tree, there is a second method of navigating between files. At the bottom of the right side, there are two arrow buttons that switch to the previous and next file in the tree, respectively. This makes it possible to browse quickly through all files with single clicks, without having to move the mouse. By default, the Prev and Next buttons cycle through all files. After checking the Only changed files checkbox, they only switch to files with pending changes.
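The Prev/Next behavior can be modeled as a wrap-around search over the files in tree order. This is a minimal sketch, assuming that cycling wraps from the last file to the first (and vice versa) and that the view stays put when no file matches the filter; the function name and the list-of-tuples input are hypothetical.

```python
def step(files, current, direction, only_changed=False):
    """Index of the file shown after pressing Prev (direction=-1) or Next (+1).

    files: list of (path, has_pending_changes) in tree order.
    The search wraps around at both ends of the list.
    """
    n = len(files)
    for offset in range(1, n + 1):
        idx = (current + direction * offset) % n
        if not only_changed or files[idx][1]:
            return idx
    return current  # nothing matches the filter: stay on the current file
```

For example, with `files = [("a", False), ("b", True), ("c", False), ("d", True)]`, pressing Next on the last file with Only changed files checked jumps back to `"b"`.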

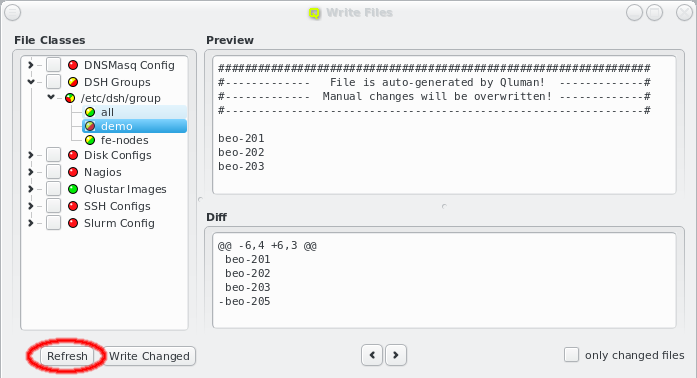

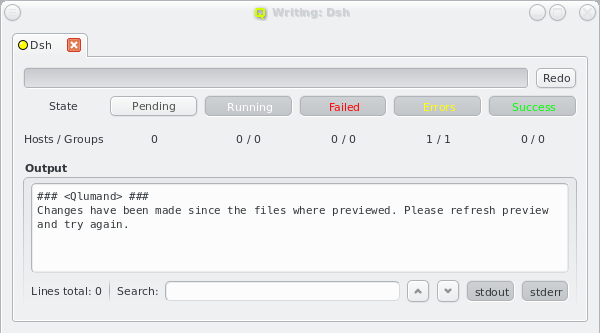

While the Write Files window is open, further changes may be made to the cluster configuration, either by the current user or by another one. The Write Files window detects this: a yellow component is added to all LEDs and the Refresh button at the bottom is activated. Until the latter is clicked, the displayed information does not reflect the latest changes, and any attempt to write will fail with an error message. This prevents the activation of files whose content differs from what has been previewed.
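One way to picture this safeguard is a revision check: the window remembers which state of the configuration its preview was built from and refuses to write once that state is outdated. The sketch below is hypothetical (QluMan's internal mechanism is not documented here); the revision counter and all class names are assumptions made for illustration.

```python
class StalePreviewError(RuntimeError):
    """Raised when writing files that no longer match the preview."""


class ConfigDatabase:
    """Hypothetical stand-in: a revision counter bumped on every change."""

    def __init__(self):
        self.revision = 0

    def record_change(self):
        self.revision += 1


class WriteFilesWindow:
    def __init__(self, db):
        self.db = db
        self.shown_revision = db.revision     # revision the preview was built from

    def needs_refresh(self):
        """True -> yellow LED component and an active Refresh button."""
        return self.db.revision != self.shown_revision

    def refresh(self):
        self.shown_revision = self.db.revision  # regenerate the preview here

    def write(self):
        if self.needs_refresh():
            raise StalePreviewError("configuration changed since the preview, refresh first")
        return self.shown_revision
```

Refusing the write, rather than silently writing the newer state, guarantees that whatever lands on disk is exactly what the user has reviewed.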

Generating the files for each This delay reduces the load on the server if multiple changes are made within a short time. The downside is that the LEDs can turn red or yellow for a short time, even though no actual change exists. Clicking the Refresh button in this situation will abort the delay and generate the files for each

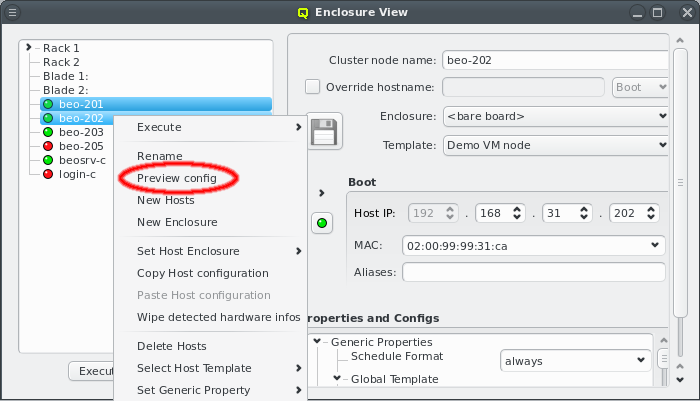

Host-specific Configs

Various configurations managed in the QluMan GUI via Config Classes and sub-classes translate into automatically generated configuration files for the individual hosts. In the pre-systemd phase of their boot process, these files are sent to the hosts and written by their execd. Currently, there is no general mechanism to update these files on running nodes, so changes only take effect during the next boot. A preview of the generated configs can be initiated by selecting Preview config from the host’s context-menu. More than one host may be selected for this.

Changes to the current config files of a host will only be shown if the host is online. If the host is offline (for example due to network problems) but not powered down, possible changes might not be shown.

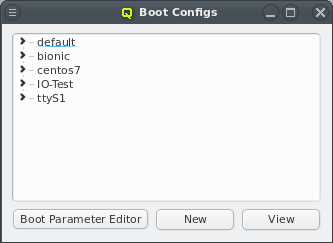

Boot Configs

The Boot Config dialog allows defining settings for the PXE/tftp boot server. A boot configuration determines which Qlustar OS image is delivered to a node, and optionally permits the specification of PXELinux commands and/or Linux kernel parameters. When opened, the Boot Config window shows a collapsed tree-list of all boot configs currently defined, sorted by their names.

Note that the

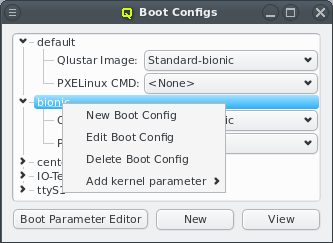

By expanding a Boot Config item, the configured Qlustar image, PXELinux command, and kernel parameters become visible. You can change any of the values by simply selecting a different option from the drop-down menus. Kernel parameters can also be edited directly; press Enter to save the result. Furthermore, multiple kernel parameters can be added or removed through the context-menu. Each selected kernel parameter will be added to the kernel command line.

The context-menu also lets you create new Boot Configs and edit or delete an

existing one. Alternatively, a new Boot Config can be created by clicking the

New button at the bottom of the dialog. Both, the context-menu and the button

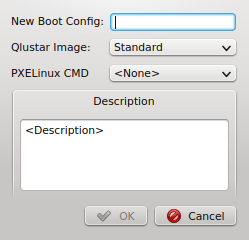

bring up the New Boot Config dialog. Simply enter the name and description for the new

config, select a Qlustar image and (optionally) a PXELinux command. Finally press

OK to create it. The new config will then appear in the Boot Config window and

will be ready for use.

Pressing the Boot Parameter Editor button at the bottom of the dialog brings up a small edit dialog, where kernel parameters can be created, edited, or deleted.

Disk Configs

Qlustar has a powerful mechanism to manage the configuration of disks on a node. It basically allows for any automatic setup of your hard drives including any ZFS/zpool variant, kernel software RAID (md) and LVM setups.

Since the OS of a Qlustar net-boot node always runs from RAM, a disk-less configuration is obviously also possible. Valid disk configurations require definitions for two filesystems, /var and /scratch; swap space is optional (see examples). To permit the initial formatting of a new disk configuration on a node, the generic property Schedule Format: always must be assigned to the node during its initial boot.
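The two requirements in the previous paragraph can be expressed as simple checks. This is only an illustrative sketch: the actual Qlustar disk config syntax is not parsed here, so the input (a plain list of declared mountpoints) and both function names are hypothetical.

```python
REQUIRED_FILESYSTEMS = ("/var", "/scratch")   # per the text; swap is optional


def check_disk_config(mountpoints, diskless=False):
    """Return a list of problems for a (hypothetical) parsed disk config."""
    if diskless:
        return []                              # RAM-only nodes need no filesystems
    declared = set(mountpoints)
    return [f"missing filesystem {fs}" for fs in REQUIRED_FILESYSTEMS
            if fs not in declared]


def may_format(generic_properties):
    """Initial formatting needs 'Schedule Format: always' at boot time."""
    return generic_properties.get("Schedule Format") == "always"
```

For instance, `check_disk_config(["/var", "swap"])` reports `["missing filesystem /scratch"]`, while the swap entry is accepted but not required.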

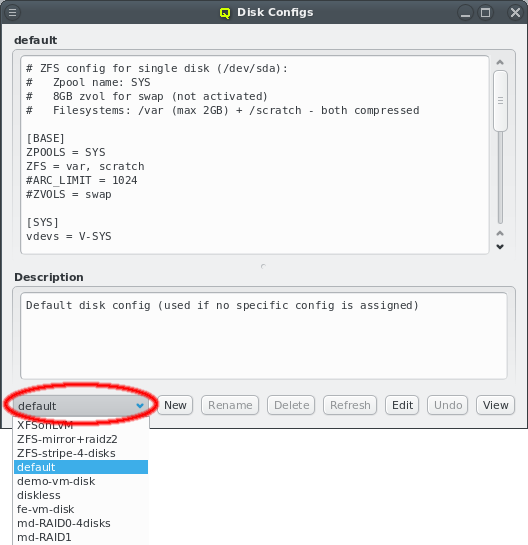

Disk configurations can be managed using the Disk Configs dialog accessible from the main menu . You can select the config to be viewed/edited from the drop-down menu at the bottom left. A couple of example configurations are created during installation. Note that there are two special configs: (a) disk-less (neither editable nor deletable) and (b) default (editable but not deletable). The default config is used for any node that doesn’t have a specific assignment to a disk config (via a Host Template or Config Set).

The configuration itself can be edited in the text field at the top of the dialog. New configs can be created by choosing New disk config from the drop-down menu. As usual, enter the name of the new config in the text field and fill in the contents and description.

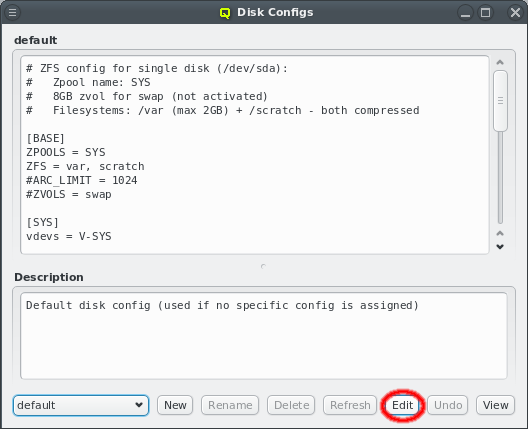

To prevent multiple QluMan users from editing the same config simultaneously and accidentally overwriting each other’s changes, a lock must be acquired for the template by clicking the Edit button. If another user is already editing the config, the button will be ghosted and the tool-tip will show which user is holding a lock for it.

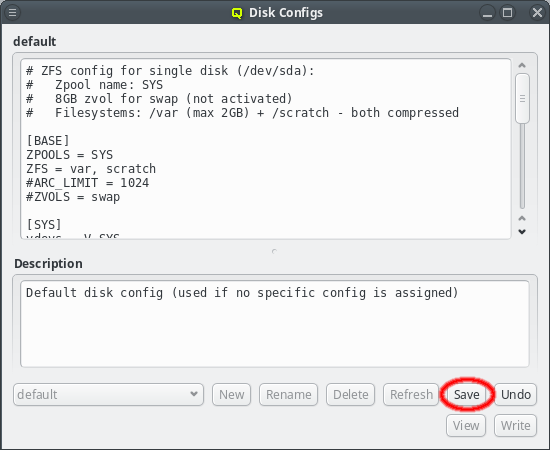

After having finished editing a template, don’t forget to save your changes by clicking the Save button. It will be ghosted if there is nothing to save. You can undo all your changes up to the last time the template was saved by clicking the Undo button. In case another admin has made changes to a disk config while you are viewing or editing it, the Refresh button will become enabled. By clicking it, the updated disk config is shown and you lose any unsaved changes you have already made in your own edit field. To delete a disk config, click the Delete button.

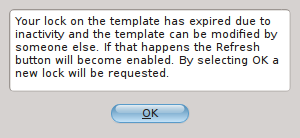

The template lock expires automatically after some time without activity so that the template is not dead-locked if someone forgets to release the lock. In such a case an info dialog will pop up to notify you about it. By selecting OK a new lock will be requested. If another user is starting to edit the template at exactly that time though, the request will fail and an error dialog will inform you of the failure.
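The expiring template lock behaves like a lease. The sketch below models it under stated assumptions: the timeout value, the injectable clock and the class and method names are all hypothetical, since the actual expiry period is not given here.

```python
import time


class EditLock:
    """Lease-style edit lock that expires after `timeout` seconds of inactivity."""

    def __init__(self, timeout=600.0, clock=time.monotonic):
        self.timeout = timeout            # assumed value, not QluMan's
        self.clock = clock
        self.holder = None
        self.last_activity = 0.0

    def _expired(self):
        return self.holder is not None and self.clock() - self.last_activity > self.timeout

    def acquire(self, user):
        """Grant the lock if it is free, already held by `user`, or expired."""
        if self.holder is None or self.holder == user or self._expired():
            self.holder = user
            self.last_activity = self.clock()
            return True
        return False                      # Edit stays ghosted; tool-tip names self.holder

    def touch(self, user):
        """Record activity; False means the lock has expired in the meantime."""
        if self.holder == user and not self._expired():
            self.last_activity = self.clock()
            return True
        return False

    def release(self, user):
        if self.holder == user:
            self.holder = None
```

After expiry, re-requesting the lock (the OK in the info dialog) succeeds unless another user grabbed it first, which matches the failure case described above.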

Network Filesystem Exports/Mounts

QluMan also supports the configuration and management of Network Filesystem (FS) and bind mounts for cluster nodes. The setup for this consists of two parts:

- For a network FS, a Filesystem Exports resource must be defined using the dialog at Manage Cluster→Filesystem Exports.
- A Network FS Mounts config must be created using the dialog at .

Such a config may contain multiple network and bind mount definitions. As with other config classes, once defined, it can be assigned to nodes through the Global or a Host Template, a Config Set, or direct assignment.

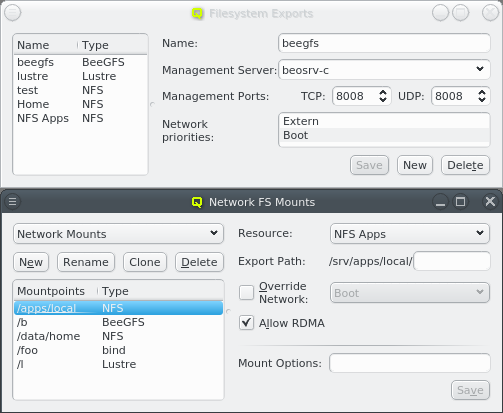

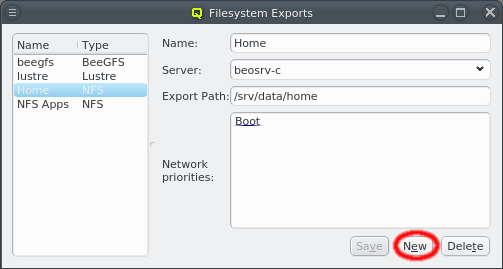

Filesystem Exports

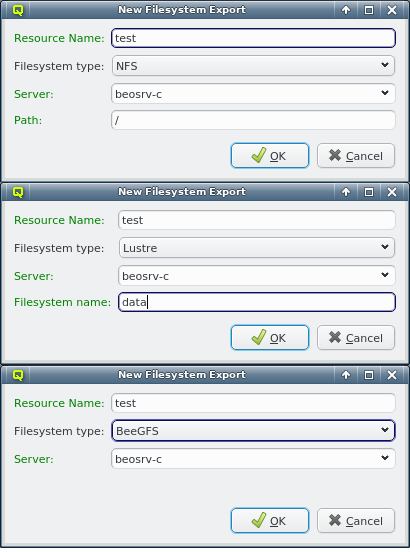

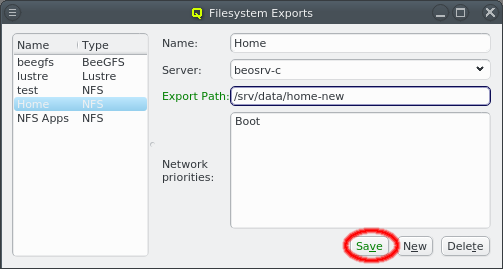

The Filesystem Exports dialog shows the list of exported filesystems by name and FS type. Selecting an entry shows the details of this FS export on the right. A new Filesystem Exports resource can be added by clicking the New button. This requires choosing a unique name that will be used inside QluMan to identify the resource. The Resource Name field will turn green if the entered name is unique. QluMan currently supports three types of network filesystems: NFS, Lustre and BeeGFS. The FS type of the resource can be selected from the drop-down menu.

Next, the server exporting the FS has to be selected. The default is beosrv-c, the cluster-internal hostname of the head-node, since it is the most likely server to export a FS. Using the drop-down menu, the server can be selected from a list of servers already used for other exports. To use a new server, its name has to be entered manually. It can be any hostname known to QluMan. The Server label will turn green if the entered name is a known host. This includes all nodes configured in the Enclosure View and any cluster-external host defined in .

For a

For a

The remaining options depend on the selected FS type. In case of NFS, the path of the FS to be exported on the server has to be entered. Because the path will later be used in a systemd mount unit file, there are some restrictions on its syntax. For example, the path must start with a "/" and must not have a trailing "/". The Path label will turn green if the entered path is acceptable, otherwise it will turn red.
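The reason for these restrictions is that systemd derives the name of a mount unit from its mountpoint path by escaping it. The following simplified Python sketch mimics `systemd-escape --path --suffix=mount` for plain paths (it skips, for example, the handling of "." and ".." components) and adds a check for the two rules named above. Both function names are hypothetical, and QluMan's own validation may be stricter.

```python
import string

# Characters systemd leaves unescaped in unit names (besides "/" -> "-").
SAFE = set(string.ascii_letters + string.digits + ":_.")


def path_is_acceptable(path):
    """The two rules named in the text: leading "/", no trailing "/"."""
    return len(path) > 1 and path.startswith("/") and not path.endswith("/")


def mount_unit_name(path):
    """Simplified equivalent of `systemd-escape --path --suffix=mount PATH`."""
    trimmed = "/".join(part for part in path.split("/") if part)
    if not trimmed:
        return "-.mount"                      # the root filesystem
    out = []
    for i, byte in enumerate(trimmed.encode("utf-8")):
        ch = chr(byte)
        if ch == "/":
            out.append("-")
        elif ch in SAFE and not (i == 0 and ch == "."):
            out.append(ch)
        else:
            out.append("\\x%02x" % byte)      # e.g. "-" becomes \x2d
    return "".join(out) + ".mount"
```

For example, a mountpoint `/srv/nfs-home` maps to the unit `srv-nfs\x2dhome.mount`, which shows why unusual characters in paths quickly lead to unreadable unit names.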

For a Lustre resource, the Lustre FS name has to be specified. Lustre limits this name to eight characters and, again to avoid complications in the systemd mount unit file later, only alphanumeric characters and some punctuation will be accepted.

In the case of BeeGFS, you have the option to define the TCP and UDP ports on which the management server listens for this FS resource. If the management server manages just one BeeGFS FS, the default ports are usually fine.

Once all fields are entered correctly, the OK button will be enabled and the export definition can be added. It will then appear in the Filesystem Exports window.

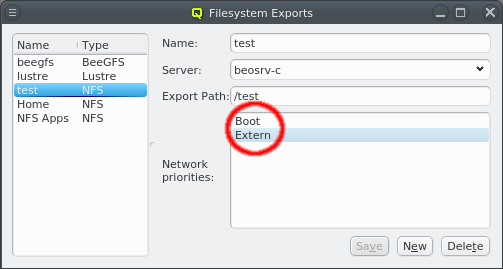

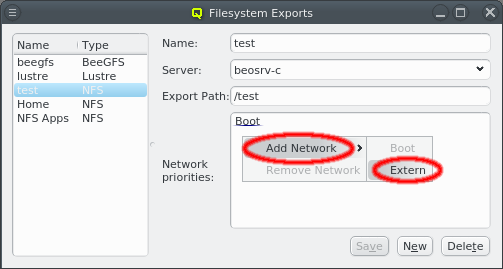

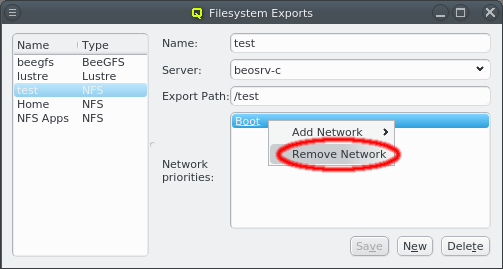

QluMan automatically adds the networks available on the selected server(s) to the Network priorities. Later, when a node boots and requests its config files from the head-node, the networks available on the client are checked against this list, and the first common entry determines the network over which the FS will be mounted. Shown entries can be removed, or additional networks added, from the context-menu. Entries can also be moved up or down using drag&drop. This is useful e.g. to ensure that an NFS export is mounted via InfiniBand/RDMA on all hosts that are connected to the IB fabric and via Ethernet on nodes without IB.
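The selection rule is "first entry of the priority list that the client also has". A minimal Python sketch, using made-up network names; the optional `override` parameter models the Override Network setting of an individual mount, which takes precedence over the priorities. The function name is hypothetical.

```python
def mount_network(priorities, client_networks, override=None):
    """Network over which a client mounts an export.

    priorities: the export's Network priorities list, highest first.
    client_networks: networks the booting client is attached to.
    override: network forced via 'Override Network' on the mount, if any.
    """
    if override is not None:
        return override
    available = set(client_networks)
    return next((net for net in priorities if net in available), None)
```

With priorities `["IB", "Boot"]`, a node attached to both networks mounts via `"IB"`, while a node without IB falls back to `"Boot"`; a node sharing no network with the list gets `None`.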

If the selected server is cluster-external, there is obviously no choice of network priorities.

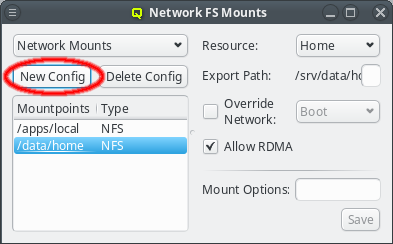

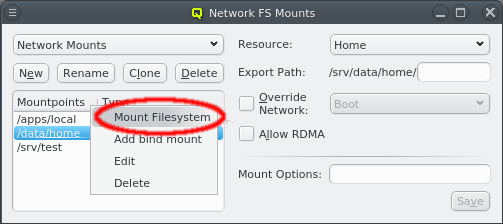

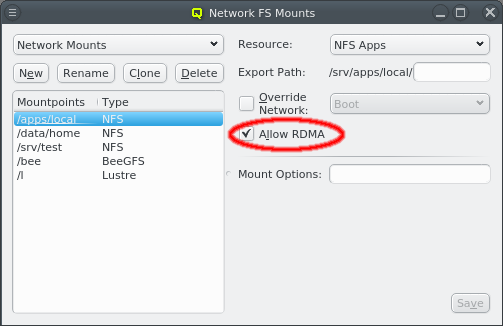

Network Filesystem Mounts

Once Filesystem Exports have been defined, they can be used to configure Network FS Mounts configs. Each config is a collection of filesystem mounts combined with their mount options. As usual, such a config can be assigned to hosts either directly or indirectly through a template. Only one Network FS Mounts config can be assigned per host, so all mounts that should be available on the booted node must be added to it. Click the New Config button to create a new Network FS Mounts config.

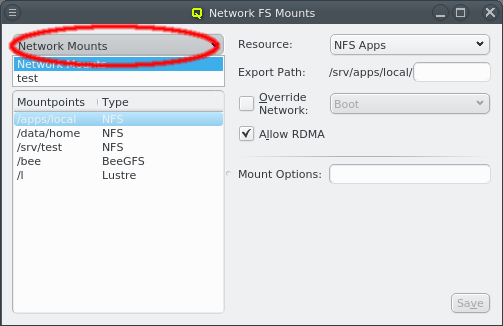

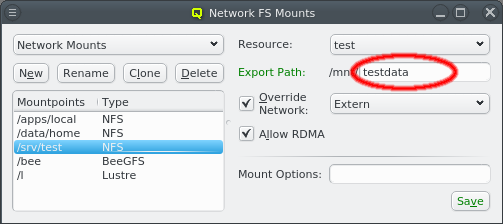

A newly created Network FS Mounts config will be automatically selected for viewing and editing. Previously defined configs may be selected from the drop-down menu in the top left. Below that, the list of mountpoints for the selected config is shown along with the FS type of each mount. Selecting one of the mountpoints will show its configuration details on the right.

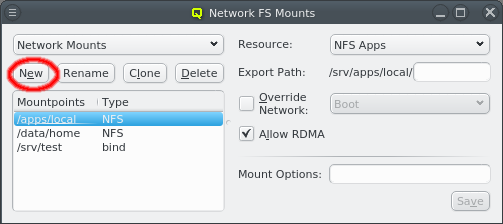

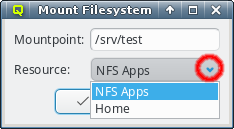

A mount definition can be deleted or a new one added to the config from the context-menu. To define a new one, enter the path where the FS should be mounted in the Mount Filesystem dialog. Also select one of the Filesystem Exports resources declared earlier from the drop-down menu. In most cases, this information is already sufficient. The next time a node assigned to this Network FS Mounts config boots, it will mount this FS.

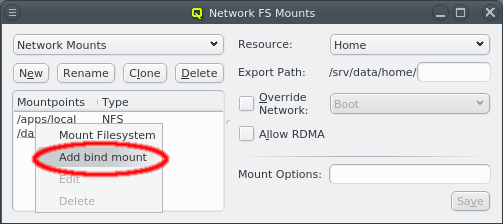

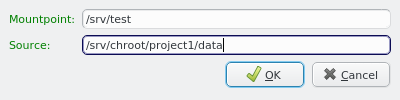

A bind mount can be added in a similar way. However, instead of selecting an external resource to be mounted, the source path of the bind mount has to be specified. QluMan is unable to verify the existence of the specified path, so it is worth double-checking the path before adding the bind mount config.

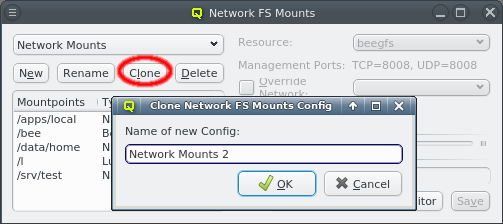

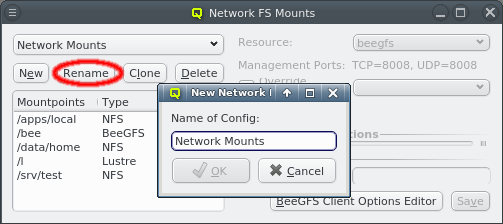

The name of a Network FS Mounts config can be changed by clicking the Rename button.

Sometimes one wants to mount an additional Network FS on a particular node. But since each host can only ever have a single Network FS Mounts config, a new config must be created for this host, even if all other required mounts are the same as on other nodes. To simplify this, the Clone button can be clicked to create a clone of an existing config. The new config can then be edited to include the additional Network FS.

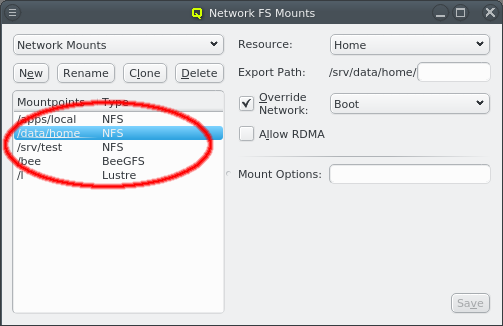

Advanced common mount options

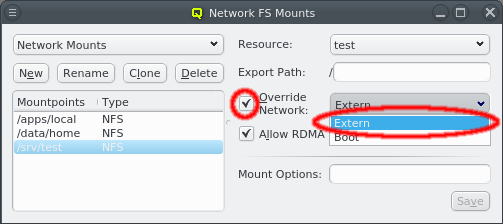

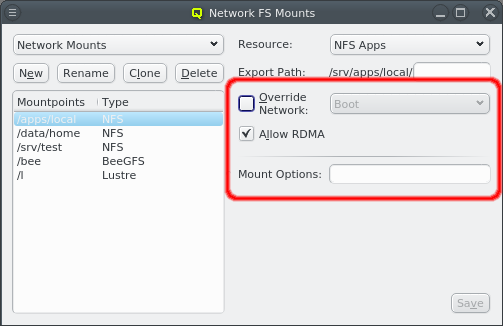

To set special options for a FS mount, first select the corresponding mountpoint from the list on the left. Once it is selected, there are advanced common options that can be set for all FS types (except bind mounts, which have fewer options).

-

The automatic selection of the network used to mount the FS may be overridden. First the override must be activated by setting the check-mark for Override Network. A network can then be selected from the drop-down menu to force the mount to use this particular one regardless of what the network priorities of the associated export resource say.

-

Qluman will automatically detect if an IB network is being used to mount a Network FS and will use RDMA (remote direct memory access) for improved performance at lower CPU load. To mount a Network FS without using RDMA that feature has to be disabled for the mount by clearing the Allow RDMA checkbox.

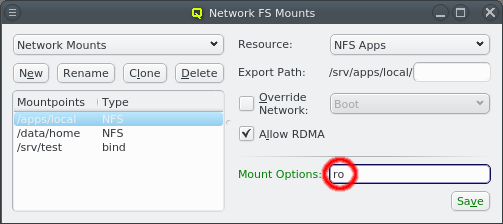

-

Last, any option that the mount command accepts for a mount can be set in the Mount Options field. There are too many of them to explain them all here. Please refer to

man mountfor the full list of possible options and their meaning.

After editing the mount options, be sure to press Enter or click the Save button to save the changes.

Filesystems are only mounted on boot. Any changes made to a

Advanced NFS mount options

For NFS filesystems, a sub-directory can be added to the Source Path to mount just a part of the exported FS.

There are also a number of custom mount options specific to NFS. Please refer to man nfs for the full list of possible options and their meaning. After editing either the source path or the mount options, be sure to press Enter or click the Save button to save the changes.
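To make the interplay of export, sub-directory, mount options and RDMA concrete, the following sketch renders a generic systemd mount unit for an NFS mount. It is hypothetical and not the file QluMan actually generates; the helper name, the server/path values in the example and the choice of `proto=rdma` (the NFS-over-RDMA option documented in nfs(5)) to represent Allow RDMA are assumptions. `What=`, `Where=`, `Type=` and `Options=` are standard `[Mount]` keys from systemd.mount(5).

```python
def nfs_mount_unit(server, export_path, where, subdir="", options=(), rdma=False):
    """Render a systemd .mount unit for an NFS mount (illustrative only)."""
    opts = list(options)
    if rdma and not any(o.startswith("proto=") for o in opts):
        opts.append("proto=rdma")             # NFS over RDMA, see nfs(5)
    what = f"{server}:{export_path}"
    if subdir:
        what += "/" + subdir.strip("/")       # mount only part of the export
    lines = [
        "[Unit]",
        f"Description=NFS mount {where}",
        "",
        "[Mount]",
        f"What={what}",
        f"Where={where}",
        "Type=nfs",
    ]
    if opts:
        lines.append("Options=" + ",".join(opts))
    return "\n".join(lines) + "\n"
```

For example, `nfs_mount_unit("beosrv-c-ib", "/srv/apps", "/apps", subdir="x86", options=["vers=4.2"], rdma=True)` yields `What=beosrv-c-ib:/srv/apps/x86` and `Options=vers=4.2,proto=rdma`, where the hostname is a made-up example.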

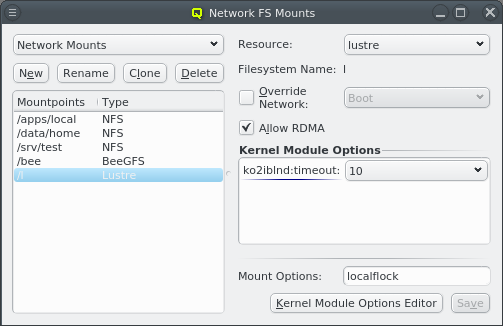

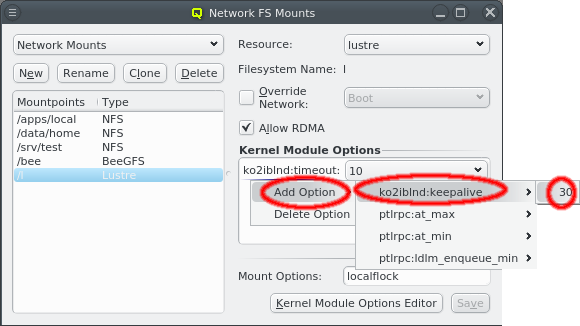

Advanced Lustre mount options

For Lustre filesystems, some advanced settings may be set via kernel module parameters. QluMan pre-defines commonly used parameters together with their suggested default values. They may be added using the context-menu in the Kernel Module Options box. Additional options or values can be added using the Kernel Module Options Editor. This works the same way as for generic properties. New options must take the form module_name:option_name. Please refer to the Lustre documentation for a list of available parameters and their meaning.

Per default, new

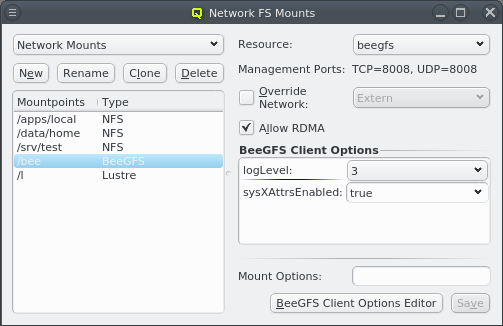

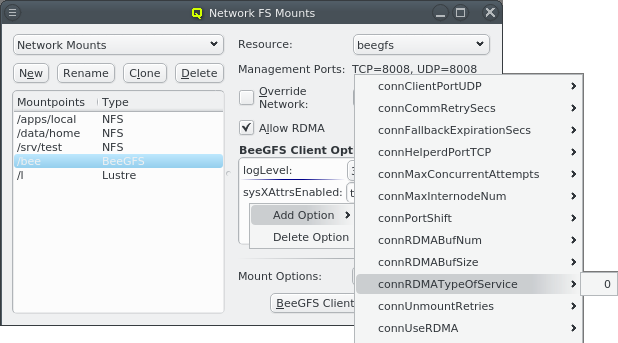

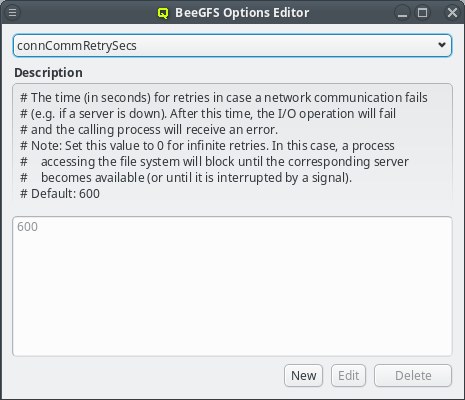

Advanced BeeGFS mount options

BeeGFS clients allow a lot of customization. For most options, the default values are sufficient and don’t have to be explicitly set. Anything diverging from the defaults can be added via the BeeGFS Client Options box. The options most likely to be added are quotaEnabled (to enable support for quotas), sysACLsEnabled (to enable support for POSIX ACLs) and sysXAttrEnabled (to enable support for extended attributes).

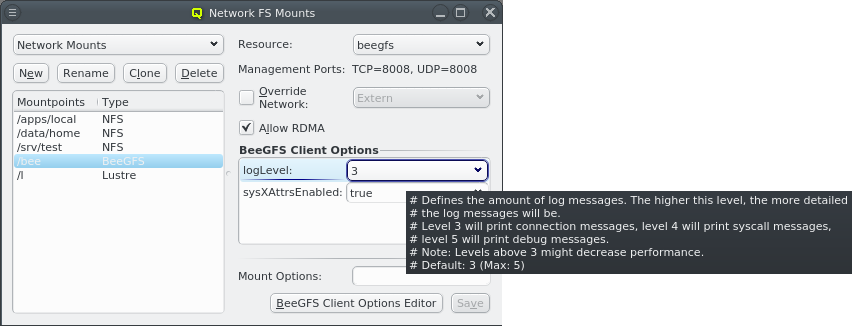

The pre-defined BeeGFS client options shown by QluMan are automatically generated from the example configuration file distributed in the BeeGFS packages. Each option has a description that can be seen as a tool-tip when hovering over an option that was already selected. The same description is also shown in the BeeGFS Client Options Editor for the option that is selected there. The editor can be opened by clicking the BeeGFS Client Options Editor button and works the same way as for generic properties.

For options where a default value is provided in the example config file, this value will be pre-defined and immutable in QluMan’s BeeGFS Client Options Editor. In case of boolean options, both true and false will be pre-defined regardless of the default. For other options, additional values must be added using the editor before they can be assigned to a BeeGFS mount config entry.
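The rules for pre-defined values, and the idea that only settings diverging from the defaults need to be written, can be summarized in a short sketch. The function names, the string representation of defaults and the example values are assumptions made for illustration; the `key = value` line format is the one used by BeeGFS client config files.

```python
def predefined_values(default):
    """Values the editor offers for an option, given its example-file default."""
    if default in ("true", "false"):
        return {"true", "false"}   # booleans: both values, whatever the default
    if default is None:
        return set()               # no default: values must be added in the editor
    return {default}               # the example default, immutable


def client_conf_lines(defaults, chosen):
    """Render only the options that diverge from the defaults, as 'key = value'."""
    return [f"{name} = {value}" for name, value in sorted(chosen.items())
            if defaults.get(name) != value]
```

For example, with assumed defaults of `"false"` for both `quotaEnabled` and `sysACLsEnabled`, choosing `quotaEnabled = true` produces the single line `quotaEnabled = true`.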

Options without a default, like e.g.

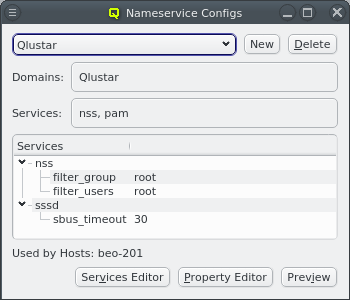

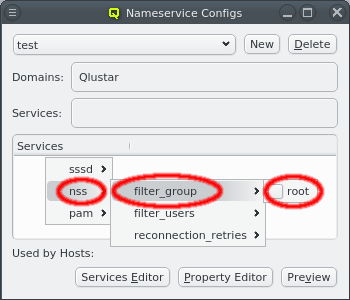

Nameservice Configs

Qlustar supports LDAP back-ends to authenticate users/groups via the system security services daemon (sssd) and its NSS/PAM interfaces. Different sssd configurations can be used for different groups of hosts in the cluster, or none at all. To manage these configs, select from the main menu, which opens the Nameservice Configs window.

Like all other config classes, a Nameservice Config can be assigned to hosts through the Global or a specific Host Template or by assigning a Config Set/Config Class directly to a host. For hosts with such an assignment, sssd will be configured and started automatically during boot.

During installation, the Qlustar Nameservice Config has already been created. For clusters without the need for external Nameservice Providers, this is all that is required to support the cluster-internal LDAP users/groups. Assign this config to all nodes on which users should be allowed to log in with their password (typically FE nodes) and leave other nodes without a Nameservice Config assigned.

During the boot phase of a host with no assigned Nameservice Config, Since Qlustar cluster nodes are configured with ssh host-based authentication, passwords are not needed on those nodes for normal users, hence password changes are irrelevant on these nodes. On the other hand, when users/groups are added via QluMan, this mechanism requires an explicit write of the Nameservice Configs class as an additional step to initiate a corresponding update of the

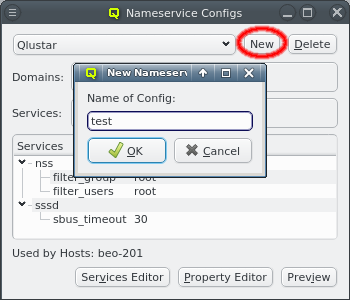

A new Nameservice Config can be created by clicking New and entering the name of the new config. For a working setup, you will have to add at least one domain as well as services and their options.

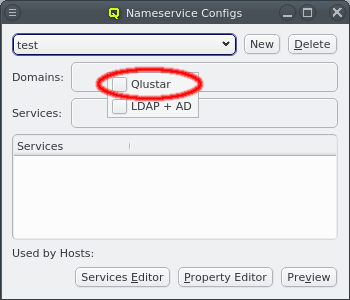

Domains can be added or removed through the context-menu available when the cursor is over the Domains box. It shows a list of the configured Nameservice Providers. The checkmark in front of each entry indicates whether the corresponding provider is selected for this config. Clicking it will toggle the checkmark to either add or remove the provider.

Services can be added to the config via the context-menu of the Services box. Both nss and pam are required for user authentication to work. Other sssd services must first be added manually by clicking the Services Editor and then the New button. In this editor, services can be selected and removed by clicking the Delete button.

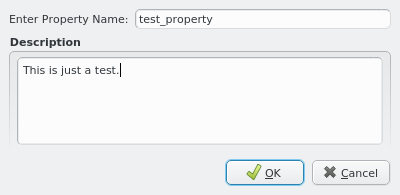

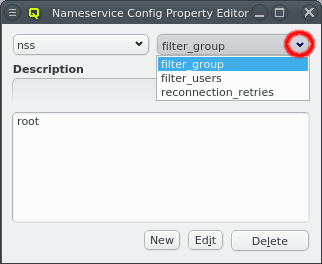

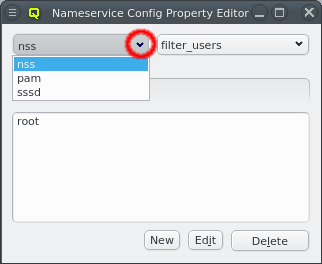

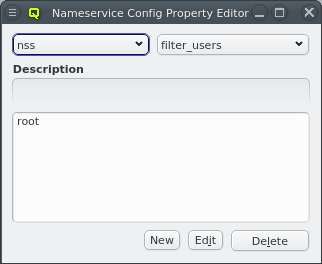

Services can be configured by adding options via the context-menu for the Services tree-view at the bottom. You might notice a sub-menu for sssd. This sets options for the sssd daemon itself and is not really a service. Additional config options can be added by clicking the Property Editor button to open the Nameservice Config Property Editor.

The latter is a bit more complex than other property editors as options are specific to the different services. First select the service you want to work on and then the property for which you want to add, remove or edit values. If the property does not exist yet, click the New button to create it. Consult the sssd man pages for valid properties/values. The property name can be edited by clicking the Edit button. Properties may be deleted via the Delete button.

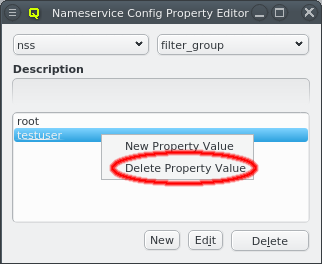

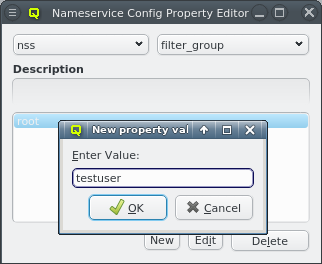

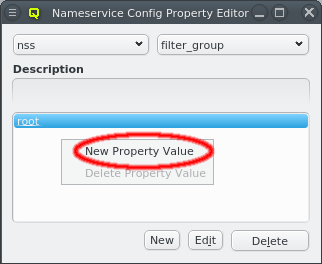

Once the service and property have been selected, the property values can be edited. A new value may be added by selecting New Property Value from the context-menu of the values box at the bottom. The same context-menu also allows deleting selected values for the property.

The full sssd config including all domains and services can be previewed by clicking the Preview button. This will show the resulting sssd.conf of hosts assigned to this Nameservice Config.
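How domains, services and the sssd options map onto sections of sssd.conf can be illustrated with Python's `configparser`. This is a sketch of the general sssd.conf layout ([sssd], one section per service, one [domain/NAME] section per domain), not QluMan's generator; the function name and the example LDAP values are hypothetical.

```python
import configparser
import io


def render_sssd_conf(domains, services, sssd_options=None):
    """Assemble an sssd.conf-style text.

    domains:  {domain name: {option: value}}
    services: {service name, e.g. 'nss' or 'pam': {option: value}}
    sssd_options: options for the [sssd] section itself (the 'sssd' sub-menu).
    """
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str                     # keep option names exactly as given
    cfg["sssd"] = {
        "config_file_version": "2",
        "services": ", ".join(services),
        "domains": ", ".join(domains),
        **(sssd_options or {}),
    }
    for name, options in services.items():
        cfg[name] = options
    for name, options in domains.items():
        cfg[f"domain/{name}"] = options
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()
```

Calling it with one LDAP domain and the two required services `nss` and `pam` yields a file with the sections `[sssd]`, `[nss]`, `[pam]` and `[domain/LDAP]`.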

Qluman Timers

Qluman timers allow the execution of commands on nodes at preset times. Commands can be repeated at regular intervals and for a limited duration. For timers with a duration, a second command can be executed at the end of the duration interval. Commands can also be defined to execute during or after completion of the boot process for actions that should run at those specific times.

Like other config classes, QluMan timer configs apply only to net-boot nodes, not to the cluster head-node(s). Although they may be assigned to a host with the generic property/value Host Role/Head Node (which defines a head-node), the assignment will have no effect.

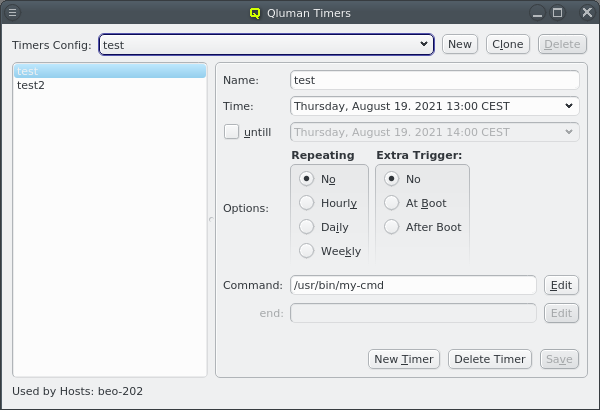

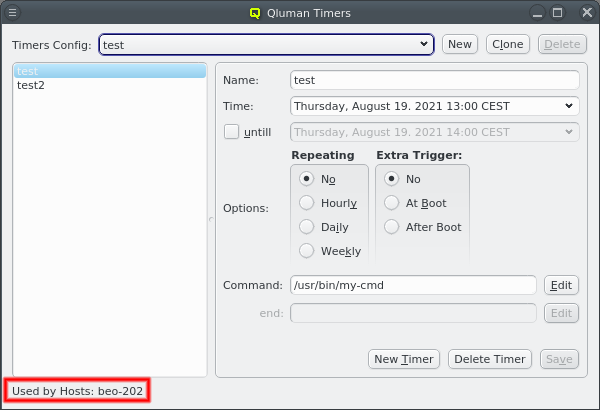

Qluman Timers Config

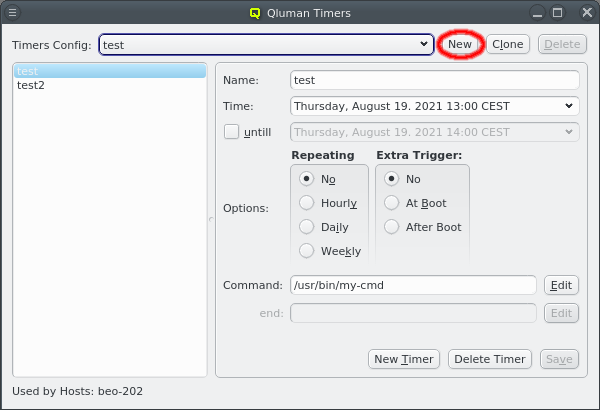

QluMan timer configs are an additive Config Class. This means that more than one config can be assigned to a host through one of the standard methods (template/direct assignment), as long as all of them use the same method. Example: assigning a timer config to a host directly negates any timer config defined in the host template. However, two configs may be directly assigned.
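The additive-but-exclusive rule can be sketched as "take all configs from the most specific assignment method that has any". The precedence order beyond "direct beats host template" is an assumption, as are the level names and the function name.

```python
# Assumed precedence, highest first. The text only states that a direct
# assignment replaces timer configs coming from the host template.
LEVELS = ("direct", "config_set", "host_template", "global_template")


def effective_timer_configs(assignments):
    """assignments: {level: [config names]} -> configs that end up on the host."""
    for level in LEVELS:
        configs = assignments.get(level)
        if configs:
            return list(configs)   # configs on the same level are combined
    return []
```

A host with `{"host_template": ["nightly"], "direct": ["scrub", "backup"]}` thus receives `["scrub", "backup"]` only; the template's `"nightly"` config is ignored.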

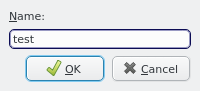

A new config can be created by clicking the New button and entering a name for the new config. An existing config can also be cloned to create a new one with copies of the timers of that config. This way they can be modified without having to recreate them all.

In general, QluMan configs may only be deleted when they are not in use. Therefore, the Delete button will only be available if that is the case. If a config is in use, the bottom left shows the corresponding config set or hosts to which it is assigned.

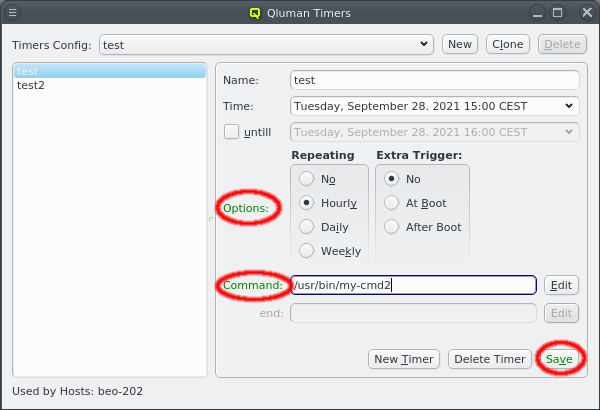

A config can be selected from the drop-down menu. Once selected, the timers in that config are listed at the left. The properties of the selected timer are shown at the right and can be edited. Changes made to the timer will be highlighted in green if valid, yellow if incomplete and red if invalid. To save the changes click the Save button.

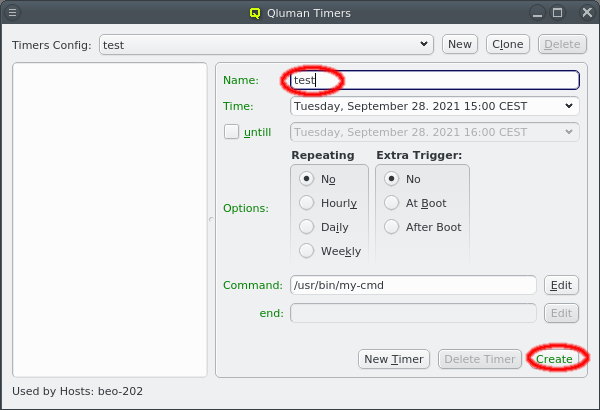

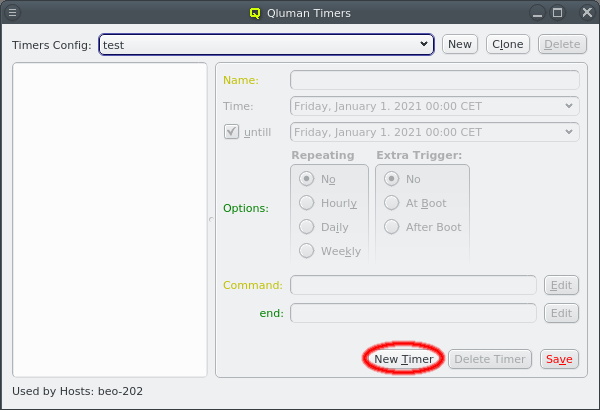

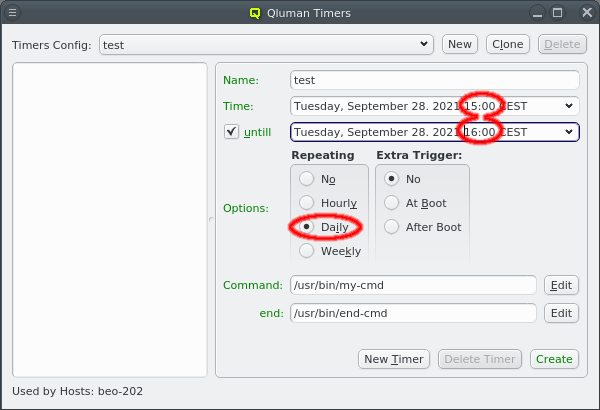

Creating a new timer

Once a config is created, timers can be added to it by clicking the New Timer button at the bottom. This will reset the input form on the right to allow entering the name of a new timer and set its properties. Click the Create button once the desired settings are made.

Editing the time for a timer

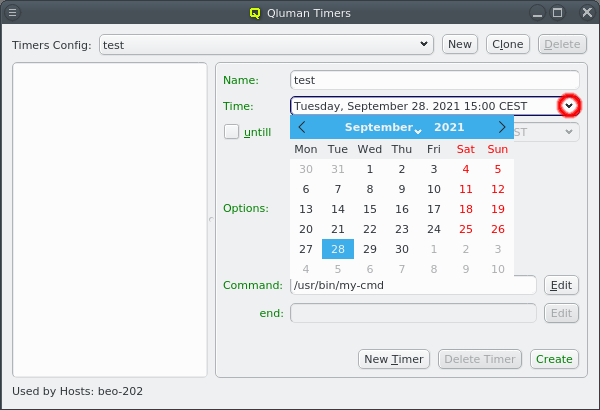

The time at which the command will be executed can be edited directly by typing in a date and time. When moving the cursor to any part of the date or time, the up and down cursor keys can be used to increment or decrement the selected field. The date may also be selected from a calendar by opening the drop-down menu.

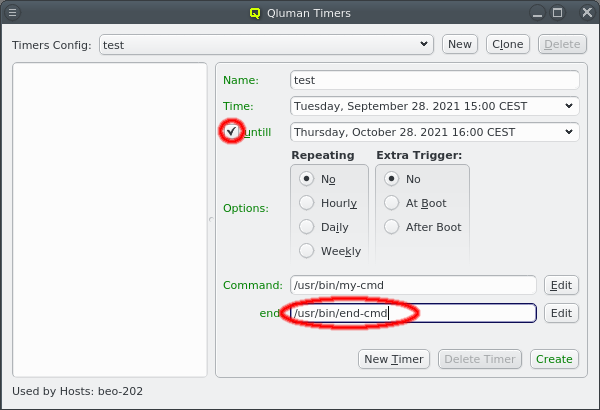

A timer can have a duration by checking the until checkmark and setting a time at which the timer will end. This makes it possible to define a command at the bottom that runs at the end of the duration. Usage example: run a ZFS pool scrub for a limited time every night, then pause the scrub and continue it the next night.

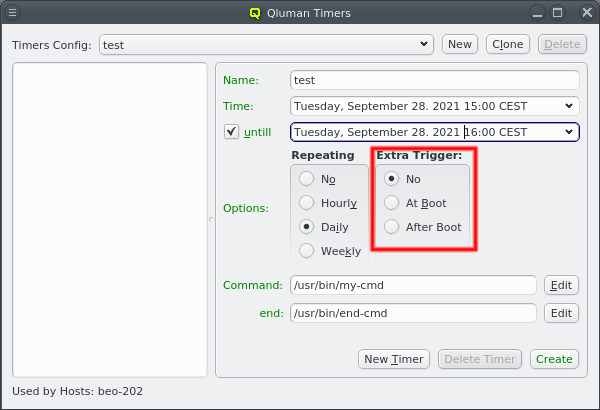

Besides the start and end time, a timer can also be triggered when a node boots. This allows the command to be executed on nodes that were down when a timer was supposed to be started. By selecting At boot, the command will be executed during the boot process before systemd is started. At that time, no services will be running and no filesystems will be mounted besides the system itself. The other option is selecting After Boot to run the command at the end of the boot process, when all services are up and all filesystems are mounted.

Repeating timers

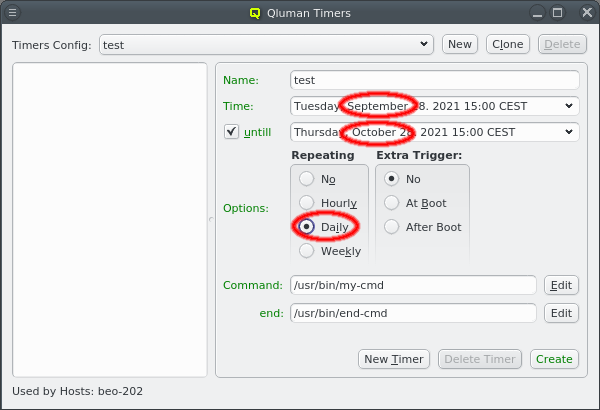

A timer can be set to repeat hourly, daily or weekly. In the simple case of a timer with just a start time, this does what is expected: for example, an hourly repeating timer will execute the given command at the start time, again an hour later, and so on, as long as the node is up.

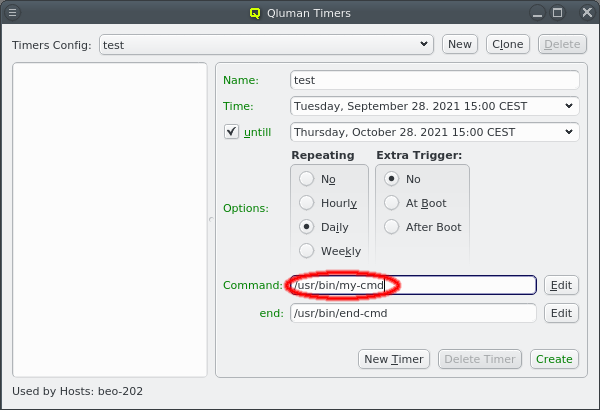

Things get slightly more complex for repeating timers with a duration. There are two cases to distinguish, best explained with examples.

Case 1: The duration is less than the interval at which the timer will repeat, as shown in the screenshot on the left. In this example, the timer is set to run for one hour on September 28th and repeat daily. This means it will run again for one hour on September 29th starting at 15:00h, and the same every following day. The command and end command will be executed each day at 15:00h and 16:00h, respectively.

Case 2: The duration is longer than the repeating interval. In the example with the screenshot on the right, the timer is set to start at 15:00h on September 28th to be run daily until it ends at 15:00h on October 28th. Here the command will be executed every day at 15:00h but the end command will only be executed once on the final day.
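Both cases can be reproduced by enumerating the execution times up to some horizon. The sketch below is hypothetical: the function name is invented, the year in the example is arbitrary, a duration equal to the interval is treated like Case 2, and in Case 2 it assumes that no regular command runs at the exact end time (the text leaves this open).

```python
from datetime import datetime, timedelta

INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def timer_events(start, repeat, horizon, end=None):
    """List (time, kind) pairs up to `horizon`; kind is 'command' or 'end command'."""
    step = INTERVALS[repeat]
    events = []
    if end is not None and end - start >= step:
        # Case 2: the duration spans several intervals, the end command runs once.
        t = start
        while t < end and t <= horizon:       # assumption: no command at the end time
            events.append((t, "command"))
            t += step
        if end <= horizon:
            events.append((end, "end command"))
        return events
    # Plain repeating timer, or Case 1 (duration shorter than the interval).
    t = start
    while t <= horizon:
        events.append((t, "command"))
        if end is not None and t + (end - start) <= horizon:
            events.append((t + (end - start), "end command"))
        t += step
    return events
```

With `start = datetime(2023, 9, 28, 15)`, an end of 16:00 the same day and a daily repeat, every day yields a command at 15:00 and an end command at 16:00 (Case 1). With the end moved to October 28th at 15:00, the command runs daily but the end command appears only once (Case 2).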

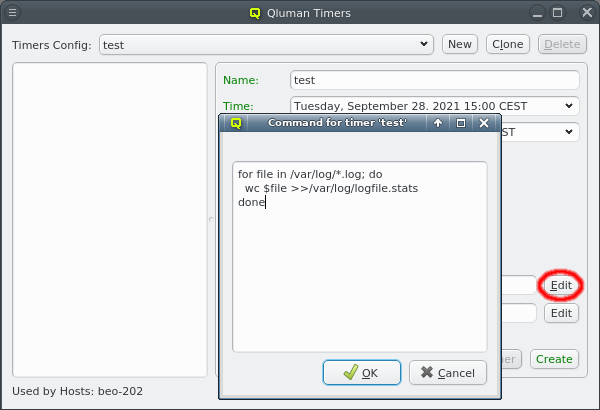

Editing the command for timers

The commands associated with a timer are executed using the system shell. As such, a command can be a simple command or a shell script. Short commands can be entered directly in the input form. For longer commands and complex scripts, click the Edit button at the right to open a larger editor window. The same applies to the end command.

Removing timers

When a timer config is unassigned from a node, one intuitively expects that all timers of this config will be deleted on the node once the Qluman Timers section in the Write Files dialog is written. However, due to the intrinsic nature of the Write Files mechanism, this is not the case. To delete the timers on the node, create an empty timer config (possibly called Cleanup for later reuse) and assign it to the node. After that, an explicit write of the Cleanup timer config in Write Files will remove the timers on the node. You can double-check this by inspecting the local timer directory /var/lib/qluman/monitoring on the node. The assignment of the Cleanup timer config can be removed afterwards.

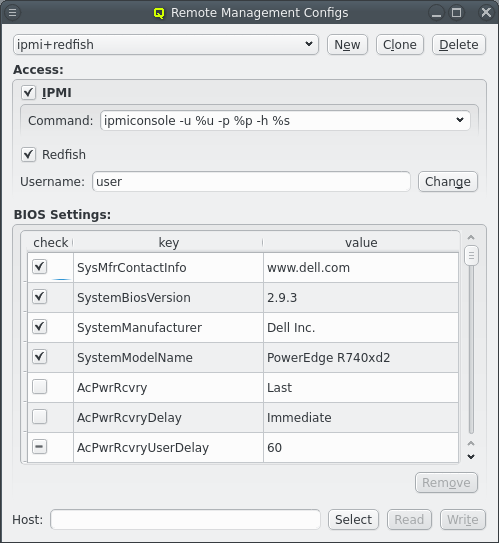

Remote Management Configs

Cluster nodes with a so-called Baseboard Management Controller (BMC) supporting IPMI allow powerful remote hardware management. A QluMan Remote Management config defines the type of a host's BMC and the credentials (user/password) to access it. This permits the use of power management functions in the execution engine for hosts with configured BMCs. If a node's BMC additionally supports Redfish, a Remote Management config may also include the BIOS settings of the host. These can be read from one template host and then be used to validate and/or correct the BIOS settings of other hosts with the same hardware.

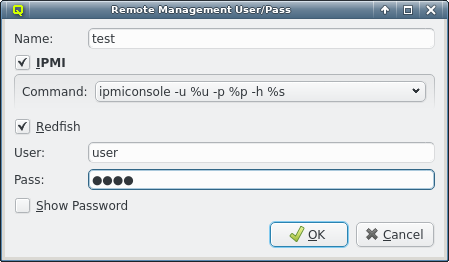

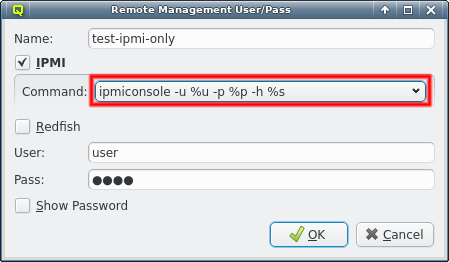

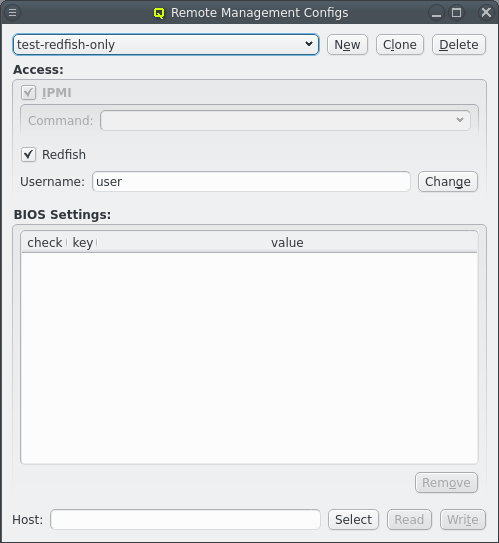

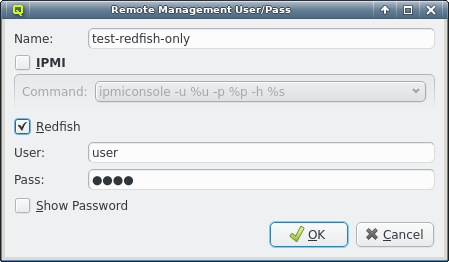

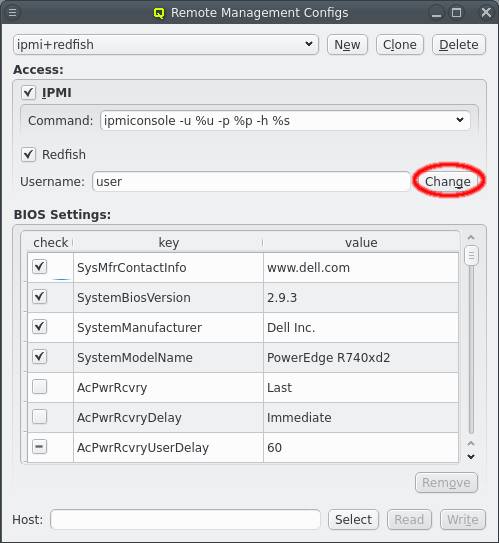

A new Remote Management config can be created by clicking the New button. A name must then be given to it and either IPMI, Redfish or both must be selected as the interface type.

For IPMI, different tools are available to access the interface, depending on the hardware model. Some hardware also requires extra command-line options for these tools to function. The most common cases are predefined and accessible from the drop-down menu. If a different tool or set of options is required, the command can be entered directly. IPMI is used for console access from the shell via the console-login utility and as a legacy fallback for power management of hardware that does not support Redfish.
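As an illustration of how a tool plus hardware-specific options forms a complete IPMI command, the Python sketch below builds an argument list for `ipmitool`, a widely used IPMI client. It is not necessarily one of the tools in QluMan's drop-down menu. The BMC name `node001-bmc` and user `admin` are hypothetical. The flags used are standard ipmitool options: `-I lanplus` (IPMI v2.0 over LAN), `-H`/`-U` (BMC host and user), `-E` (read the password from the `IPMI_PASSWORD` environment variable) and `-C` (cipher suite), which here stands in for the extra options some hardware needs.

```python
def ipmitool_command(bmc_host: str, user: str, *args: str,
                     extra_opts: tuple[str, ...] = ()) -> list[str]:
    """Build an ipmitool argv for a BMC reachable over the network.

    -E keeps the password off the command line: ipmitool reads it from the
    IPMI_PASSWORD environment variable instead. extra_opts is a placeholder
    for hardware-specific options.
    """
    return ["ipmitool", "-I", "lanplus", "-H", bmc_host, "-U", user, "-E",
            *extra_opts, *args]


# Power management: query the current power state.
power = ipmitool_command("node001-bmc", "admin", "chassis", "power", "status")

# Console access via Serial-over-LAN, with an extra hardware-specific option.
console = ipmitool_command("node001-bmc", "admin", "sol", "activate",
                           extra_opts=("-C", "3"))
```

Such a list could then be executed with `subprocess.run(power, env={**os.environ, "IPMI_PASSWORD": ...})`. Keeping the options in a separate list mirrors the idea of choosing a base tool and adding per-hardware options.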

The other access type is Redfish. It can be used without IPMI as a fallback, but this is not advisable in most cases: hosts using such a config would lose remote console access from the shell, because accessing the remote console via Redfish is currently not supported.

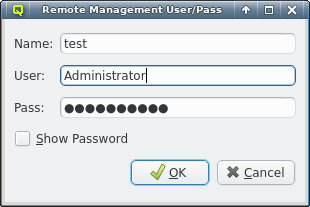

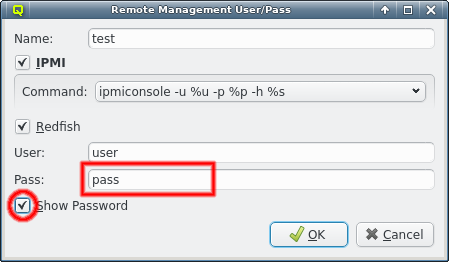

The usual config will have both IPMI and Redfish support. Both access protocols require login credentials consisting of a username and a password. For security reasons, the password is not shown by default but it can be made visible by checking the Show Password checkbox. The username and password can be changed later by clicking the Change button and then updating them in the Remote Management User/Pass dialog.

Some functionality provided by Remote Management configs is still experimental and may or may not work for you. While there is an official specification for Redfish, hardware vendors leave many of their implementation details undocumented. Furthermore, vendors implement many different versions of the specification and often interpret them differently. IPMI console access and power management usually work quite reliably, but updating BIOS settings is not guaranteed to work.

BIOS Settings

Besides configuring the remote console and power management for hosts, a Remote Management config can also control their BIOS settings if the BMC supports Redfish. When a new config with Redfish support is created, its BIOS settings portion is initially empty. The settings a host requires are highly hardware-specific, so QluMan cannot create them on its own. Instead, they can be read from a host that was configured manually using the vendor-provided interface and then be used to configure other nodes with the same hardware in the cluster.

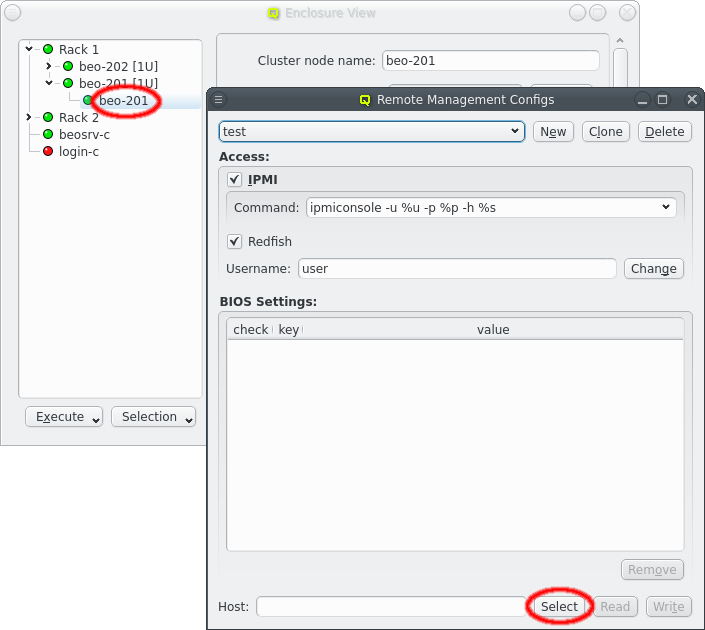

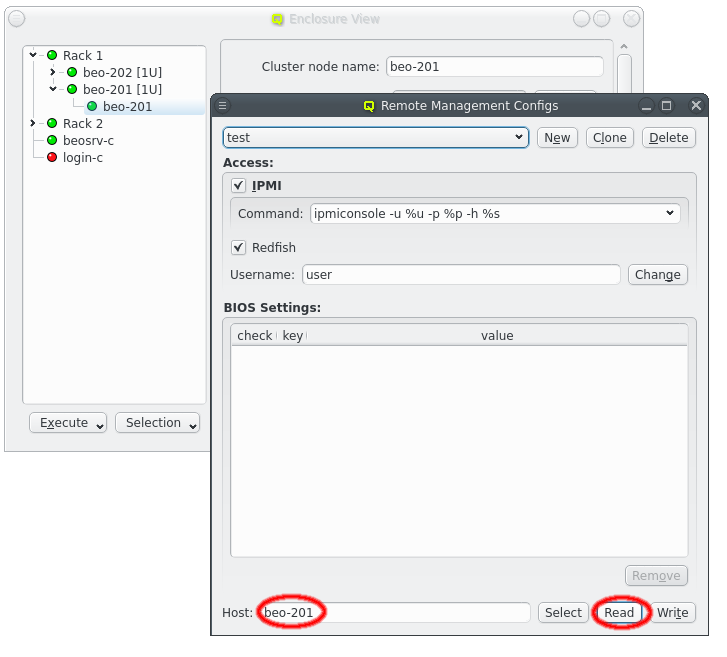

To read the BIOS settings from a host, the host must be specified first. This can be done by selecting it in the Enclosure View and then clicking the Select button in the Remote Management Configs dialog. Alternatively, the name of the host can be entered directly.

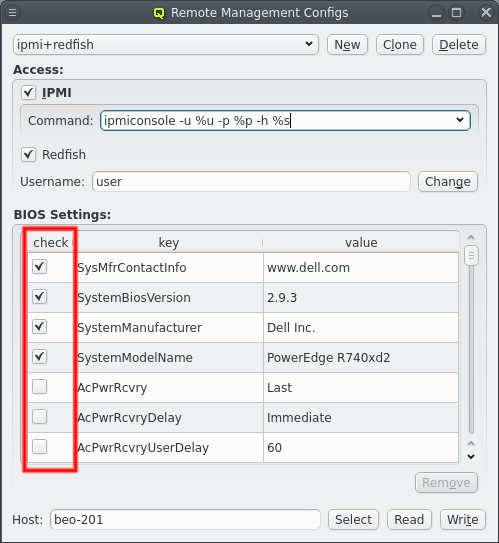

Once a host has been specified, its BIOS settings can be loaded from the BMC by clicking the Read button. On success, this will add the settings of the host to the active Remote Management config and display them in a table as key-value pairs.
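To show where such key-value pairs typically come from, here is a minimal Python sketch of reading BIOS attributes through the standard DMTF Redfish resource tree. It follows the Systems collection to a ComputerSystem, then its `Bios` link, and returns the `Attributes` object. This is an assumption about the general mechanism, not QluMan's implementation. Picking the first system is a further assumption, and the attribute names in any real result are vendor-specific. The HTTP getter is passed in as a function so the traversal can be tried without a BMC.

```python
import base64
import json
import urllib.request
from typing import Any, Callable


def redfish_get(base_url: str, path: str, user: str, password: str,
                context=None) -> Any:
    """GET a Redfish resource with HTTP basic auth and decode the JSON body.

    BMCs often use self-signed certificates; pass a suitable ssl.SSLContext
    as `context` in that case.
    """
    req = urllib.request.Request(base_url.rstrip("/") + path)
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", "Basic " + token)
    req.add_header("Accept", "application/json")
    with urllib.request.urlopen(req, context=context) as resp:
        return json.load(resp)


def read_bios_attributes(get: Callable[[str], Any]) -> dict:
    """Follow the Redfish links from the Systems collection to BIOS settings."""
    systems = get("/redfish/v1/Systems")
    # Assumption: the first (often only) ComputerSystem is the host itself.
    system = get(systems["Members"][0]["@odata.id"])
    bios = get(system["Bios"]["@odata.id"])
    return dict(bios["Attributes"])
```

Against a real BMC, the getter could be built with `functools.partial(redfish_get, "https://node001-bmc", user="admin", password="secret")`, where the host name and credentials are hypothetical.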

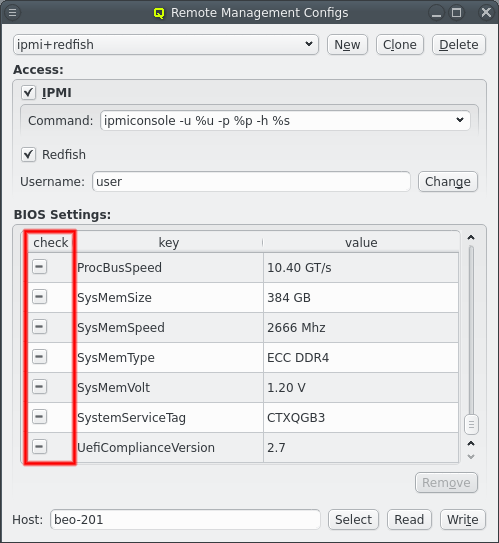

BIOS settings are classified into three categories reflected in the check column. Some settings are specific to the vendor and hardware model. They can be used to identify the hardware type and make sure the configured BIOS settings are not written to a host with different hardware. Such entries need to be marked with a checkmark in the check column.

The second class of settings is purely informational and describes the actual hardware detected in the system, e.g. the amount of memory. These settings cannot be written, and attempting to change them will result in errors. They may be marked with a dash in the check column, indicating that QluMan should check that the value matches what the host reports. Often, though, these settings are meaningless for the user and just clutter the config. Unwanted entries can therefore be selected and then removed from the config by clicking the Remove button.
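The role of the check column can be sketched in Python. This is a model under stated assumptions, not QluMan's logic. It assumes that checkmarked (hardware-identifying) and dash-marked (informational) entries are only compared, never written, and that unmarked entries are the ordinary settings that may be written. The keys such as `SystemModel` are hypothetical, since real attribute names differ between vendors.

```python
from enum import Enum


class Check(Enum):
    IDENTITY = "checkmark"  # vendor/model entries identifying the hardware type
    INFO = "dash"           # read-only information that should match the host
    NONE = ""               # ordinary settings that may be written (assumed)


def plan_bios_write(config: dict[str, tuple[str, Check]],
                    actual: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Return (settings to write, informational keys that do not match).

    Raises ValueError if any checkmarked entry differs, i.e. the host does
    not have the hardware the config was read from.
    """
    for key, (value, mark) in config.items():
        if mark is Check.IDENTITY and actual.get(key) != value:
            raise ValueError(f"hardware mismatch for {key}: expected "
                             f"{value!r}, host reports {actual.get(key)!r}")
    to_write = {k: v for k, (v, m) in config.items()
                if m is Check.NONE and actual.get(k) != v}
    info_mismatch = [k for k, (v, m) in config.items()
                     if m is Check.INFO and actual.get(k) != v]
    return to_write, info_mismatch


# Hypothetical config read from a template host.
config = {
    "SystemModel": ("X1", Check.IDENTITY),
    "TotalMemory": ("256GB", Check.INFO),
    "HyperThreading": ("Disabled", Check.NONE),
    "BootMode": ("Uefi", Check.NONE),
}
```

The identity check runs first, so a host with different hardware is rejected before any setting is considered for writing.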

Once the BIOS settings are as desired, they can be tested by writing them to a host using the Write button. As a first test, the settings should be written back to the host they were read from. This checks whether writing BIOS settings is permitted and works at all. As a second test, the settings should be written to a different host, preferably one with different BIOS settings. This tests whether the BIOS settings can actually be modified.

Some implementations may ignore settings that are unchanged. In that case, writing the original settings back to the host is not a meaningful test.

Updating BIOS Settings

When a Remote Management config with Redfish support and BIOS settings is assigned to a host, QluMan can probe the host during boot and validate or even update its BIOS settings. By default, it reads the settings at boot time, compares them to the configured settings and then continues booting. Even a failure during the read-out is ignored, so this default behavior should be harmless in all cases.

The action taken during boot can be configured by assigning the generic property BIOS Settings to the host via any of the usual assignment methods. One of three actions can then be chosen:

- Ignore simply does nothing. It has the same effect as if no Remote Management config with BIOS settings were assigned to the host. Select this option to disable the BIOS settings feature entirely.

- Validate is the default action. It tries to read the BIOS settings during boot and compares them to the configured settings. Differences between the configured and actual BIOS settings are logged in /var/log/qluman/qlumand.log.

- Update reads and compares the BIOS settings during boot, as Validate does. If any differences are detected, QluMan attempts to update the BIOS settings of the node to match the configured ones. After the update, the host is rebooted so that it runs with the corrected settings.

Writing BIOS settings to hosts is still experimental and sometimes fails. Since the host is rebooted after the update attempt, hosts may get stuck in an endless reboot loop. On some hosts, updating the BIOS via Redfish may have to be explicitly enabled, or it may work slightly differently. Furthermore, not all BIOS settings can be overwritten, so some may have to be removed from the config. It is advisable to test this on an individual host before enabling it on a larger number of hosts.
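The decision made at boot for the three actions can be summarized in a Python sketch. `bios_boot_step` and the injected `read_bios`, `write_bios` and `reboot` callables are hypothetical stand-ins for the BMC interaction and are not QluMan functions. The text only states that a failed read-out is ignored for the default action. Ignoring it under Update as well is an assumption.

```python
import logging
from enum import Enum
from typing import Callable

log = logging.getLogger("bios-settings")


class Action(Enum):
    IGNORE = "Ignore"
    VALIDATE = "Validate"
    UPDATE = "Update"


def bios_boot_step(action: Action, configured: dict[str, str],
                   read_bios: Callable[[], dict[str, str]],
                   write_bios: Callable[[dict[str, str]], None],
                   reboot: Callable[[], None]) -> bool:
    """Return True if booting simply continues, False if a reboot was issued."""
    if action is Action.IGNORE:
        return True
    try:
        actual = read_bios()
    except Exception as exc:
        # A failed read-out is ignored (assumed to hold for Update as well).
        log.warning("reading BIOS settings failed: %s", exc)
        return True
    diff = {k: v for k, v in configured.items() if actual.get(k) != v}
    for key, value in diff.items():
        log.info("BIOS setting %s: configured %r, host reports %r",
                 key, value, actual.get(key))
    if action is Action.UPDATE and diff:
        write_bios(diff)  # only the differing settings are written
        reboot()          # boot again so the corrected settings take effect
        return False
    return True
```

Validate only logs the differences and continues. Update writes and reboots only when something differs, so a host whose settings already match boots normally.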