QluMan Guide

1. Introduction

1.1. Qlustar Clusters

A Qlustar cluster is designed to boot and manage compute and/or storage nodes (hosts) over the network and make them run a minimal OS (Operating System) image in RAM. Local disks (if present) are only used to preserve log files across boots and for temporary storage (e.g. for compute jobs). Hence all Qlustar cluster nodes apart from head-nodes are always state-less.

One or more head-nodes deliver the OS boot images to the nodes. Additionally, a small NFS share containing part of the configuration space for the nodes is exported from one of the head-nodes. Optionally, the RAM-based root FS (file-system) can be supplemented by a global UnionFS chroot to support software not already contained in the boot images themselves. The head-node(s) of the cluster typically provides TFTP/PXE boot services, DHCP service, NIS service and/or slurm resource management etc. to the cluster.

The management of these and all cluster-related components of a Qlustar installation in general

can easily be accomplished through a single administration interface: QluMan, the Qlustar

Management interface. The QluMan GUI is multi-user as well as multi-cluster capable:

Different users are allowed to work simultaneously with the GUI. Changes made by one user are

updated and visible in real-time in the windows opened by all the other users. On the other

hand, it is possible to manage a virtually unlimited number of clusters within a single

instance of the QluMan GUI at the same time. Each cluster is shown in a tab or in a separate

main window.

1.2. Overview of basic Setup Principles

A central part of Qlustar are its pre-configured modular OS images. Different nodes may have

different hardware or need to provide specific and varying functionality/services. Therefore,

to optimize the use of hardware resources and increase stability/security, Qlustar does not

come with just one boot image that covers every use-case. Instead, a number of image modules

with different software components are provided from which individual custom OS images can be

created as needed. A Qlustar OS image just contains what is actually required to accomplish the

tasks of a node, nothing more. See below for more details about configuring

OS images.

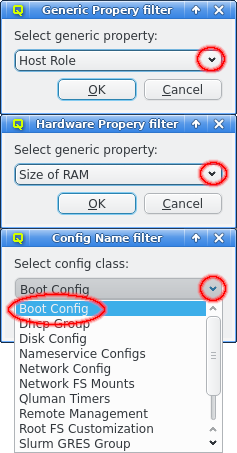

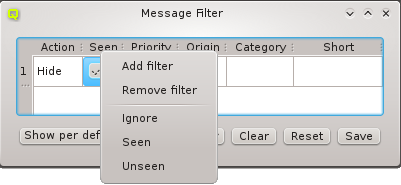

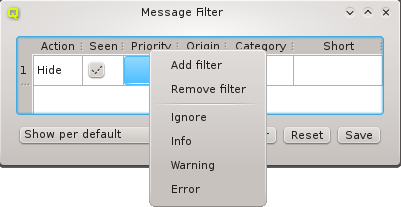

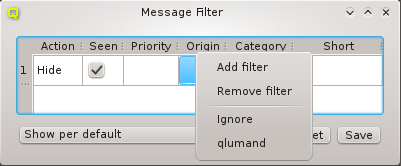

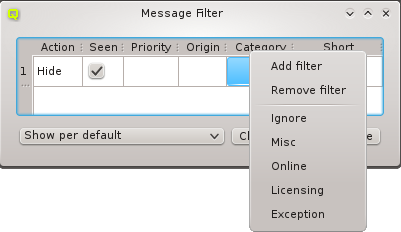

But providing different OS images is still not enough for a flexible yet easily manageable cluster: A node booting a generated image also receives extra configuration options via DHCP, via qlumand and via NFS at boot time, thus allowing to fine-tune the OS configuration at run-time. E.g. it is possible to determine how the local disks are to be used (if any are present), whether additional services like OpenSM or samba should be enabled/disabled and a lot more. Four different configuration/property categories exist in QluMan:

-

Generic-Properties are simple on/off options or key+value pairs applicable to groups of nodes, e.g. to flag the reformatting of the local disks at the next boot, add SMTP mail functionality, etc.

-

Config Classes handle more complex configurations like boot/disk configs, DHCP, etc.

-

Hardware-Properties are not used to configure the nodes themselves but describe their hardware configuration and are of importance e.g. for the slurm workload manager and/or inventory management.

Of course, one can configure every host in a cluster individually. But in most clusters, there are large groups of hosts that need to be configured identically. However, even if there are several groups, they might share only some properties/configurations, but not all of them. To provide a simple handling for such scenarios, while at the same time maintaining maximum flexibility, QluMan allows to combine generic properties, hardware properties and config classes each into sets.

For settings that apply to all hosts of a cluster, there are global sets: A global Generic

Property set, a global Hardware Property set and a global Config set.

Additionally, it is possible to combine exactly one Generic Property set, one Hardware Property

set and one Config set into a Host Template. Assigning a Host Template to a group of hosts

allows to specify all of their specific properties and configuration settings with a single

mouse-click.

For situations where flexibility is required (e.g. one host in a group has a slightly different hardware configuration than all the others), it is also possible to override or extend the settings defined in the chosen Host Template, by assigning either one of the sets and/or individual properties/config classes directly to a host. In case of conflicts, values from individual properties/config classes have highest priority, followed by set values, then the Host Template values and finally the global values. The Enclosure View presents a nice graphical representation of this hierarchy of settings for each host. For more details on this, see Configuring Hosts.

2. Cluster Connections

2.1. Connecting to a Cluster

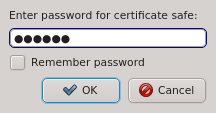

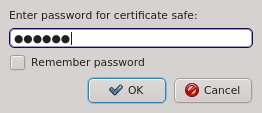

When starting qluman-qt, it requests the password for your certificate safe. This safe

holds the login information for your clusters together with the private keys for the

corresponding QluMan user account. The password for the certificate safe is required on every

start and whenever changes to the safe need to be written. You can have the client remember the

password for the duration it is running by checking the Remember password check-box. Without

enabling this, you will have to input the password again, whenever changes to the safe need to

be written. If you are starting qluman-qt for the first time and therefore have no

certificate safe yet, this dialog is skipped and an empty Connect Cluster dialog opens

directly. See Adding a new Cluster below about how to add a new cluster.

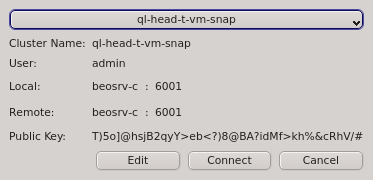

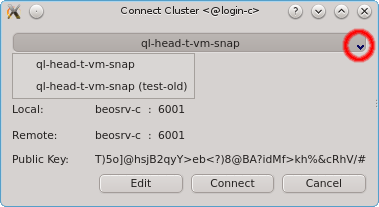

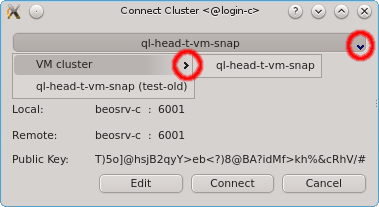

Having entered the correct password for the certificate safe the Connect Cluster dialog

opens. The last cluster used will be pre-selected but a different cluster can be selected from

the drop-down menu. Click the Connect button to connect to the selected

cluster. If this is the first time you connect to this cluster, the client generates a random

public/private key pair. These keys will eventually be used for permanent authentification of

the chosen user with this cluster. Following this, a connection to the server is made with an

attempt to authenticate the client using the one-time token. On success, the server stores the

public key of the client for future logins and the client stores both the private and public

keys in the certificate safe. This finalizes the initial handshake.

|

The GUI client asks for the password of the certificate safe to store the generated public/private key pair. It will only do so, when you initially connect with a one-time token. For future connections, it will use the stored key pair to connect and authenticate. The safe contents will then not be changed again. |

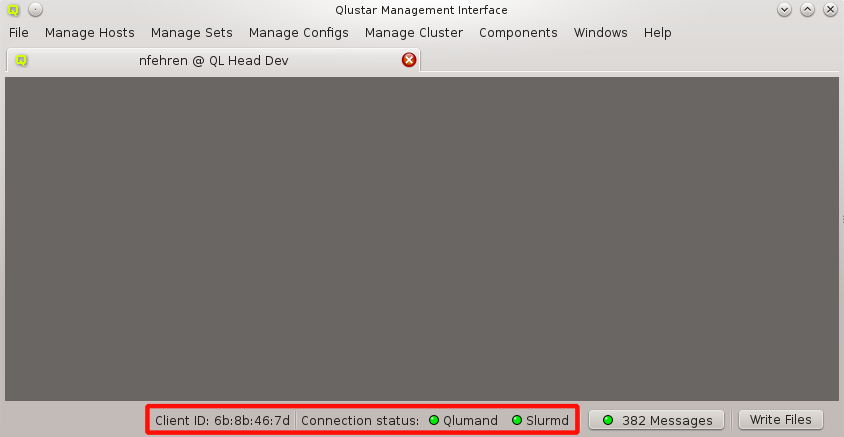

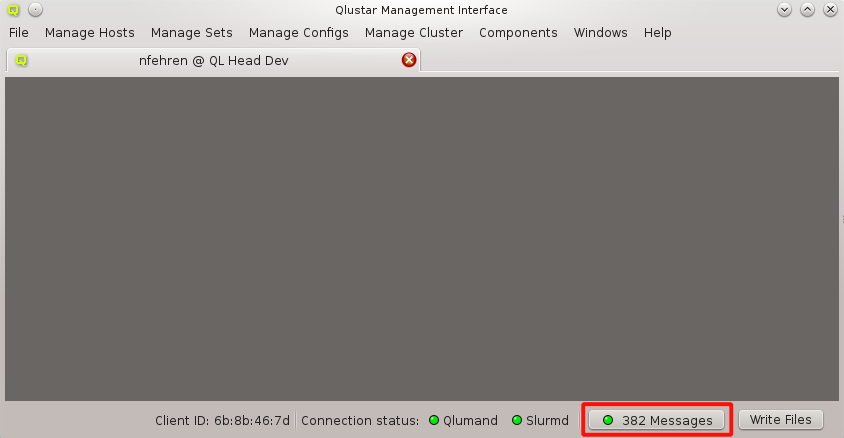

2.1.1. Connection Status

The status of the network connection between a GUI session and the relevant QluMan server

components (qlumand, qluman-slurmd, etc.) is displayed by LEDs in the status bar of the main

QluMan window. The QluNet internal network client ID is also listed there.

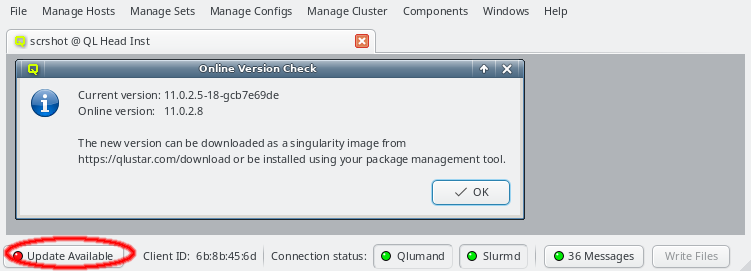

2.1.2. Online Version Check

When starting up, the QluMan GUI will check whether there is a newer QluMan version available for download. If a newer version is found, or the major version currently in use is no longer supported, a button Update Available will appear in the lower left corner of the main window. Clicking on it will show more details about the version currently in use and the one available for download.

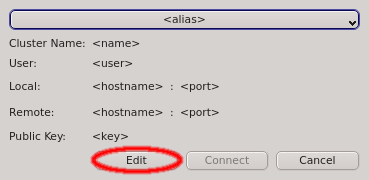

2.2. Managing Clusters

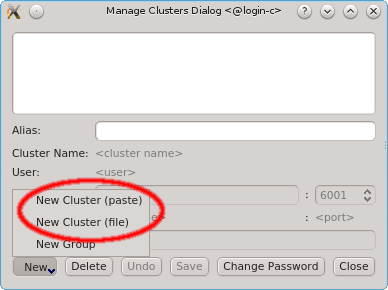

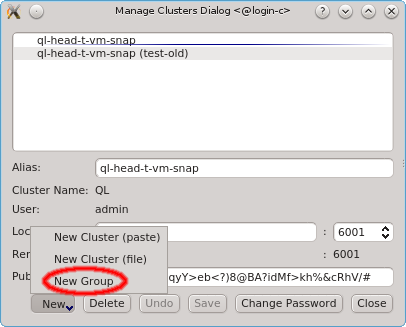

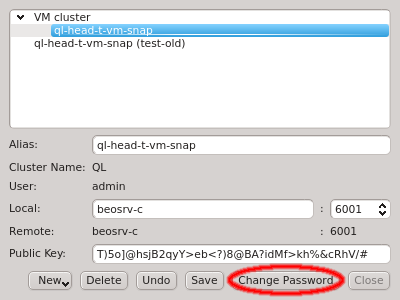

The Manage Clusters dialog manages all your accounts on different clusters or as different

users on the same cluster. It allows adding new cluster connections, editing existing and

removing obsolete ones as well as changing the password for the certificate safe. It can be

opened by clicking Edit in the Connect Cluster dialog.

2.2.1. Adding a new Cluster

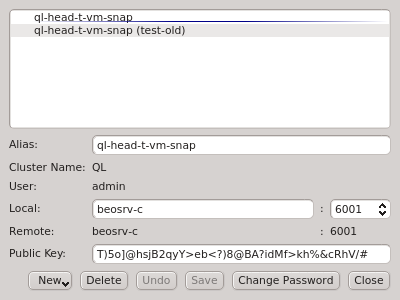

To add a new cluster click the New button and select New Cluster (paste) or New Cluster (file) from the menu depending on whether you want to paste the one-time token or load it from a file. If you don’t have a one-time token for the cluster see Generating the Auth Token.

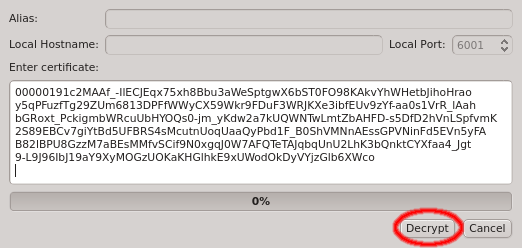

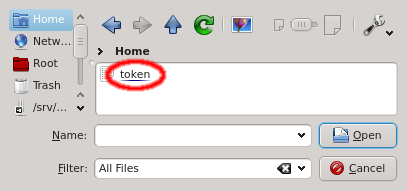

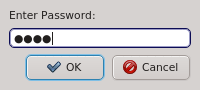

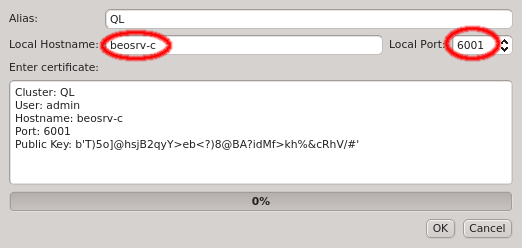

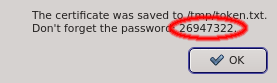

Paste the one-time token data into the dialog and click Decrypt or select the file containing the token. When asked for the password, enter the pin

that was used when creating the token (in case you didn’t generate the token yourself, you

should have been told the pin by your main cluster administrator). The dialog should then show

the cluster/head-node infos that where packed into the one-time token. If you started

qluman-qt on your workstation, then you might have to change the Local Hostname to use the

external hostname of the head-node. Similarly, if you changed the port for qlumand or if you’re

connecting via port forwarding, you have to adjust that too. The Alias is the name this

cluster will be shown as, when using the drop-down menu in the Connect Cluster dialog. Click

Ok to add the cluster connection.

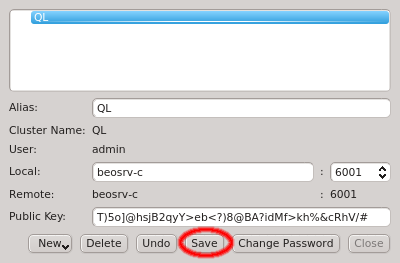

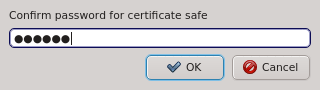

After adding the new cluster select Save to save the changes. If this is your first cluster then it will create the certificate safe and ask you to enter and confirm a password. Otherwise it will ask for the existing password unless the Remember password check-box was enabled.

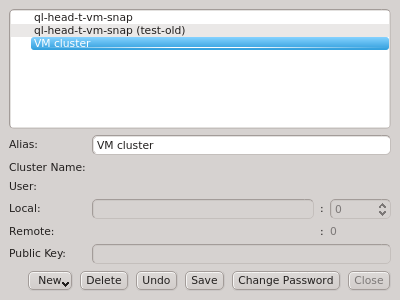

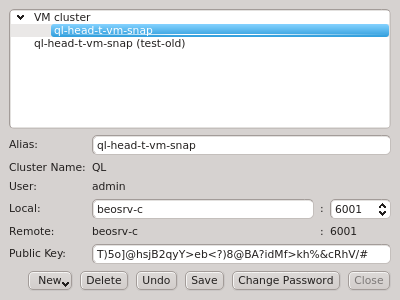

2.2.2. Sorting multiple clusters

If multiple cluster connections are registered, the corresponding entries can be reordered

using drag&drop. This allows to move them to the desired location in the list. Clusters can

also be grouped in sub-menus by first creating a new group (sub_menu) and then dragging cluster

entries into it. The tree structure of the Manage Clusters dialog will be reflected in the

drop-down menu

of the Connect Cluster dialog. This allows a nicely structured layout when dealing with a

larger number of clusters as e.g. in the case of service providers. Standard cluster admins

will most likely not need this feature.

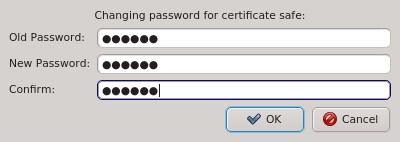

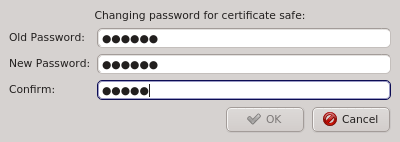

2.2.3. Changing the certificate safe password

The Manage Clusters dialog allows changing the password for the certificate safe. This

requires entering the old password for the safe as well as the new password and a confirmation

of the new password. The Ok button will only be selectable if the new password

and confirmation matches.

3. Cluster Network Setup

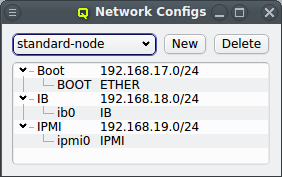

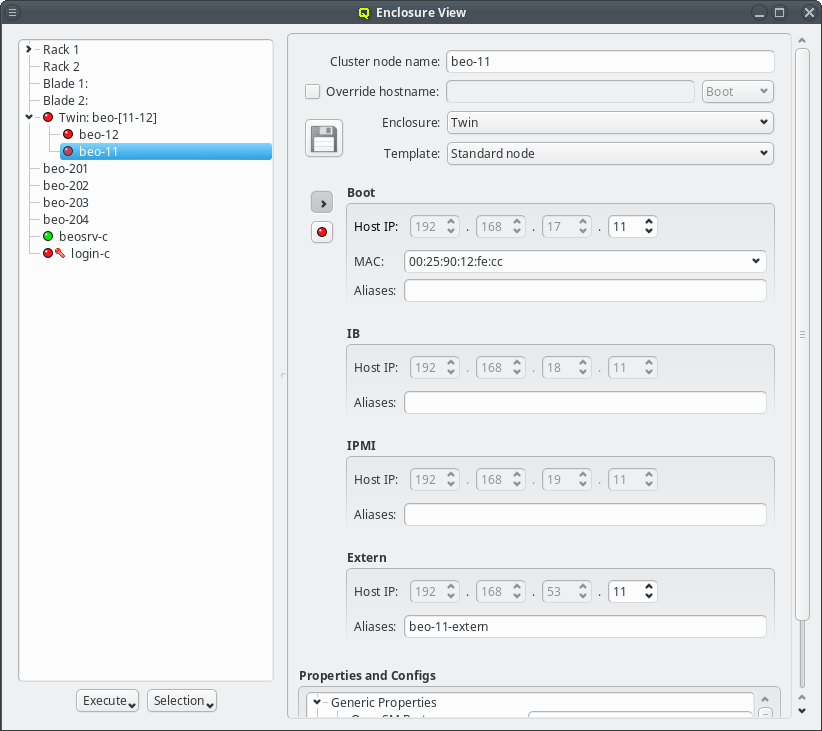

An arbitrary number of networks of different types and with different properties can be configured for a cluster. There is a network config class that allows networks to be grouped in any number of network configs. The idea is, that any such network config reflects the network adapter configuration of a single or a group of nodes. Finally such a network config can be assigned to Config Sets or directly to cluster nodes. According to the chosen network config for a node, individual node network properties like IP or MAC addresses can then be assigned to the node in the Enclosure View.

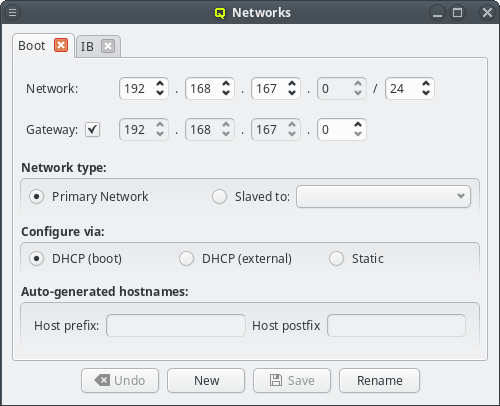

3.1. Network Definitions

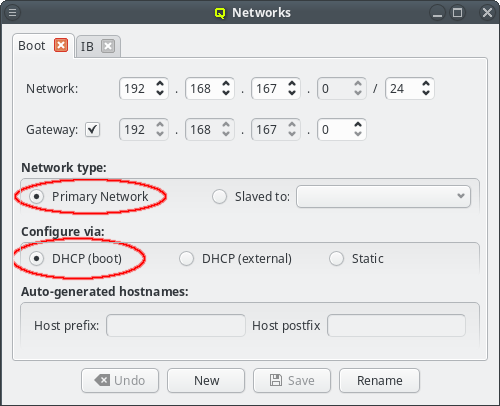

During the installation of Qlustar, the basic configuration parameters for the cluster network had to be entered. Often, additional networks need to be defined later. This can be accomplished within the networks dialog selectable via from the main windows menu.

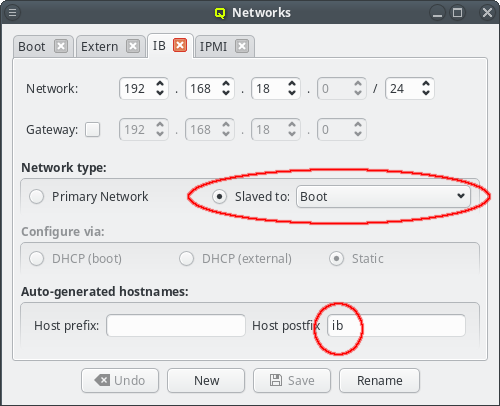

The Networks window displays all defined networks each in a separate tab. Each tab shows all the configurable parameter that define the corresponding network. The base settings of a network are its IP address and netmask as well as an optional gateway address. QluMan distinguishes two types of networks: Primary Networks and Slave Networks.

A primary network is one that determines the IP address of a host using one of the options listed in the Configure via section of the dialog. The available options are:

- DHCP (boot)

-

Configuration via the DHCP server running on the head-node. This allows the host to boot over the network using PXE. Every cluster must have one such network and it is created automatically during the Qlustar installation process according to the data provided from the installer.

- DHCP (external)

-

Configuration by an external DHCP server not under the control of QluMan. This option only makes sense for nodes that have an adapter connected to a cluster-external network, like e.g. a cluster FrontEnd node.

- Static

-

Static configuration for each host individually. Select Static for this method. The last two options are usually used for the external networks of the head-node and login nodes.

A slave network on the other hand, is tied to a primary network. The idea is, that the IP of a host in the slave network is determined by mapping the host part of the IP in the primary network into the network range of the slave network. In most cases, this means that the host IP of the primary and slave networks end in the same number. This is a convenient feature, mostly used for Infiniband and IPMI networks. It saves you from registering/managing additional MAC/IP addresses and makes IPs easily recognizable as belonging to the same host.

|

This mechanism requires the netmask of the slave network to be at least as large as the primary network it is slaved to. Hence, the GUI prevents smaller values to be selected. |

Usually, the head-node(s) have a NIC in all QluMan-defined networks. But this is not always the case. Qluman also supports nodes that have networks not connected to the head-node(s). They can also boot the Qlustar node OS from that network, provided they have a gateway that can reach the head-node(s).

For this case the network should be configured as Static in the Configure via section and the Routed via in the Network type section must be set to the network via which the gateway will connect to the head-node(s).

|

A correct Routed via setting is critical for the ql-mcastd server. With a wrong value it will not listen for requests on that network. |

Configuring the Boot network

Qlustar uses network-booting via PXE to start nearly all hosts in the cluster. The only

system(s) that can not be network-booted is the head-node(s) itself. Every cluster should

therefore have a network that is a Primary Network and configured via

DHCP (boot). Such a network is created by the Qlustar installer and called

Boot. Changes to this network are rarely necessary but if changes were made, all nodes must

be rebooted to get the updated configuration.

|

Changing the network address or mask may also require additional manual changes in the config of hosts booting from disk, specifically the head-node itself. |

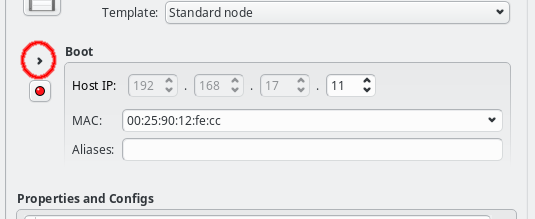

Individual IP addresses and MACs of a host can be configured in the Enclosure View, once the host has been assigned to a network config. As with other settings/configs, this assignment can also be done in the Enclosure View by assigning a network config in one of the usual ways (direct assignment, host or global template).

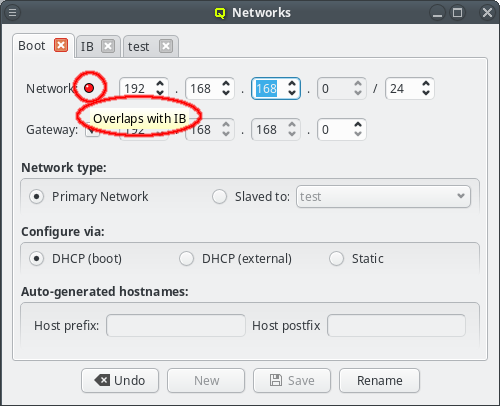

The GUI has some safeguards to prevent invalid network configurations. For example different networks must not overlap. Attempting to change the network address so that it overlaps another network won’t be accepted: The Save button at the bottom of the window will be disabled and a red LED icon will appear with a tool-tip explaining why the selected configuration is not allowed.

When changing the network IP address or netmask, the IP addresses of all hosts configured to be in that network will be remapped to reflect the changed values. This requires that a new netmask is large enough, so that the resulting network range can include all existing hosts in the cluster. Therefore, the GUI won’t let you pick anything too small. If there are unused address ranges in the existing network and you need a smaller netmask than currently selectable, you will first have to change some host addresses so that all of them combined occupy a small enough subset of the current network.

Changing the network address IP will automatically remap the cluster internal Head IP address

as well, while changing the netmask will not. Note, that the Qlustar convention, to use the

second last IP of the cluster network as the Head IP, is obviously not a requirement. Hence,

this is not done automatically when changing the netmask. Furthermore, changing the Head IP

involves some additional steps without which the nodes in the cluster won’t function or even

boot. The reason is that the Head IP also appears in the Global DHCP Template and may have

been added to other templates too. These templates are simple, freely editable text blobs. A

change of the network definitions will not change them, so you need to check and adjust each of

them manually.

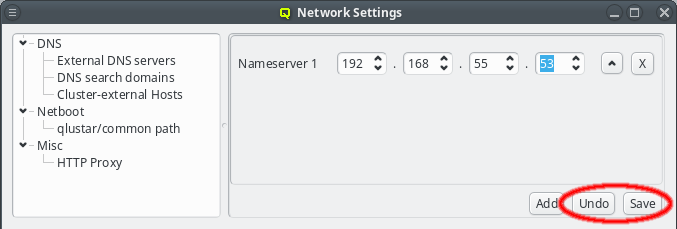

Changes to the networks definition have wide-ranging effects. To prevent accidental changes or booting hosts while in between configurations any changes to the network are not saved instantly. Instead the Save button at the bottom of the window needs to be clicked to confirm the changes. Alternatively, the Undo button can be used to revert any changes to the last saved values. Any changes to a network must be saved or reverted before switching tabs or closing the window.

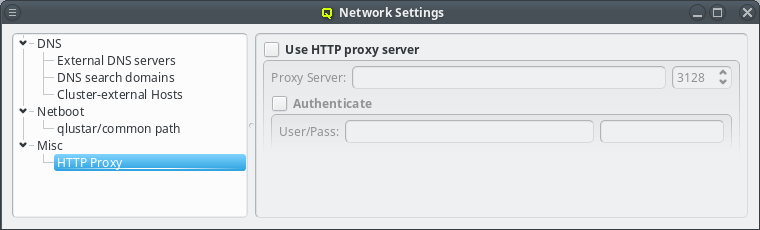

If the head-node does not have direct access to the Internet, a HTTP proxy must be configured. QluMan uses this proxy to download packages from the Qlustar repository, when creating a new chroot. The proxy can be configured under (Other Network Settings).

Configuring slave networks

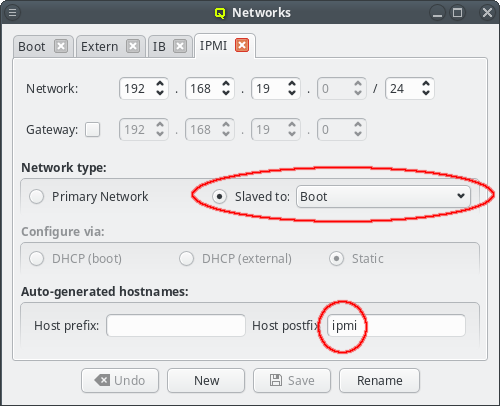

For convenience, in a cluster with Infiniband and/or IPMI, the corresponding networks are usually setup to mirror the Boot network: If the Boot IP of a host ends in .10 then the Infiniband and IPMI IPs will usually also end in .10. Within QluMan, this relationship can be set up by configuring these special networks as a slave to the Boot network: In the tab of the network under consideration, simply select the network to be slaved to from the drop-down menu.

In a slave network, the IP of a host is always derived from its IP in the network it is slaved to. During the initial part of a node’s boot process, the qluman-execd writes the resulting static slave network information into the relevant configuration file, so that the adapter will later be configured via standard OS methods. Hence, the IPs in the slave network don’t need to be set for each host individually.

The hostnames corresponding to the IPs in the slave network are also under control of this

mechanism. The name of a host in a slave network will be auto generated using the QluMan node

name of the host as the stem and adding a prefix and/or postfix separated by a dash to it. The

default Infiniband network setup for example has a postfix of ib, meaning a host named

beo-01 will be reachable on the Infiniband network as beo-01-ib. Such pre-/post-fixes may be

set/changed in the configuration dialog of the network.

|

Hostnames generated by QluMan are added to the DHCP (boot network only), NIS and ssh configs allowing them to be used within the cluster where necessary. |

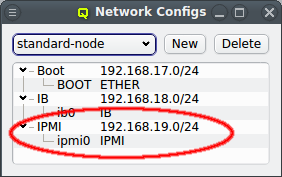

3.2. Network Configs

As part of the above mentioned reimplementation of QluMan network configuration management, a

new config class Network Config has been added. It allows combining multiple network

definitions (as described above) and link each of them to a

physical adapter. Like any other config class, such a Network Config may then be assigned to

the Global Template, Host Templates, Config Sets or individually to hosts. Every host must have

exactly one assigned Network Config which must match its hardware (adapter names).

|

Hosts with different types of network adapters may need different Network Configs even if they are connected to the same networks, because the hardware specific network adapter name of each NIC can differ between these hosts. |

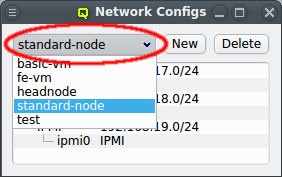

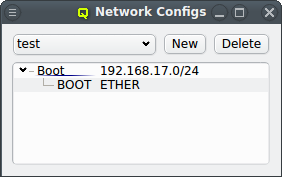

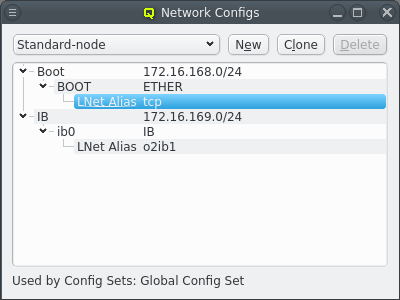

There can be any number of Network Configs, but only one is shown at a time in the corresponding dialog. To view or edit a different Network Config, select the desired entry from the drop-down menu.

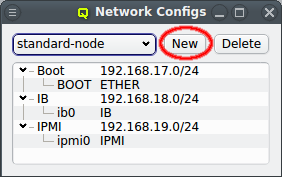

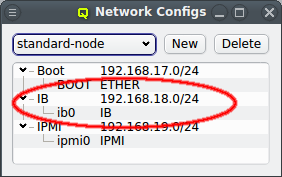

The configuration of the selected Network Config is shown as a tree. The top-level items of the tree list the defined network definitions: Both the name and the network/mask of the corresponding network are shown for each entry. Below each network definition, the NIC information (device name and network type) for that network is displayed. QluMan currently supports three types of NICs: ETHER for Ethernet, IB for Infiniband/OmniPath and IPMI.

3.3. Managing Network Configs

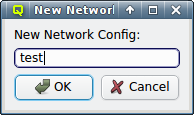

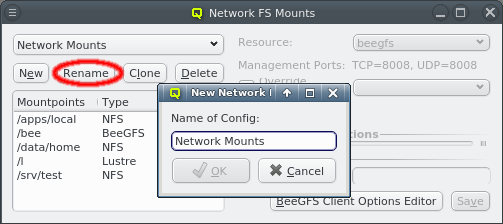

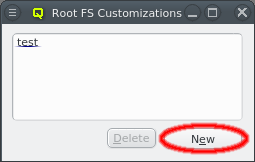

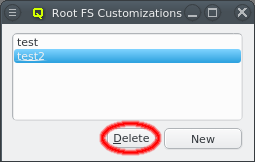

A new Network Config can be created by clicking the New button. This opens a dialog asking for the name of the new Config. Entering an unused name and pressing the Ok button will create it and select the new entry in the Networks Configs dialog. Initially this will be empty.

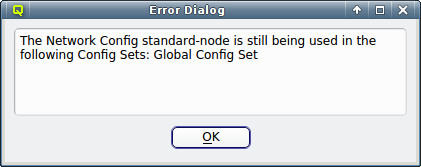

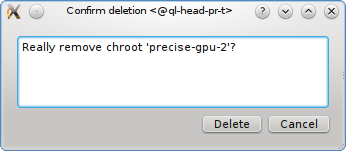

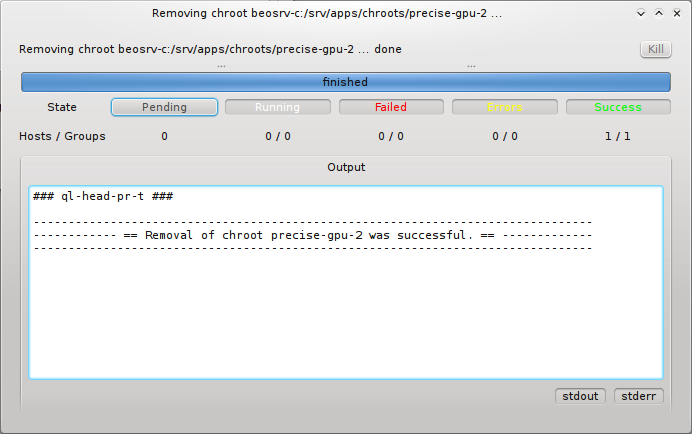

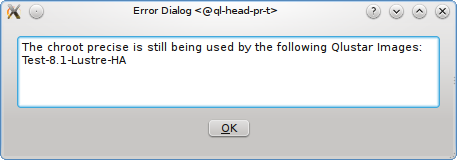

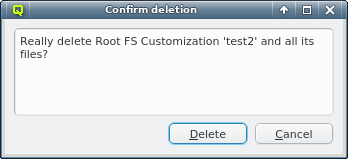

A Network Config may be deleted by clicking the Delete button. It can only be deleted, if it is no longer directly assigned to a host or included in a Config Set. Otherwise an error dialog will pop up describing the locations where it is still in use.

3.3.1. Adding a Network

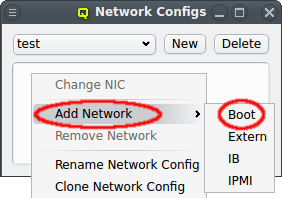

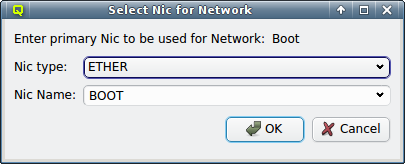

A network can be added to the Network Config by selecting a network definition from the entries below Add Network in the context menu. This opens a dialog where the type and name of the NIC for this network may be selected.

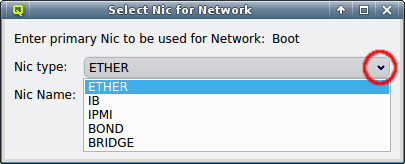

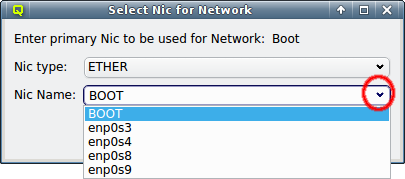

The NIC type should be selected first using the corresponding drop-down menu. A selection of valid types is available to choose from. The drop-down menu for the NIC name lists all the previously used names of the same type for easy selection. A new name can also be entered directly, in case the NIC has a name not previously encountered.

|

The name for Ethernet adapters is generated by systemd according to the way the Ethernet chip is wired into or where the network card is inserted on the mainboard (in case of add-on cards). This mechanism generates names that are predictable even when another NIC fails or a new one is added later on. It guarantees that the same name is used for a NIC on every boot. |

|

The special name |

The default name for Infiniband adapters is the kernel name of the IP-over-IB device, which

usually has the form ib<N> starting with ib0. Even simpler, the name for IPMI adapters is

ipmi0.

After selecting the NIC type and name, click OK and the new entry will appear in the Network Config dialog. If more networks are needed, simply repeat the procedure above for each of them.

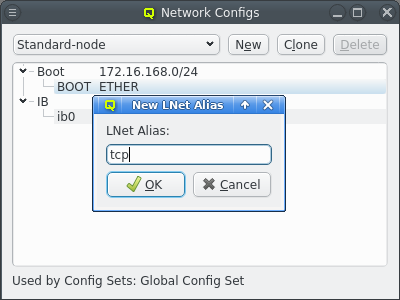

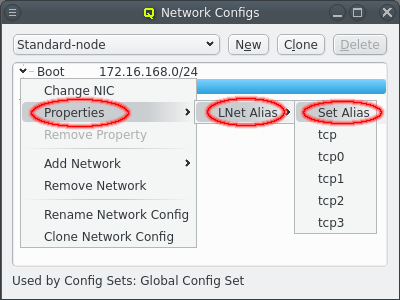

3.4. Lustre LNet Alias

Starting with QluMan 13.2.0, the Lustre configuration options were extended to include settings for bringing up LNet on any Lustre node including servers. This works as follows:

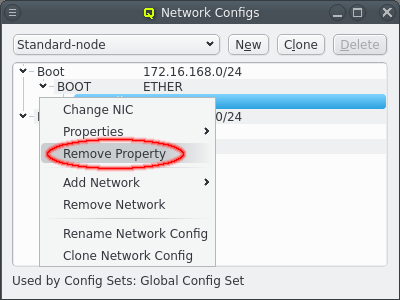

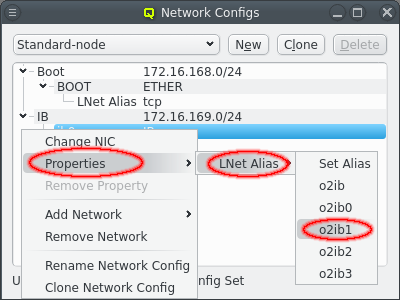

In a Network Config, an LNet alias can be added for each NIC via the context-menu . This opens up a dialog asking for the name of the alias to add. An LNet alias for Ethernet NICs must start with tcp while for Infiniband adapters it must start with o2ib. In both cases it may optionally be followed by a number. For convenience, the context-menu also includes preset aliases sufficient for most configurations. An LNet alias can be removed again by choosing Remove Property from the context-menu.

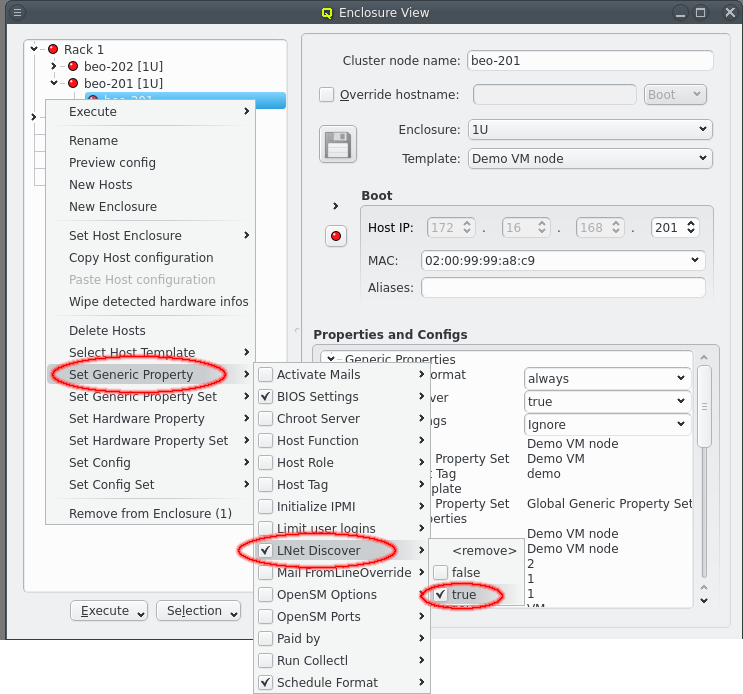

A new boolean Generic Property LNet Discover was introduced and can be added to a Generic Property Set or assigned directly to a host. This property controls whether LNet on a host should auto-discover other Lustre NIDs in the network and is only relevant for multi-rail configurations. For some non multi-rail physical network topologies this has to be explicitly disabled to guarantee stable operation.

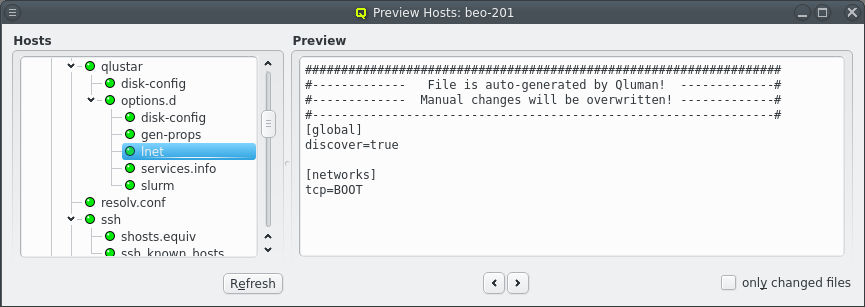

If a Network Config with LNet aliases is assigned to a host, a config file is generated for

that host upon boot at /etc/qlustar/options.d/lnet and Lustre LNet is configured accordingly.

|

For Lustre clients, setting an LNet alias remains optional and LNet aliases tcp and o2ib0 are still generated automatically via a modprobe file for the NIC used to mount the Lustre Filesystem. This method is less flexible, to be deprecated and may fail when Lustre filesystems are mounted from different NICs on the same node. |

3.5. Host specific Network Settings

When all the networks have been defined and required Network Configs were created and assigned to a host through a template or directly, the final step of the network configuration involves the host’s individual settings.

They are displayed by selecting the host in the Enclosure View. For each network the host belongs to, the Host IP, MAC address (where applicable) and optional host aliases are shown and can be set or changed.

If the host already got a Network Config assigned at its creation time, either from the Global Template, by setting a Host Template or by copying the config from an existing host, then the boot network will already have a Host IP and MAC address filled in. Both of these are required for the host to be able to boot from the network and to receive the correct configuration at boot.

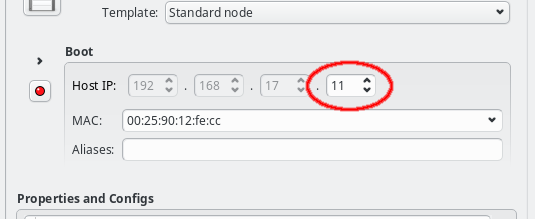

The Host IP can be entered directly or changed using the up or down arrows. Only the host part of the IP can be changed in this way, its network part is fixed and grayed out: The GUI ensures that only IPs being part of the corresponding network can be entered. If no Host IP has been manually set for the host yet, then the lowest IP in the network will be suggested and the Host IP will be color coded to indicate unsaved changes. More info about color coding and how to save changes can be found in this section.

|

The Host IP for slave networks is auto-generated by mapping the host’s IP in the master network into the slave, such that the last digits of the IP are identical in both networks. It can therefore not be edited. |

For networks that use DHCP(boot) to configure the network adapter, the correct MAC address

must be given. It must be entered as six hexadecimal bytes separated by ":". For example:

00:25:90:12:fe:cc. Again, color coding of the MAC label will show whether the

entered MAC address is valid, although most invalid input is rejected by the GUI outright. For

networks defined as DHCP(external), the MAC field is purely informational and not used by

QluMan.

The last part of a host’s network settings are optional host aliases. These are simply alternative names under which the host can be reached and which will be added to the NIS database. Aliases are entered as a space-separated list of hostnames and must be unique. For performance reasons, the uniqueness is not fully checked by the GUI, so care must be taken to avoid collisions.

3.6. Names of a Host

A host can have multiple names. Typically there is at least one name for each network it is

connected to. The primary name of a host in QluMan is its Cluster node name, which is its

name shown in the Enclosure View tree. By convention and default, the head-node is named

beosrv-c and the FrontEnd node login-c. Note, that these are their names in the

cluster-internal boot network and not their real hostname (displayed by the hostname

command). Per default, compute nodes are named beo-<N> with <N> being a two-digit running

number and their Cluster node name will also be used as their real hostname.

|

We strongly advise to keep the head-node |

A host’s Cluster node name will always resolve to its IP in the boot network. It is also used

as the stem, when the name of the host in networks slaved to the boot network is generated with

the configured pre-/suffix of the slave. E.g. per default, the name in the IPMI network has a

suffix of ipmi, which means that a host with Cluster node name beo-01 will become

beo-01-ipmi in the IPMI network.

Sometimes the generated names are inconvenient to remember, or the network does not have generated names at all, when it is neither the boot nor a slave network (e.g. the external network of the FrontEnd node). In such cases, a host can be given additional names by defining host aliases for it.

Even stronger than an alias is the hostname override. The hostname override does not just add

an additional name for the host, but also makes it the real hostname that is displayed by the

hostname command) and will appear on the shell prompt, in logfiles or outgoing mails from

that host. This is commonly used for FrontEnd nodes, so that the visible name matches the

external name of the host that is used to connect to it.

3.7. Infiniband Network

For most practical purposes, Infiniband (IB) adapters need to be configured with an IP address (IPoIB) just like Ethernet adapters. If you have chosen to configure an IB network during installation, this section is mostly about how to review or change the initial settings. If not, a network definition for IB has to be created in the Networks dialog. There, a network IP address and a netmask can be chosen for the IBoIB Network.

The Infiniband network must not collide with any other network. This is prevented automatically in the settings dialog. It is convenient to define the IB network as a slave to the boot network. Then the IB IP of each host is computed by mapping the host part of its Boot IP to the IB network and no further configuration is necessary. Example: If a host’s boot network IP address is 192.168.17.100, the corresponding slaved IB IP address will become 192.168.18.100.

|

This mechanism requires the IB netmask to be at least as large as the Boot Network netmask. Hence, smaller values won’t be selectable. |

In order to have the IB adapter of a node configured correctly during the boot process, the network definition must also be added to the Network Config used by the host. It is not uncommon, that a cluster consists of hosts with IB and hosts without. In such cases, multiple Network Configs must be created (at least one with IB and one without IB) and assigned to the different hosts in one of the standard ways (via templates or directly). If the Network Config for a host includes a NIC of type IB, during its boot process, the necessary Infiniband kernel modules will be loaded and IP-over-IB will be set up with the IP mapping configured in the network definition.

3.7.1. Activating/configuring OpenSM

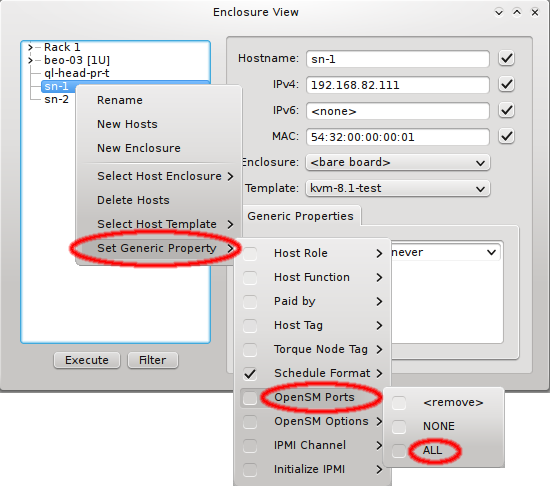

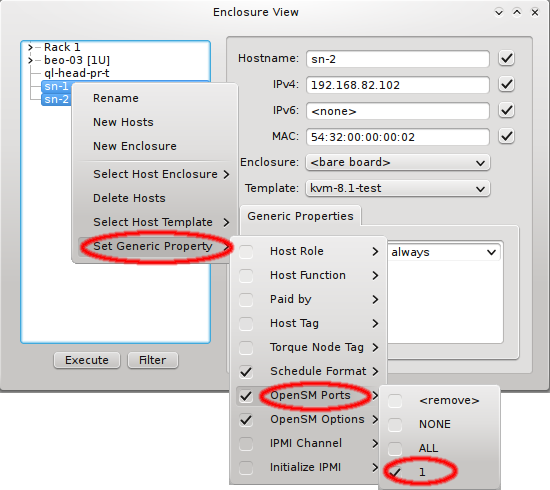

In an IB fabric, at least one node (or switch) has to run a subnet manager process that manages the IB routing tables. Qlustar provides OpenSM for this task. If the head-node is also part of the IB network, it’s usually best to configure it to run OpenSM. This might have been chosen during installation, in which case there is nothing more to be done. If not, you have the option to run OpenSM on ordinary nodes too.

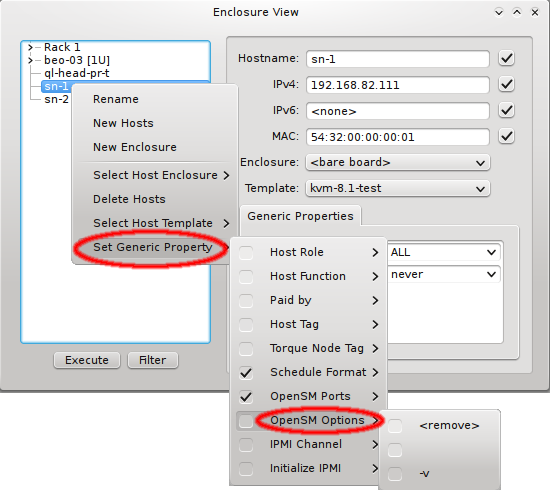

In this case, it is advisable to run OpenSM on two or three nodes (not more) for redundancy reasons. It is therefore best, to configure this directly for the chosen hosts, rather than using a Host Template or generic property set. After selecting the host(s) where OpenSM should run in the Enclosure View, open the context menu and select . The next time the host(s) boots, the OpenSM daemon will be started on all its Infiniband ports.

If a host has more than one IB port, OpenSM can also be configured to run only on a specific

one rather than on all of them. The port can be specified by its number or by its unique ID. As

this is an uncommon configuration and the unique ID is unknown beforehand, there is no preset

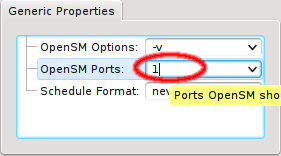

value for this. To create a new value, first select an existing value, e.g. ALL, for the

generic property OpenSM Ports. You can then edit the value in the Generic Properties box of

a host. Editing the line and pressing Enter will create the new value. Beware

that this will only affect one shown host. To assign the new value to other hosts, select them

and then change the OpenSM Ports property through the context menu.

In some circumstances, it might be necessary to run OpenSM with extra options. This can also be

configured via Generic Properties. The only preset value is the empty

string, so you need to create a new value for the options you require. First add the empty

value of the generic property OpenSM Options to one host. Then edit the value to your

requirements and press Enter to create it. Finally add/change the OpenSM Options

generic property for all relevant hosts.

3.8. IPMI settings

Configuring IPMI is similar to Infiniband and also involves multiple steps, because there are a number of options to set. If you have chosen to configure an IPMI network during installation, a larger part of this section is about how to review or change the initial settings. If not, a network definition for IPMI has to be created in the Networks dialog.

There, an IPMI network address and netmask can be chosen. The IPMI network must not collide with any other network. This is prevented automatically in the settings dialog. By making the network a slave to the boot network, the IPMI IP of each host is computed by mapping the host part of its Boot IP to the IPMI Network. Example: If a host’s boot network IP address is 192.168.17.100, the corresponding slaved IPMI IP address will become 192.168.19.100.

Just as in the case of an IB adapter, the network definition for IPMI must be added to the Network Config used by the host. It is not uncommon, that a cluster consists of hosts with IPMI and hosts without. In such cases, multiple Network Configs must be created (at least one with IPMI and one without IPMI) and assigned to the different hosts in one of the standard ways (via templates or directly). If the Network Config for a host includes a NIC of type IPMI, the node is ready for monitoring its temperature and fan speeds.

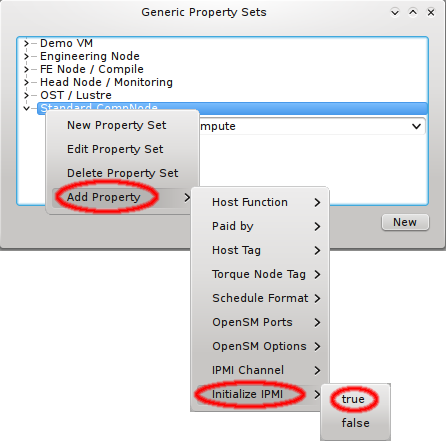

Enabling IPMI nodes for remote control involves one more setting: The generic property

Initialize IPMI. Per default, the settings of the IPMI cards are not touched by Qlustar as

they retain their configuration across boot. However, if the Initialize IPMI generic property

is assigned and set to true, the IPMI card network settings of the corresponding host will be

set every time it boots. Changing the value of this property to true and after booting back

to false, allows a one-time setup of the card’s network properties.

3.9. Global Network Settings

Some network settings can not be assigned to a group of nodes but relate to the cluster as a whole and how it connects to the outside world. This includes the configuration of the DNS and an optional HTTP Proxy. To configure these global network settings, select .

3.9.1. DNS (Domain Name System)

DNS has a hierarchical design. Each correctly configured computer knows about a DNS server that will handle local requests. Should the request fall outside the scope of the local server, it will ask the next higher server in the hierarchy. Starting with Qlustar 11, local DNS requests are handled by dnsmasq which will answer requests concerning any QluMan configured node automatically. The handling of requests about external hosts are affected by three QluMan settings: The external DNS servers, the DNS search domains and Cluster-external Hosts.

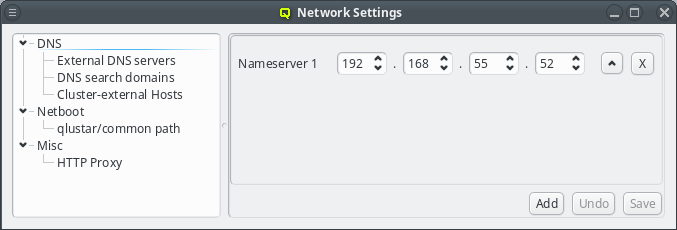

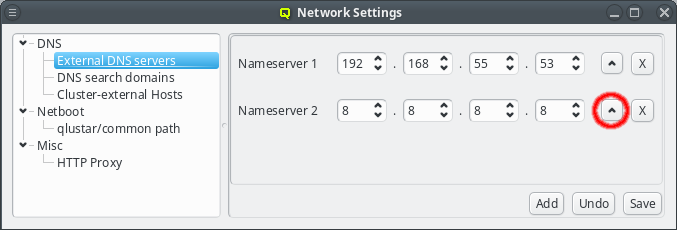

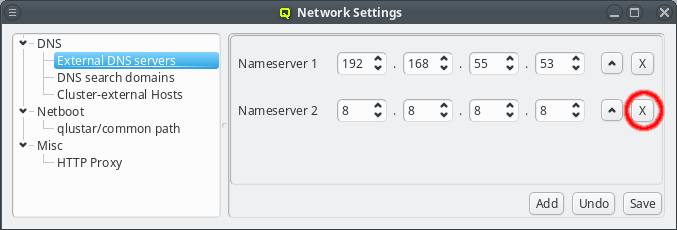

3.9.1.1. External DNS servers

External DNS servers will be needed to resolve any DNS request about hosts outside of the cluster. Since this is used to resolve hostnames into IP addresses a nameserver can not be identified by its hostname, but must be specified by its IP address. This are usually servers maintained by the local IT department or by your internet service provider (ISP). There are also public DNS servers anyone can use as a fallback, for example Googles public DNS server (IP 8.8.8.8).

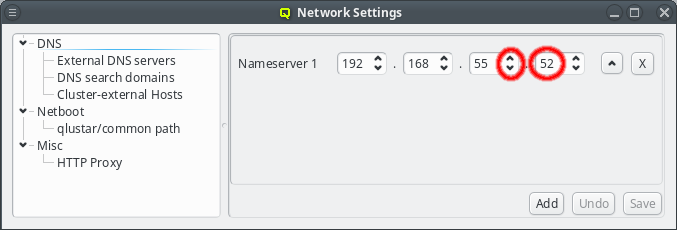

The nameserver specified during the installation process should already be set unless you upgraded from Qlustar 10.1. If the IP of the nameserver changes, it can be edited by either pressing the up/down arrows next to each part of the IP or by clicking at the number and entering it directly. Once the correct IP address has been entered it needs to be saved by either pressing Enter, or by clicking the Save button. The Undo button reverts the nameserver entry to the last saved IP.

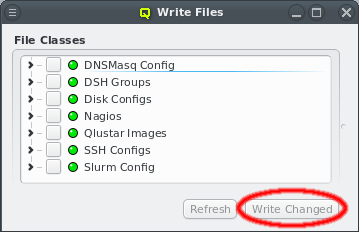

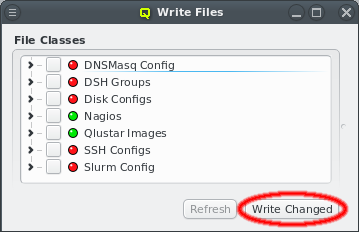

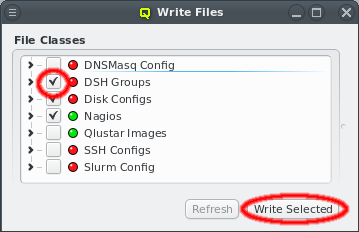

|

Saving changes in this dialog doesn’t activate the new config immediately but only saves them

in the QluMan database. To finally activate them, the |

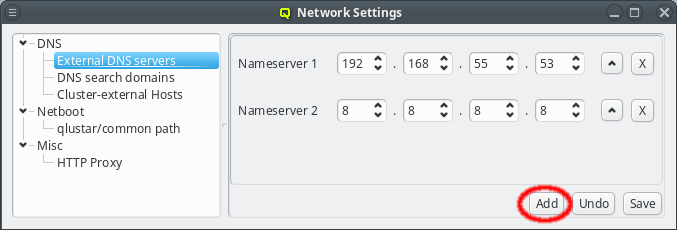

For redundancy purposes more than one nameserver can be set. To add an additional nameserver click the Add button. This will add a new nameserver entry to the GUI defaulting to Googles public DNS server. The entry may then be edited as described above. Use this also when upgrading from a previous version of Qlustar to add the first nameserver.

When a DNS request cannot be answered locally, the external name-servers will be asked one by one in the order shown in the GUI. This order can be changed by pressing the Up button next to the nameserver. This will move the respective nameserver up one position in the list.

When a server is no longer valid or wanted, it can be removed by pressing the X button next to the nameserver.

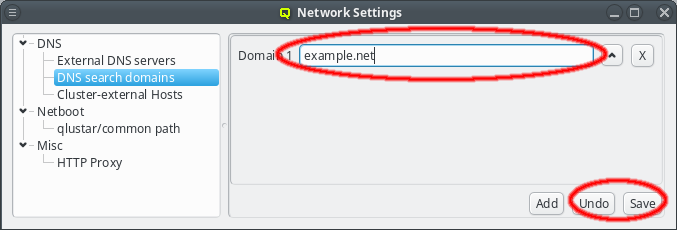

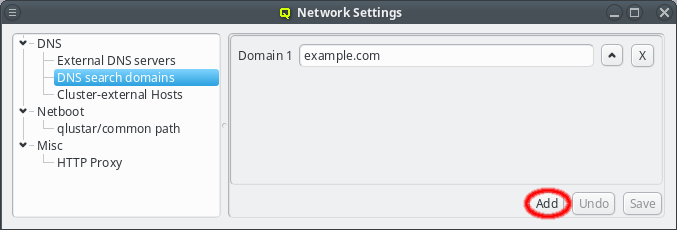

3.9.1.2. DNS search domains

A DNS search domain is what the DNS service will use to resolve hostnames that are not fully qualified. A fully qualified domain name is one that can be resolved by working down from the root domain (which is just an empty string) and eventually ending up with an IP address. In less technical terms, it’s one that ends in a top-level-domain such as .de, .net, .org, .com, etc..

In practice, whenever a device tries to resolve a hostname that can not be resolved as is, the resolver code appends search domains to the hostname and tries the resulting names one by one to see if it resolves then. The list of search domains usually contains at least the main domain of the organization the cluster is located at, but often also sub-domains of it. Example: The search domain list contains my-department.my-firm.com and my-firm.com. A look-up for the host mailserv will then first try mailserv as is. If that fails, mailserv.my-department.my-firm.com is tried and if that also fails, finally mailserv.my-firm.com. This mechanism allows using the shorter hostname to refer to some hosts that are outside the cluster.

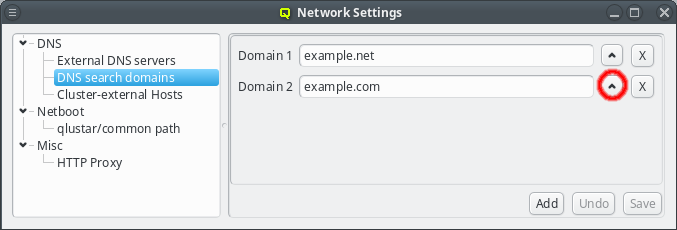

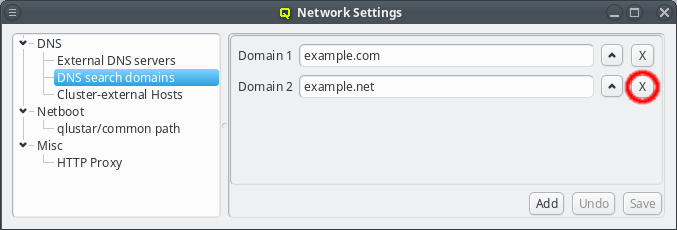

A search domain may be edited by clicking the text-field for the domain. As soon as changes are made, the Undo and Save buttons will become enabled. Changes are saved by either pressing Enter, or by clicking the Save button. An additional search domain can be included by clicking the Add button.

The search domains will be tried in the order shown in the GUI. Just like with the nameserver entries, the order can be changed by clicking the up button. This will move the selected domain one slot upwards. Search domains can also be removed by clicking the X button.

|

The DNS search domains are set via DHCP on each host as it boots and are not updated at run-time. So any changes made, will only affect hosts booted after the change was saved. Already running hosts need to be rebooted to catch the change. |

3.9.1.3. Cluster-external Hosts

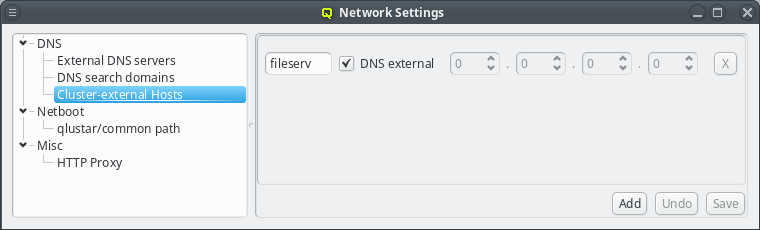

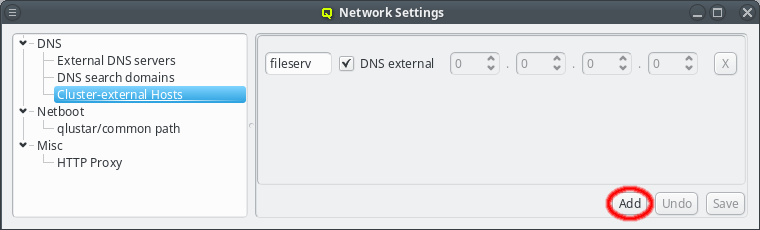

While QluMan automatically manages the DNS entries for the nodes in the cluster (any node that is shown in the Enclosure view), sometimes there are also hosts outside of the cluster networks that QluMan should know about, e.g. external file-servers that are used in Filesystem Exports.

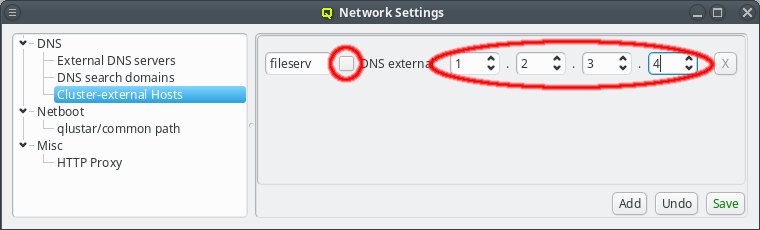

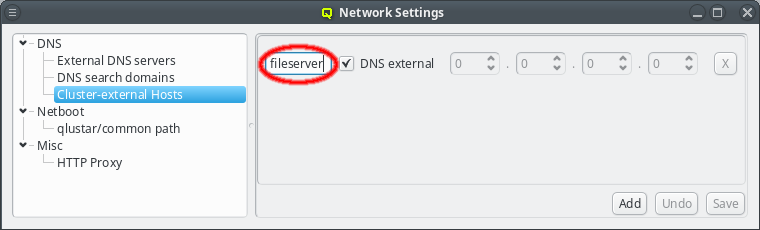

To add an entry for such an external host, simply click the Add button and enter its name. QluMan also allows to add the host to the DNS config for the cluster, but by default, for new entries that is not the case, and the DNS external checkbox is checked.

To add a DNS entry for a cluster-external host, uncheck the DNS external checkbox. This activates the IP widget and you can enter the correct IP. To finalize the input and save the IP, press Enter or click the Save button.

The name or IP of a cluster-external host may be edited at any time by selecting it, or by clicking the Up / Down arrows on the IP. Don’t forget to press Enter or click the Save button to confirm the changes.

|

To finally activate the changes to cluster-external hosts the |

3.9.2. Netboot

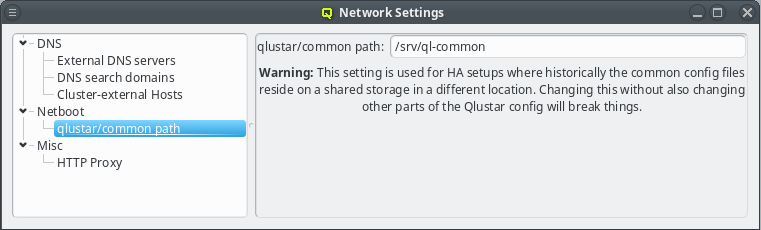

The qlustar/common path specifies the location of the cluster-wide configuration directory on the head-node that is used for its NFS export. This path value should not be changed unless there is a good reason for it. A custom value is usually required only for a head-node setup in high-availability mode.

3.9.3. Other Network Settings

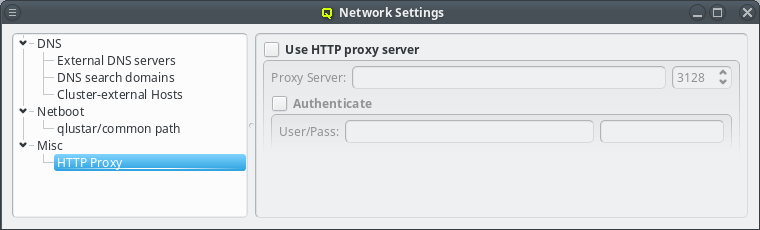

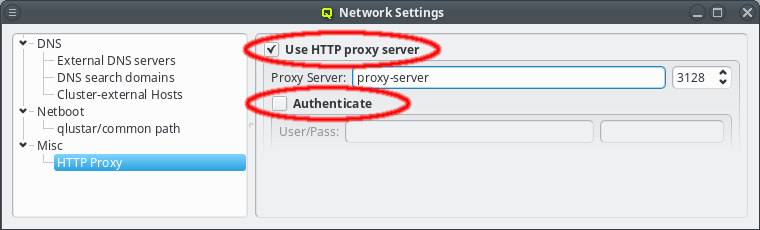

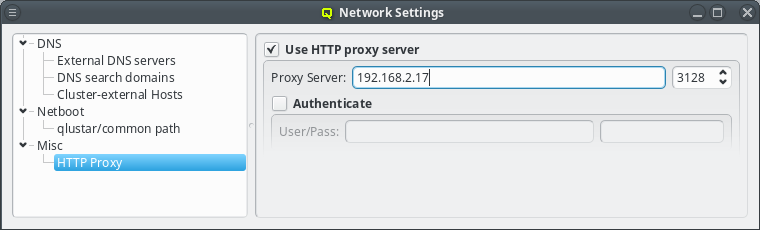

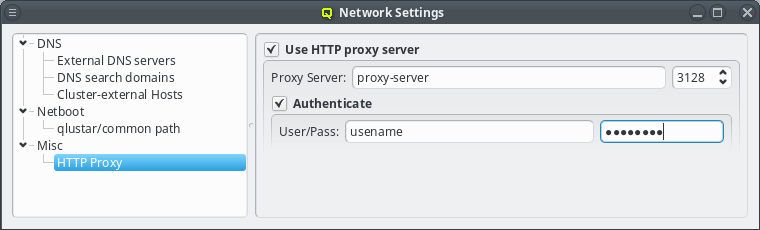

Sometimes the cluster head-node does not have direct access to the internet and requires a proxy server for a connection to the Qlustar repository servers. To enable support for such scenarios, click the check-mark before Http Proxy and enter the hostname of the proxy server together with the proxy port.

If the proxy requires authentication, click the check-mark before Authenticate and enter a username and password. The Http Proxy and User/Pass label will turn green when entries are edited with acceptable input but have not been saved yet. The labels will turn red when the current input is invalid and turn back to black once the input has been saved. The input can be saved by pressing Enter, or will be saved automatically when the input field looses focus. Leaving the user name field empty will disable authentication just the same as clearing the Authenticate check-mark.

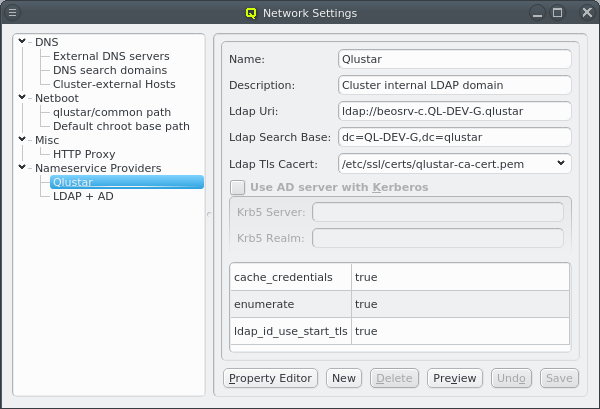

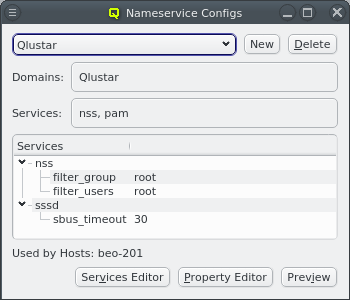

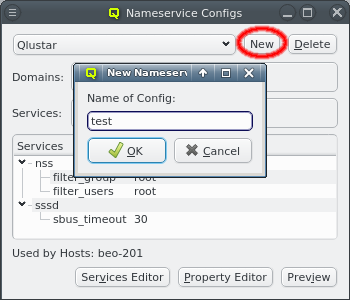

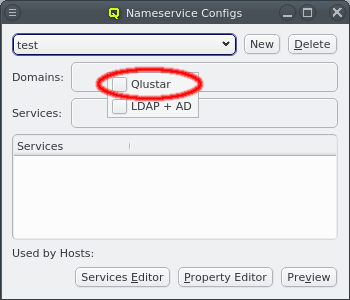

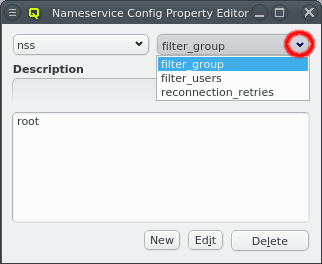

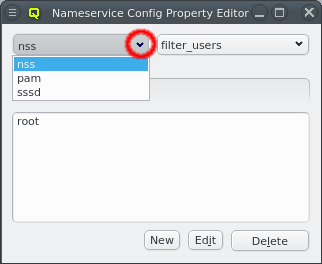

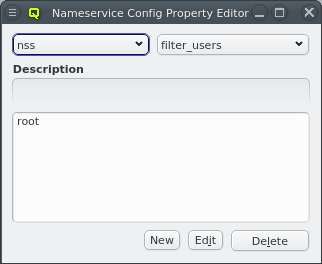

3.9.4. Nameservice Providers

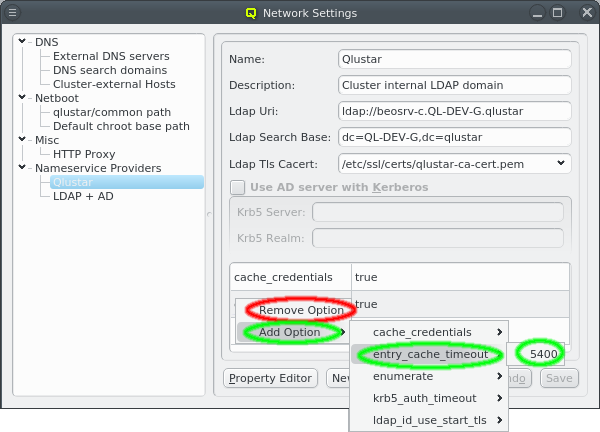

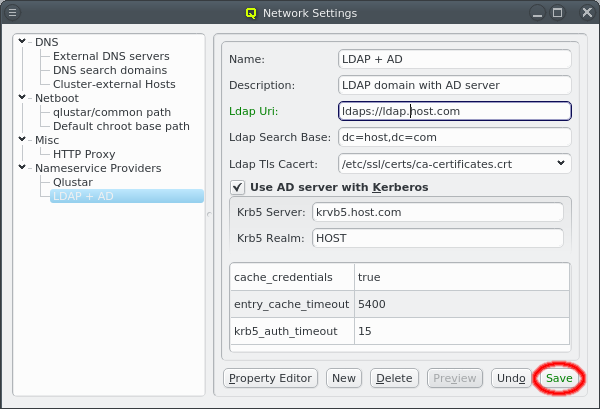

Nameservice providers running LDAP or Active Directory (AD) services can be used to provide user/group information and authentication to the cluster. The provider for the cluster-internal LDAP service is defined by default and named Qlustar. Other provider definitions are only needed if external LDAP or AD services are to be used. If so, they need to be configured as described below.

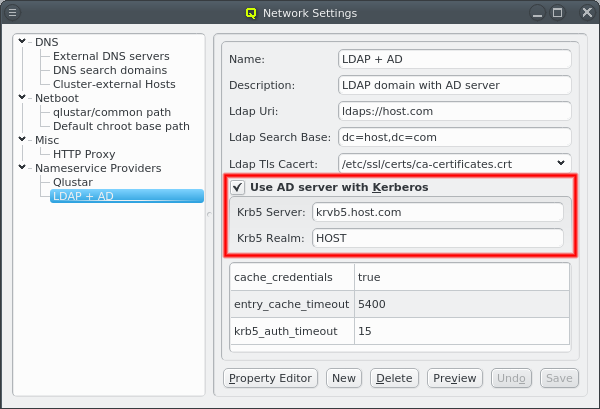

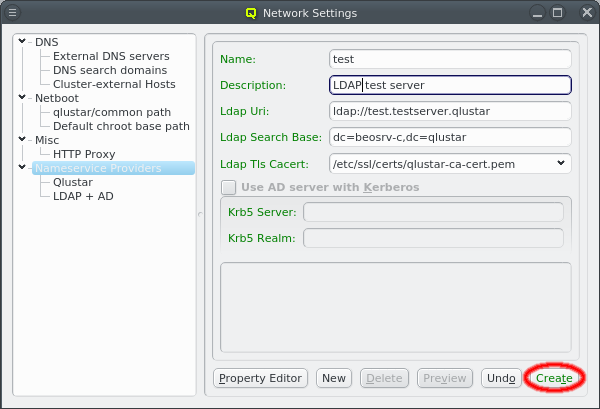

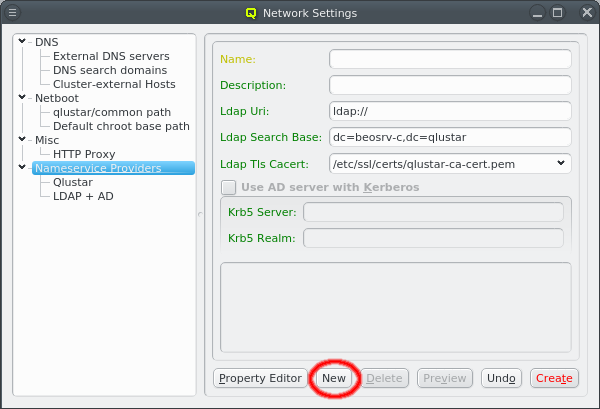

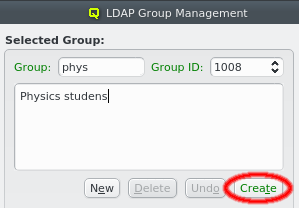

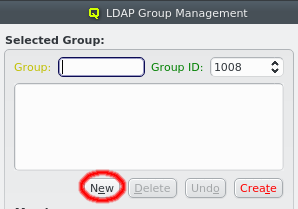

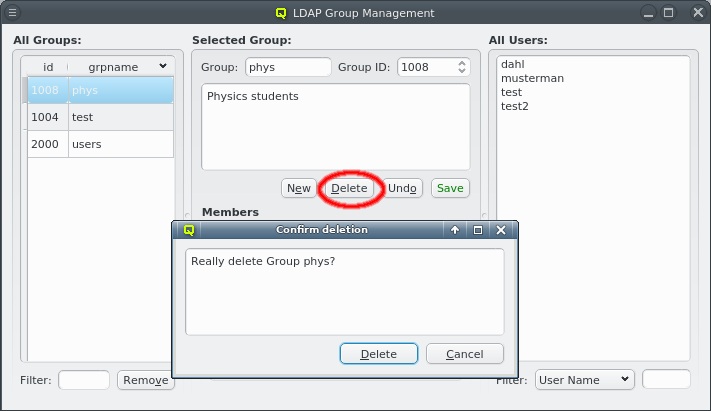

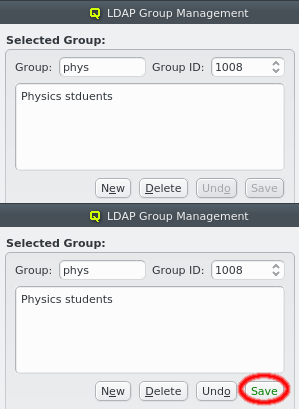

A new Nameservice Provider can be created by clicking the New button and filling out the form. The fields are color coded to show required and valid inputs. Once all fields are filled in correctly, the Create button turns green and clicking it creates the new Nameservice Provider definition. To create an AD provider definition, check the Use AD server with Kerberos check-mark and enter the Kerberos 5 server and Realm information.

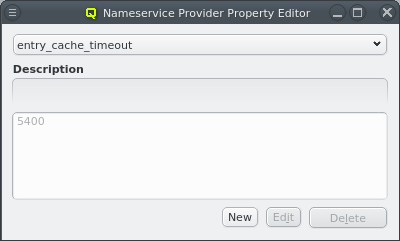

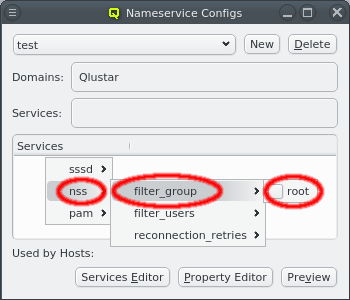

Once a Nameservice Provider has been created with its essential configuration, additional

options can be added or removed at the bottom through the context menu. Only a few options are

pre-defined, those needed for the Qlustar config. Additional ones can be added through the

property editor. For details about allowed options and their meaning please refer to the

sssd.conf manpage.

Besides adding or removing options, the essential configuration can also be edited by clicking the respective field. Any changes must be saved by clicking the Save button at the bottom. Clicking the Undo button or selecting a different entry in the tree on the left will undo any changes.

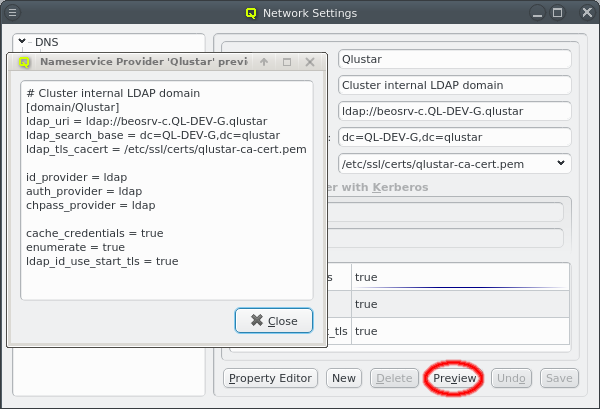

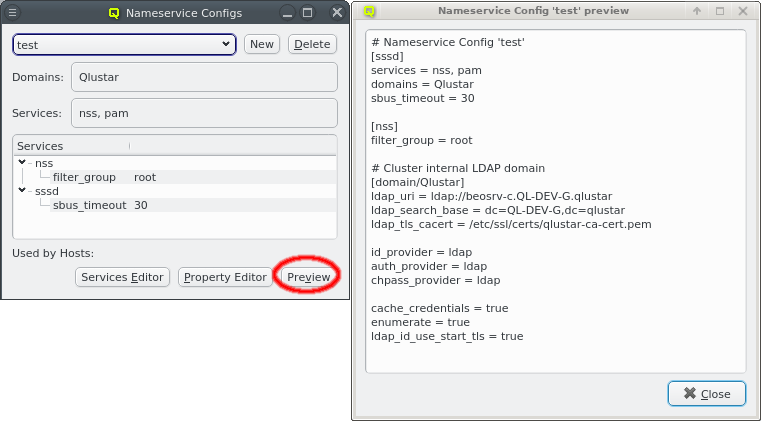

Once the configuration is complete, the Preview button allows double checking the

configuration that will be generated when the selected Nameservice Provider is used later to

generate the sssd.conf of a node. The shown snippet will be a part of the overall sssd

configuration of the host.

3.9.5. Qlustar Multicast Daemon

The boot process for Qlustar has two stages. First the kernel and a minimal initramfs is loaded

using PXE support of the node’s BIOS. The initramfs then downloads a squashfs image using

multicast provided by the Qlustar Multicast Daemon (ql-mcastd).

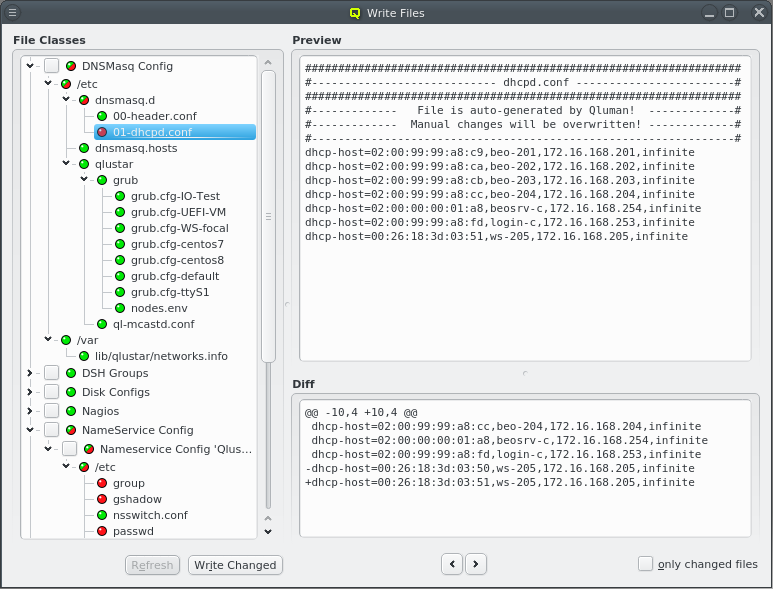

The generated configuration of ql-mcastd can be previewed and written as part of the DNSMasq

file class. It includes any configured network that has bootable nodes as well as IP and port

parameters defined in the MCastd section of /etc/qlustar/qluman/qlumand.cf:

[MCastd] multicast_ip = 232.1.0.0 multicast_control_port = 5000 multicast_port_min = 5001 multicast_port_max = 5999

In the unlikely event of a conflict with other services in the same network, the multicast IP,

control port and port range used for transfers can be changed. After editing the file, the

qluman-server service must be restarted so the new settings will be included in the

ql-mcastd.conf. If the control port has been changed, then, after writing ql-mcastd.conf,

all Qlustar images must be rebuild as well using:

0 root@beosrv-c ~ # qlustar-image-reconfigure all

4. Enclosures

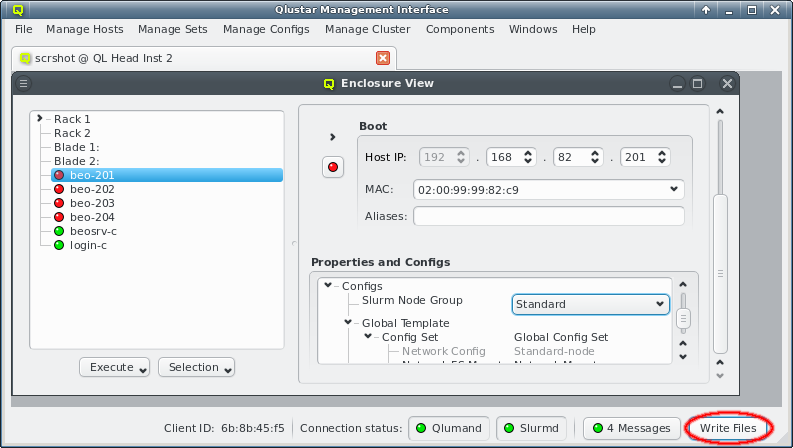

4.1. Enclosure View

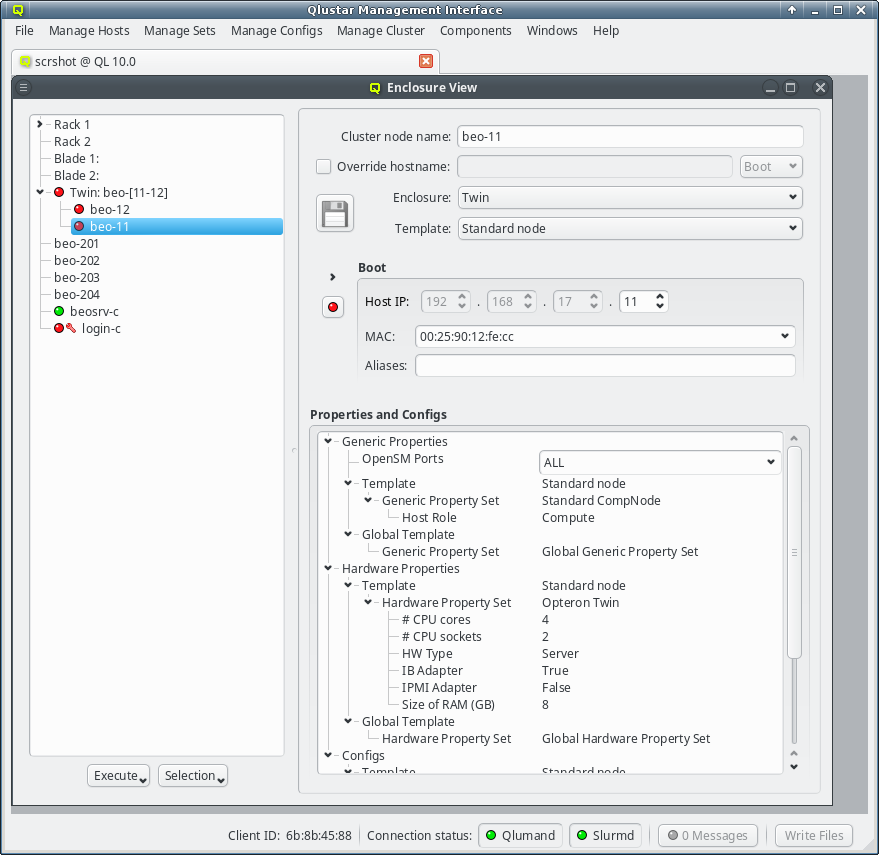

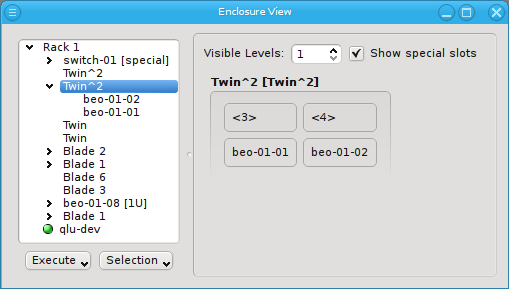

The Enclosure View shows an overview of the cluster in a tree structure. The tree is designed

to reflect the physical structure of the cluster. At the lowest level are the hosts. A host can

be a head, storage or compute node but also a switch e.g. In general, anything in the cluster

that has a name, IP and MAC address is a host.

A host is represented by its bare board and should be placed into a host enclosure. 1U, 2U,

3U or 4U enclosures contain exactly one board, while others like a Twin or Blade chassis can

have multiple boards. Once defined, host enclosures can be placed into racks, racks grouped

into rows, rows into rooms and so on. The tree has a simple drag&drop interface. E.g. you can

select a number of nodes (by holding the Ctrl key and clicking or holding the

Shift key and dragging the mouse) and drag&drop them into a Blade enclosure.

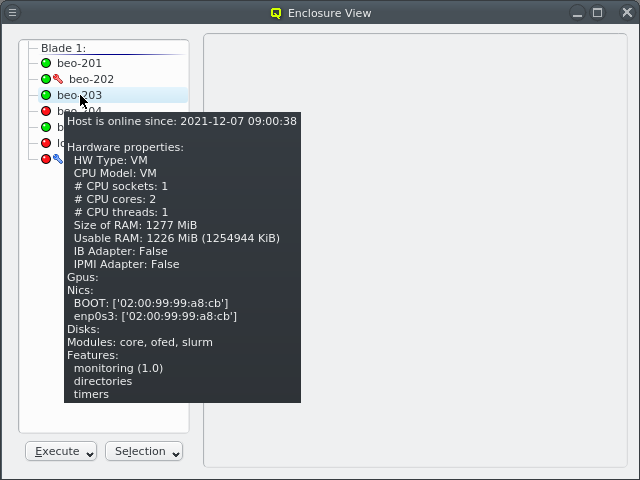

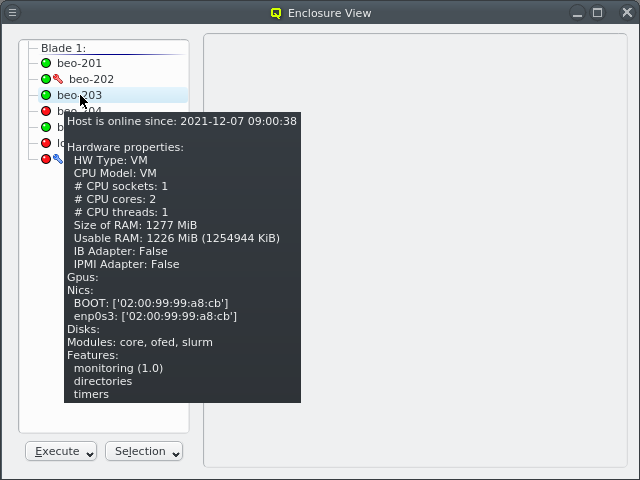

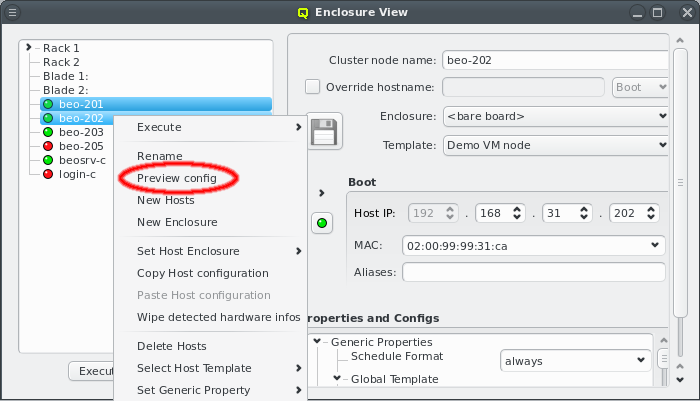

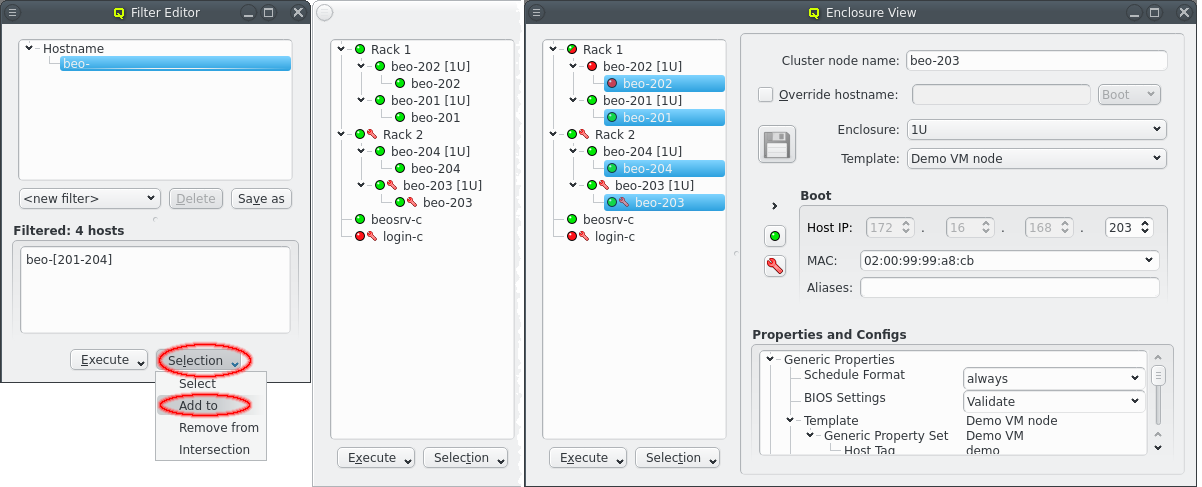

Selecting a node in the tree displays its configuration info on the right hand side. Hovering over a host entry in the tree view brings up a tool-tip with additional info about the host.

4.1.1. Editing a host’s individual network information

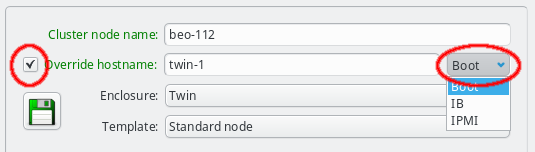

The cluster node name can be edited at the top. Normally, the node name will also be used as

the real hostname (displayed by the hostname command). If a different hostname is desired, it

can be entered by clicking the Override hostname check-mark and entering the name

in the text field next to it.

To complete the change of the hostname, the network that the chosen new hostname should be part of, must be selected from the list offered in the drop-down menu at the right of the text-field. This choice determines the IP address of the host, which will be used for the hostname in the NIS config, and which applications will see when they resolve the hostname.

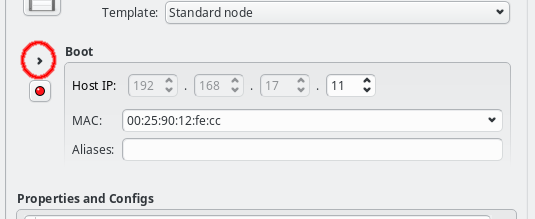

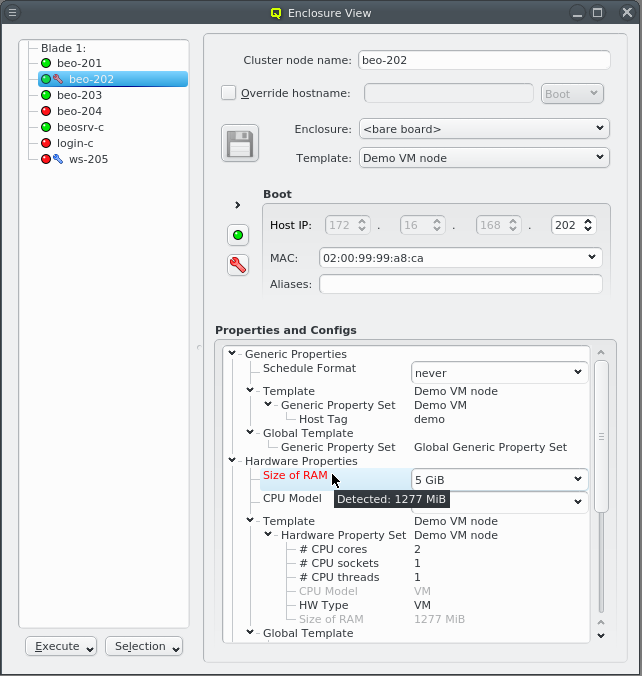

In the center of the window, the host’s individual network config is shown. Initially, only the boot network is visible, as shown in the screenshot. Clicking the > button will display the complete list of networks assigned to the host through its Network Config. For each network, the Host IP, MAC address and optional aliases are shown (more details in section Host Networks Settings).

While editing a field, the label corresponding to it, will change color indicating the validity and state of the information entered so far. A green label means that the current input is a valid choice, but has not been saved yet. A yellow label means that the input is incomplete, but can still be completed to become valid (this only applies to the MAC address field, in case a partial address is entered). A red label indicates that the current input is invalid and can not be used.

The tool-tip, displayed when the mouse moves on top of the label, states the reason, why the entered value is invalid. The most likely reason is, that the input is already used by another host. The node name, hostname override, the host IPs, MAC addresses and aliases must all be unique.

Along with the color coding of the label, the Save button will also become enabled and change color, whenever a field is edited and not yet saved. It will turn red if any of the changes are invalid, even if some of them are. Again, the tool-tip will point to the reason why values are invalid. Changes can be saved by pressing return in the text field or by clicking the Save button. Once all changes have been saved in the database, the corresponding labels will turn black again and the Save button will become disabled.

|

As long as changes have not been saved, trying to work on a different host by clicking on it in the tree view, or closing the Enclosure View all together, will pop up a reminder dialog asking to save/undo the changes or cancel the action. |

4.1.2. Other host specific settings

For nodes that are not part of a multi-host enclosure (like a Blade or Twin chassis) the

enclosure type can be changed to one of the single-slot host enclosures (1U, 2U, etc.). A new

enclosure of the chosen type will then be created if the node is not already part of one. If a

node is included in a multi-host enclosure, this field will be ghosted.

The template field allows to select a so-called Host Template for the node. Usually, large groups of nodes have an identical hardware and software configuration and will use the same template. Deviations from the properties coming from the template can be set for individual hosts by direct assignment of either a property/config set or individual properties/configs directly to the host through its context menu. In case of unique properties, direct assignments override settings from the template (or property set), for non-unique properties this is additive.

|

Any changes made in the configuration only affect the active node (as indicated by the hostname in the info part of the enclosure view), and not all selected nodes. Configurations for all selected nodes can be made by using the context menu (right click) in the tree view. |

4.2. Managing Enclosures

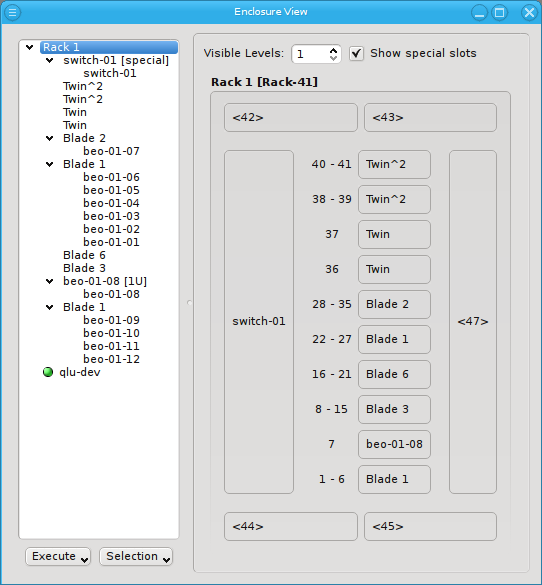

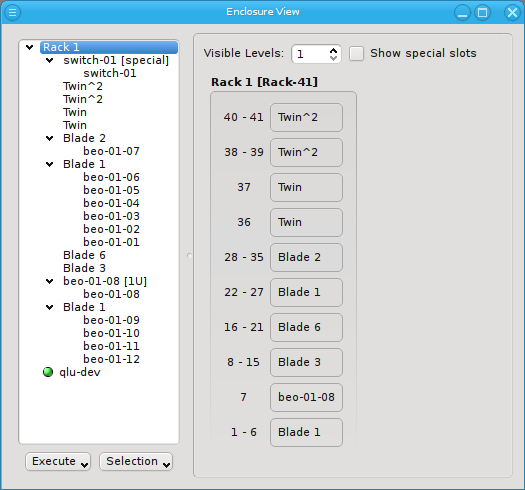

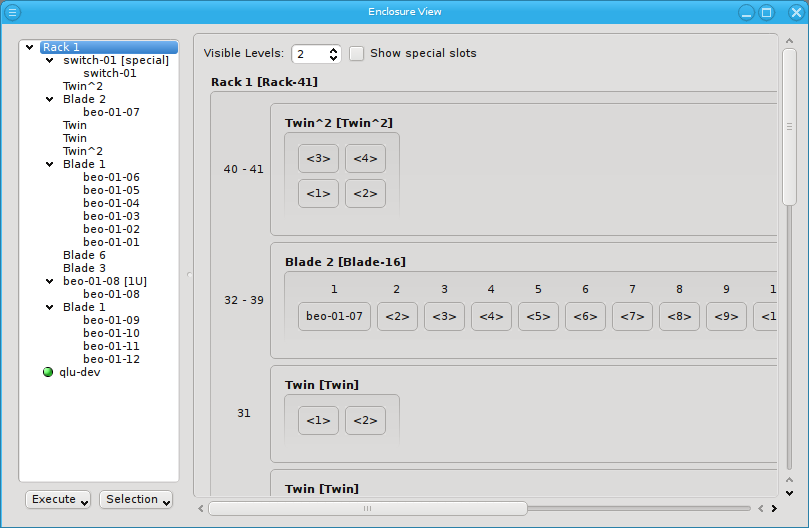

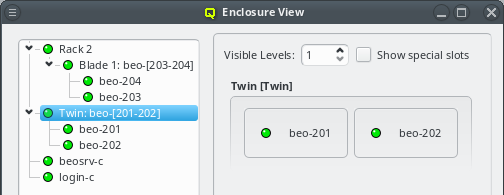

Similar to host nodes, selecting an enclosure entry displays the physical layout of the corresponding enclosure on the right. Controls to select the visibility level and special slots are available at the top of the display. See below for more details about these. The name of the enclosure and its type (in brackets) is shown in the title. In the above case, both name and type are "Twin². Below the title you have a representation of the physical layout of the enclosure. For this example, you see the 2x2 slots that are characteristic of a

Twin² enclosure. Two slots are filled with beo-01 and beo-02 and two slots remain empty,

showing only the number of each slot in brackets.

Selecting a rack shows a more complex picture. The current example rack holds ten enclosures in

its central 19 inch slots: A FatTwin, a Twin, a Twin², a Blade 1, 3 Blade 2, another Twin² and

two 1U enclosures containing beo-11 and beo-12. The special top, left, right and bottom

(not visible) slots are empty. In future versions a network switch or power controller, that is

mounted at some special position of the rack, can be placed into these special slots.

Now let’s explain the effect of the two controls at the top in more detail: The Show special slots check-box controls the visibility of the top, left, right and bottom special slots. Especially if these slots are empty, this will provide a more compact view of the interesting central slots. The other control, the visibility level, controls how many levels of the enclosure hierarchy are shown: Selecting a depth of 2 shows not only the selected rack with its slots but also the contents of the enclosures in each slot.

Since the current version of QluMan only supports host enclosures (Twin, Blade, …) and racks, a depth larger than 2 has no effect yet. In future versions, it will be possible to group racks into rows, rows into rooms, rooms into buildings and so on. This will allow you to reflect the physical layout of your cluster in as much detail, as you like.

4.2.1. Populating Enclosures

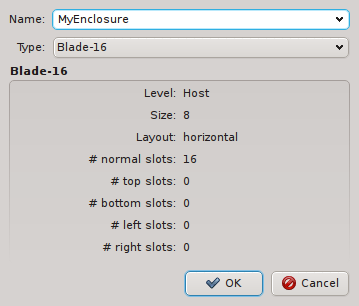

New enclosures can be added through the context menu. The new enclosure must be given a name and its type can be selected. Currently, enclosure types cannot be manipulated yet. This will change in a future version.

Suitable for ordinary servers, a host being selected in the enclosure view can be placed into a single slot host enclosure directly by selecting the correct type in the host info part of the window (see Enclosure View). For host enclosures that can hold more than one server/node (twin servers, blades etc.), drag&drop may be used to move hosts into them. Moreover, it’s also possible to create larger (non-host) enclosures (like racks) and move host enclosures into them also by using using drag&drop. Note, that a bare host cannot be placed directly into a non-host enclosure, only if it is already inside a host enclosure.

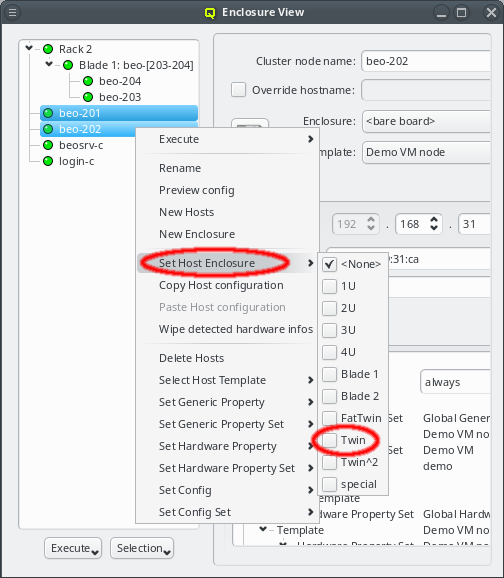

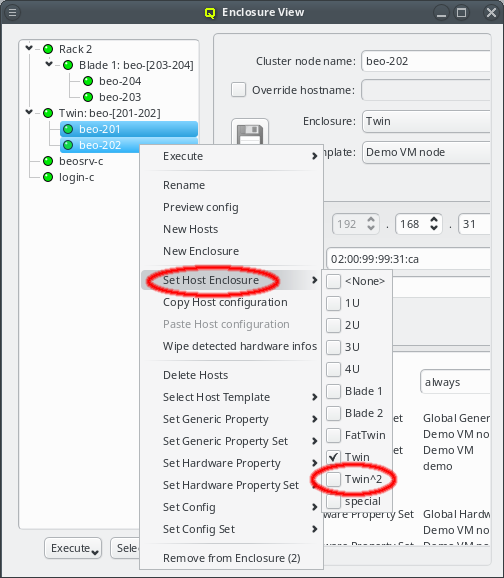

Another option to place hosts into enclosures is by selecting a number of them and then choosing a host enclosure from the context menu. This way, a new enclosure of the selected type is automatically created and all selected hosts are moved into it. If more hosts than can fit into a single enclosure of the chosen type are selected, additional enclosures of the same type will be created such that all hosts can be placed into one of them. This makes it easy to position large numbers of identical hosts into their enclosures. If the selected hosts were in an enclosure before and that enclosure becomes empty and is not itself part of a larger enclosure then the empty enclosure is automatically removed.

Relocating hosts by selecting a different host enclosure is supported not only on directly selected hosts but also on hosts inside selected enclosures. This allows changing the type of enclosure a group of hosts is in by selecting the old enclosure(s) and choosing a new one from the context menu. Note that this procedure does not change the type of the old enclosure but rather creates a new one, moves all the hosts to it and then deletes the now empty old enclosure(s).

Try it out: Place a number of hosts into a large enclosure (like a blade), then select the enclosure and choose a small enclosure (like 1U) to relocate them. In general, such an operation will create one enclosure of the new type and fill all its slots before creating a second one. Hosts having been in different enclosures before, can end up in the same enclosure and hosts that were in the same enclosure before can end up in different enclosures after this operation.

When using drag&drop for the relocation, the host or enclosure is always placed into the lowest suitable slot of the target enclosure. This reflects our experience, that usually enclosures are simply filled from left to right and bottom to top.

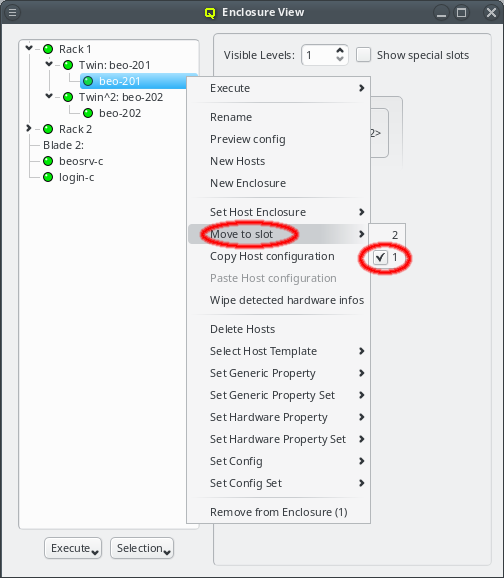

But sometimes this is not the case and a host or enclosure should be in a different slot as compared to the automatic placement. In this case, the host or enclosure can be moved through the context menu. The latter shows all the free slots the host or enclosure can be relocated to and a checked mark indicates the current location. Of course the relocation is only allowed into free slots. Hence, it may require removing (drag&drop out of the enclosure) a host or enclosure temporarily to free space for moving things around.

4.2.1.1. Host Selections

There are situations, where one wants to change a property or config of a whole set of hosts. For example, you may want to change all nodes located in a particular blade to no longer format their disk on boot. This can be achieved by selecting a set of hosts in the enclosure view with the mouse. A range of hosts can be selected by clicking on the first host and then clicking on the last host, while pressing the Shift key. Hosts can also be added or removed from the selection by clicking on a host while pressing the Ctrl key. Once a set of hosts is selected, changes can be made to all selected hosts through the context menu. For instance, this allows changing the Host Template or add/alter a generic property of a set of hosts.

|

When a host is part of an enclosure, selecting the enclosure will also select the host(s) inside of the enclosure, provided it is collapsed. However, hosts inside of expanded enclosures must be selected individually. |

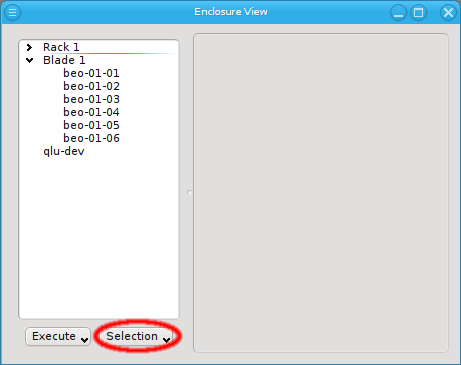

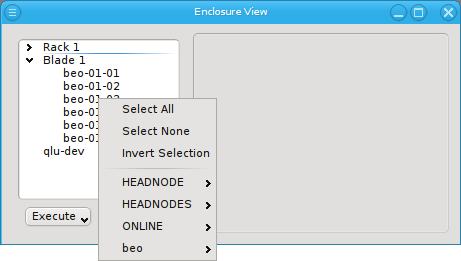

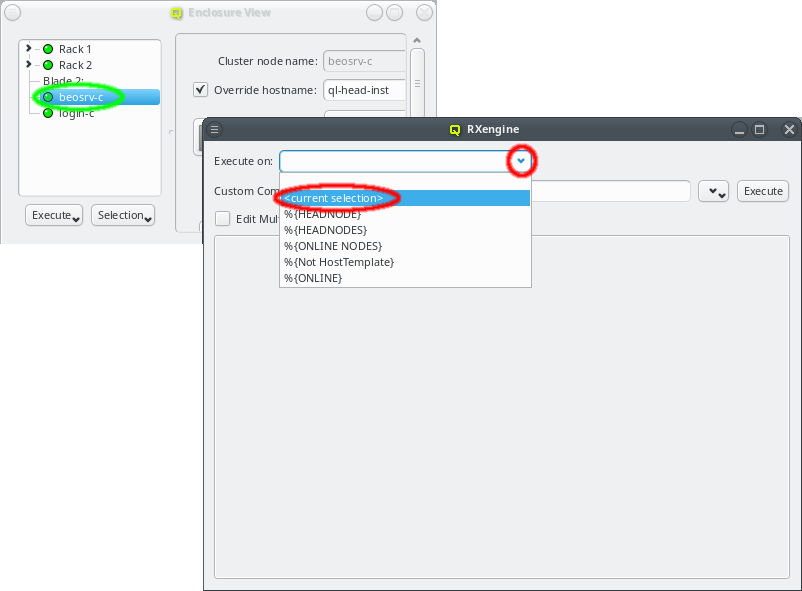

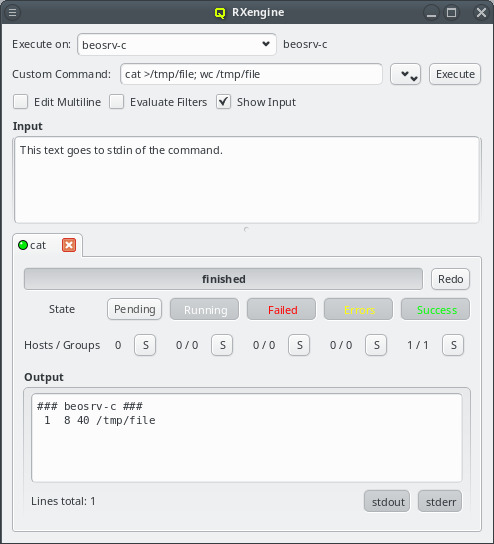

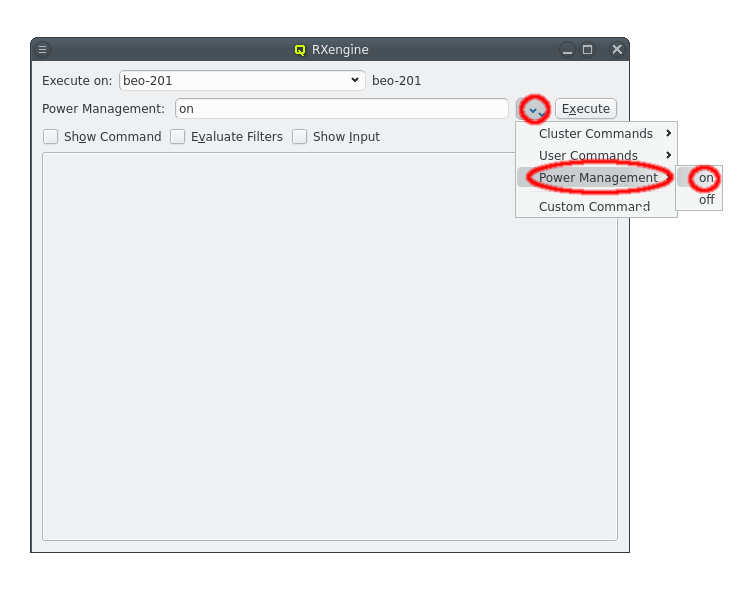

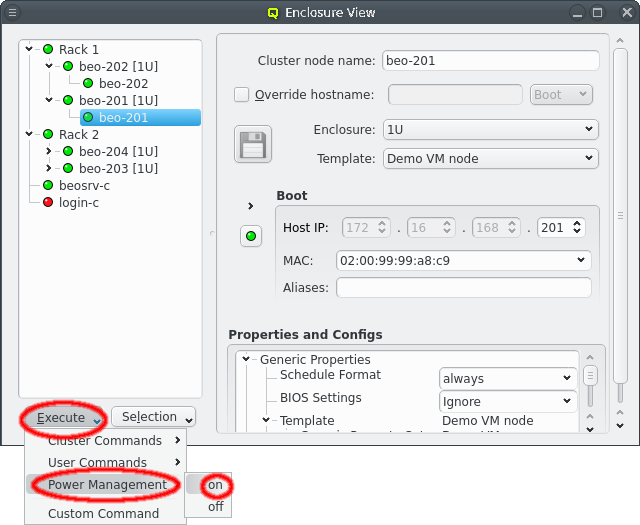

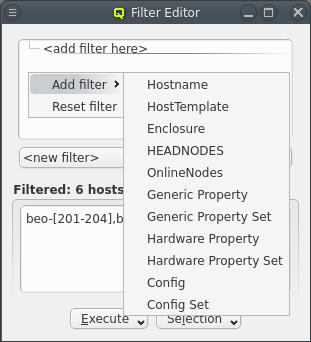

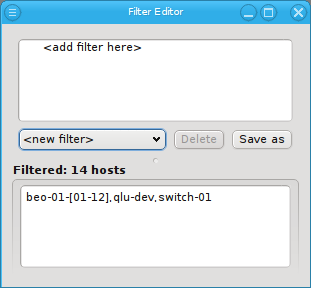

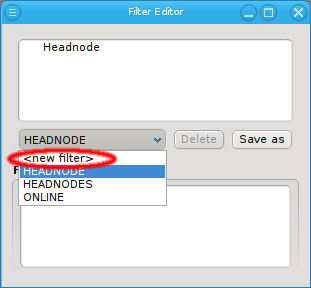

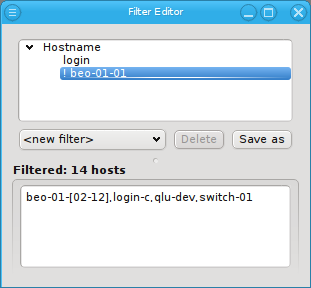

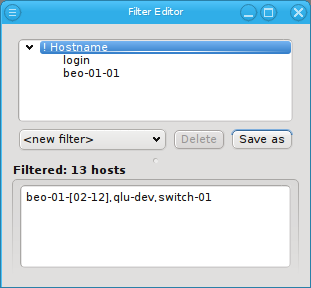

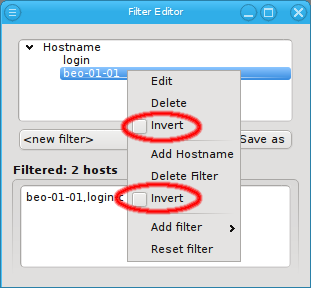

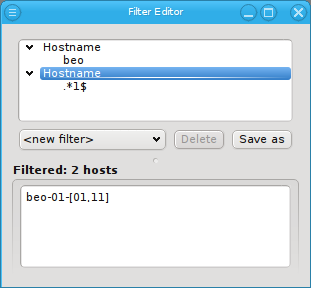

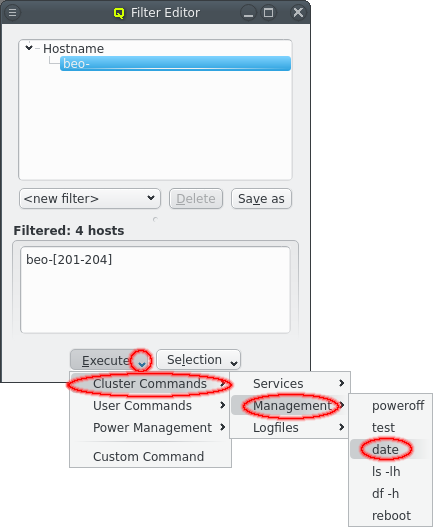

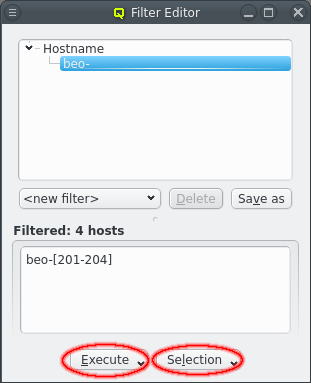

An alternative and more powerful way to select a set of hosts is available via the

Selection button at the bottom of the Enclosure View. When pressed, at the top

of the appearing selection menu you’ll find 3 items: To select all hosts, clear the selection

or to invert the selection.

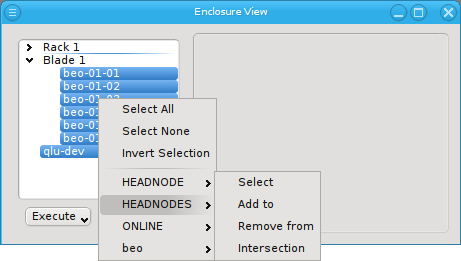

Below these items is a list of filters by which subsets of hosts were defined according to specific criteria. For more details on how to construct such Host Filters see Host Filters. When pressing Select, the selection is set to the hosts defined by the corresponding filter, dropping any previously selected hosts. Add to adds, while Remove from removes the hosts defined by the filter from the current selection. Intersection sets the selection to only those hosts in the current selection, that are also part of the set defined by the filter.

5. Adding/Configuring Hosts

5.1. Adding Hosts

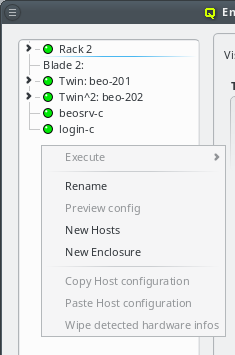

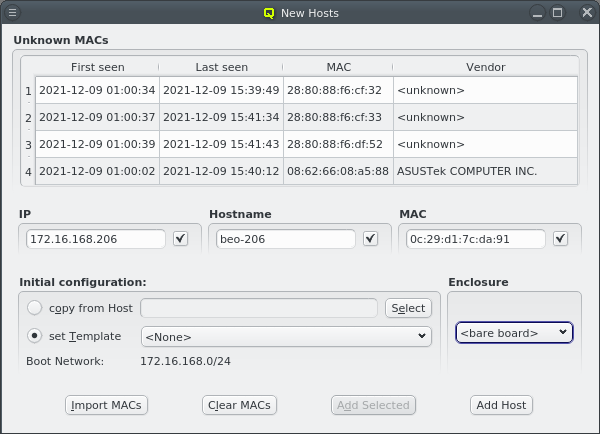

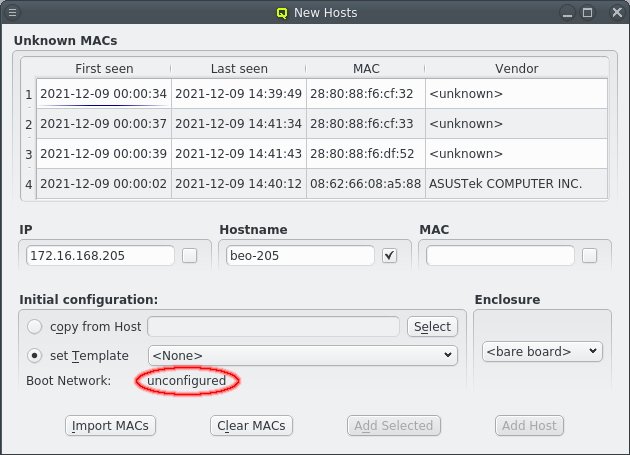

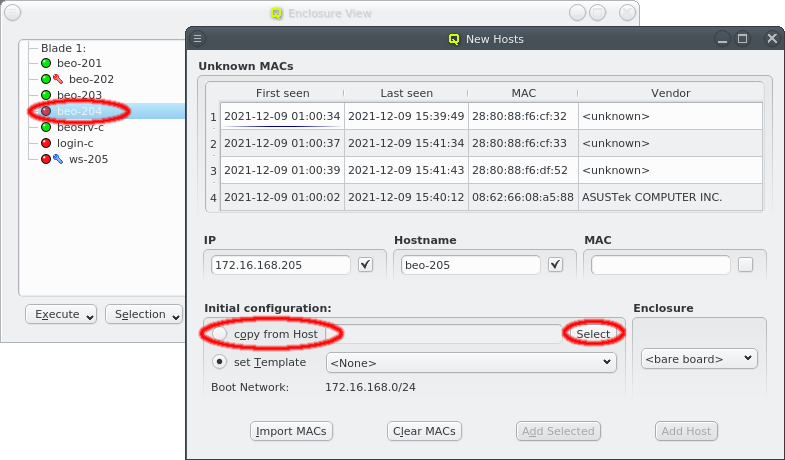

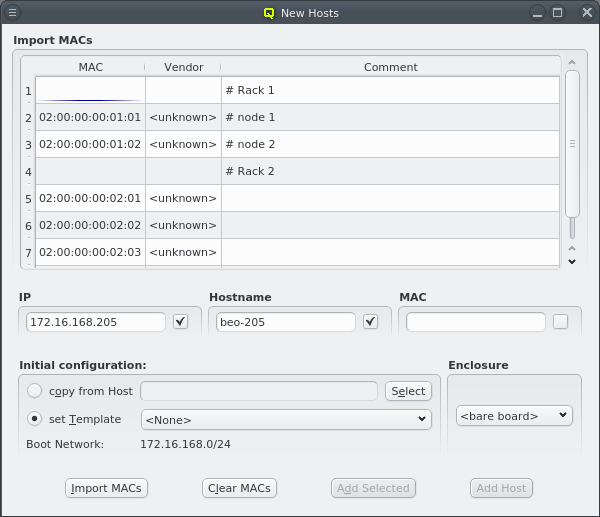

To add new hosts to the cluster you can either select New Hosts from the context menu in the Enclosure View tree or from the Manage Hosts menu. This opens the 'New Hosts' window.

Adding a new host requires the specification of an IP address, hostname and MAC in the corresponding three text fields of the dialog. The entered values are checked for their validity. If one of them is not valid, the check-box to its right remains cleared. The tool-tip of the check-box will then show, why it is invalid. If all the values are valid, all check-boxes will show a solid check and the Add Host button will become selectable.

The validity of the IP is determined by 2 factors:

-

The IP must not be used by another host.

-

It must be part of the boot network to be used for the host.

When the New Hosts window is first opened, the initial config for a new host is empty and

only the properties and configs from the Global Template will affect it. Selecting a template

or copy from Host for the initial configuration will add additional configuration settings

for it.

| The chosen initial configuration must include a Network Config. Otherwise no IP will be valid and no host can be added. |

For convenience and if it makes sense, the IP address and the numeric part of the hostname (if there is one) will automatically be incremented by one, after a host was added. So in most cases, these fields will not have to be changed manually to add the next host. Only the new MAC will need to be entered.

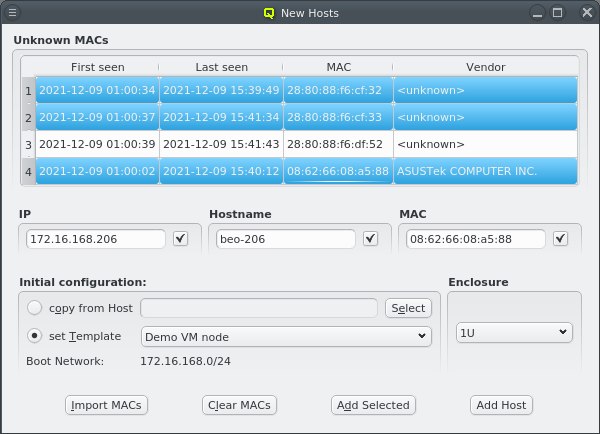

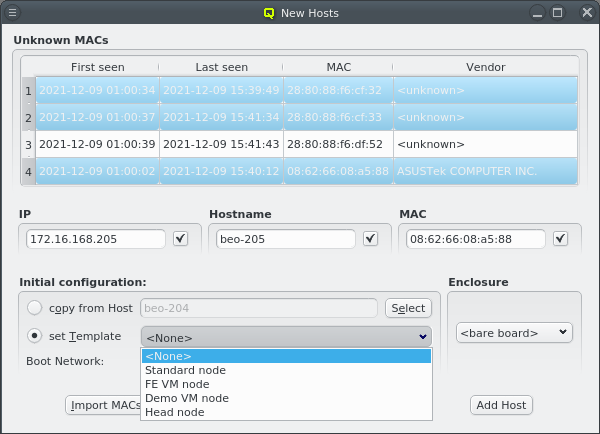

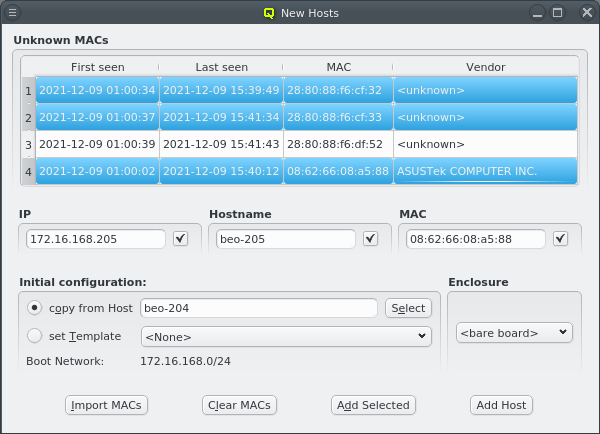

To help adding new hosts, qlumand scans the DHCP log file for unknown hosts that have

requested an IP address. For each unknown host found in the logs, the table at the top of the

window shows the time of the first and last appearance in the log, its MAC address as well as

the hardware vendor this MAC is assigned too (if known). Selecting a MAC in the table copies it

into the MAC text field at the bottom and a double-click adds the host with the

selected MAC. One can also select multiple lines (by holding the Ctrl key and

clicking or holding the Shift key and dragging the mouse) and then click the

Add Selected button at the bottom to add them all using the auto-increment

feature for the IP address and hostname. If unsure, try adding a single host first and check

the auto-increment does the right thing before adding a group of hosts.

One easy way to add groups of hosts is to power them on one at a time with a short delay (say 30 seconds). The hosts will then appear in the Unknown MACs table in the order they were powered on and can be added as a group with the click of a single button.

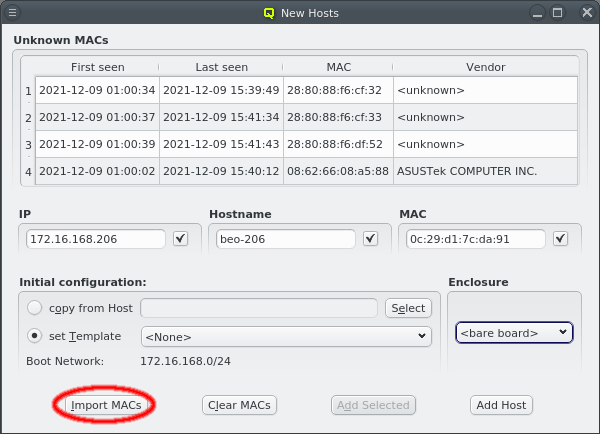

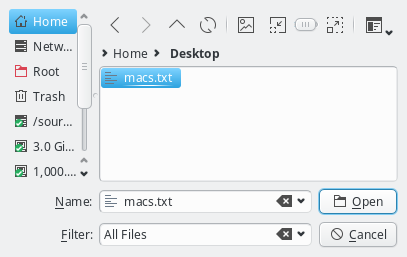

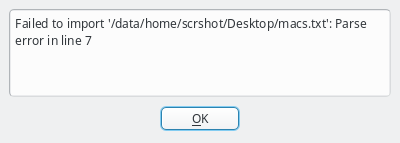

Another option is to import a list of mac addresses from a file by clicking Import MACs. Network switches with a management interface often have an option to list the MAC addresses for each port, so you could capture this list and save it in a file. The file might need some editing to conform to the syntax qluman-qt expects, which is as follows: Lines starting with an '#' and empty lines are treated as comments. Everything else must start with a MAC address in the standard hexadecimal notation using ':' as separator. Any text following the MAC address is displayed in the comment column after importing. Example (see also the corresponding screenshot):

# Rack 1 02:00:00:00:01:01 # node 1 02:00:00:00:01:02 # node 2 # Rack 2 02:00:00:00:02:01 02:00:00:00:02:02 02:00:00:00:02:03

In case the file can not be parsed an error is shown with the line number at which parsing failed. Otherwise the MAC addresses will be shown in place of the unassigned MAC addresses detected by the DHCP server. Adding single hosts or groups of host from the list works the same way as with the detected MACs as described above. Clicking the Clear MACs button clears the imported MACs and returns to the list of MACs detected by the DHCP server.

At the bottom of the window a Host Template can be selected that will be used as the default for new hosts. Most of the time, no additional configuration is needed for a new host. As an alternative way to make settings for the new hosts, one can select an existing properly configured host and choose to copy its settings to the new ones.

5.2. Configuring Hosts

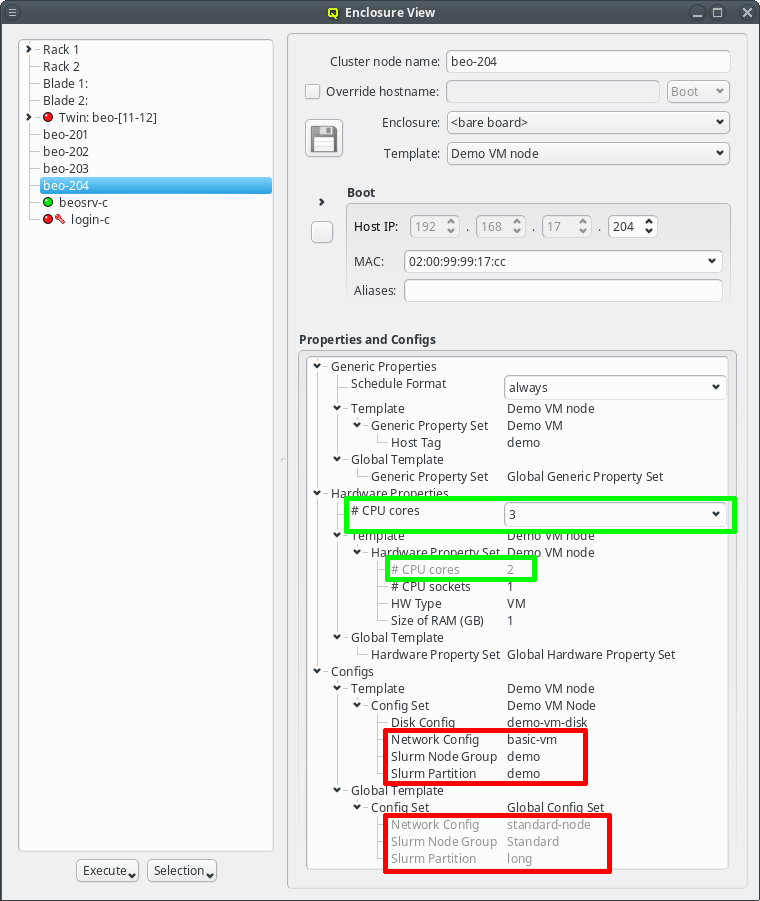

5.2.1. Four Levels of configuration

The configuration of a host results from the assignment of different types of properties and

Config Classes to it. A property is always a key + value pair. They are further divided into

Generic Properties and Hardware Properties. Generic/hardware properties and config classes

can be individually assigned to a host. This is the configuration level with highest priority,

meaning that such an assignment will always be effective.

They can also be used to define Generic Property Sets, Hardware Property Sets and Config

Sets. This is simply a means of grouping them together, so they can be used as a single

entity. These sets may also be individually assigned to a host. This is the configuration level

with second highest priority.

The third level of configuration are Host Templates. A Host Template consists of exactly one

Config Set, one Generic Property Set and one Hardware Property Set.

The fourth and most generic level of configuration is the Global Template. It applies to all

hosts in the cluster and consists of the Global Generic/Hardware Property and Config Set. In

principle, the latter are just like any other set, with the one difference that they always

apply to all hosts. This is useful when defining a base configuration for a cluster.

If a generic/hardware property or config is defined and assigned to a host in a particular hierarchy level, it overwrites the corresponding assignment(s)/value(s) from all lower priority levels. This introduces a lot of flexibility while retaining consistency.

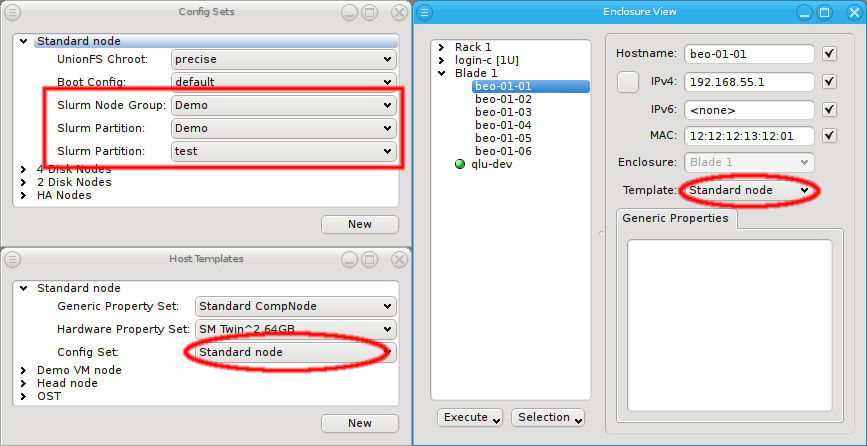

For example it allows setting a property in the Global Template that is right for most hosts

and then replace it in a particular Host Template being used for a few exceptional hosts. The

screen-shot shows two examples of this strategy: The assigned Host Template of the chosen host

replaces the configs for Net Config, Slurm Partition and Slurm Node Group of the Global

Template (red box) and the number of CPU cores is directly assigned replacing the value from

the Host Template (green box).

|

The tree representation of a host’s properties/configs in the Enclosure View clearly indicates overwritten assignments by displaying them in a light gray. |

5.2.2. Generic and Hardware Properties

Hardware Properties

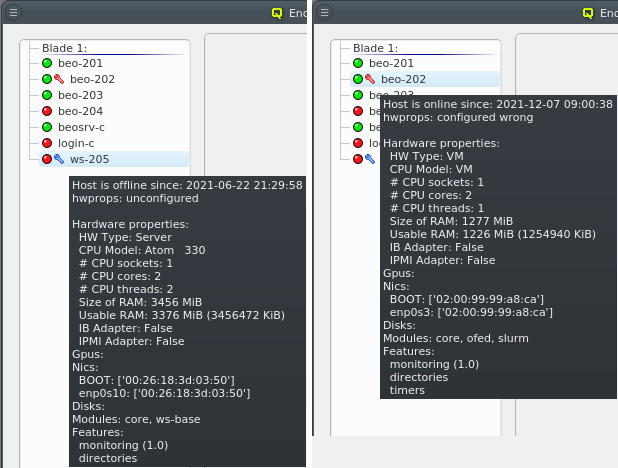

Hardware Properties are used to describe the hardware of a host. Among others, hardware

properties like the amount of RAM or number of CPU cores are used to configure the Slurm

workload manager, so jobs can be assigned to the desired hosts. Others, like e.g. the HW type,

are purely informational and might be used for inventory management.

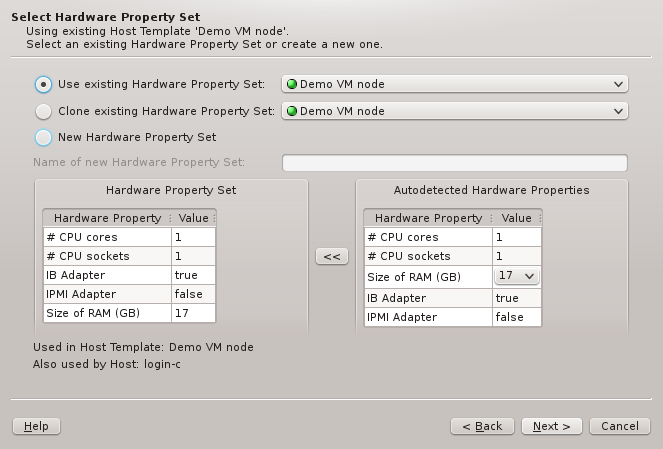

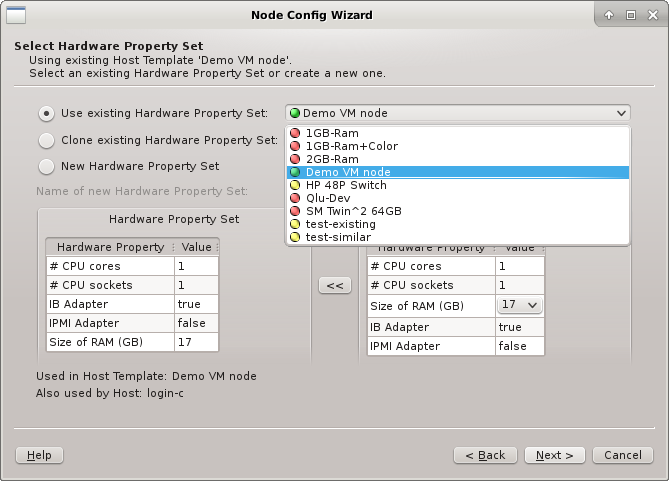

Hardware Properties usually don’t have to be entered manually. When a host boots, its basic

hardware properties are detected and reported to the head-node. They are shown as a tool-tip in

the Enclosure View when hovering over a host. Hosts that have none or only partially

configured Hardware Properties will show a blue wrench, while hosts for which the configured

Hardware Properties do not match the detected ones will show a red wrench. The

Hardware Wizard uses the reported values to

generate the correct configuration for a set of hosts and is the best way to create their initial

configuration. It can also be used to correct the configuration of hosts in case their hardware

was changed.

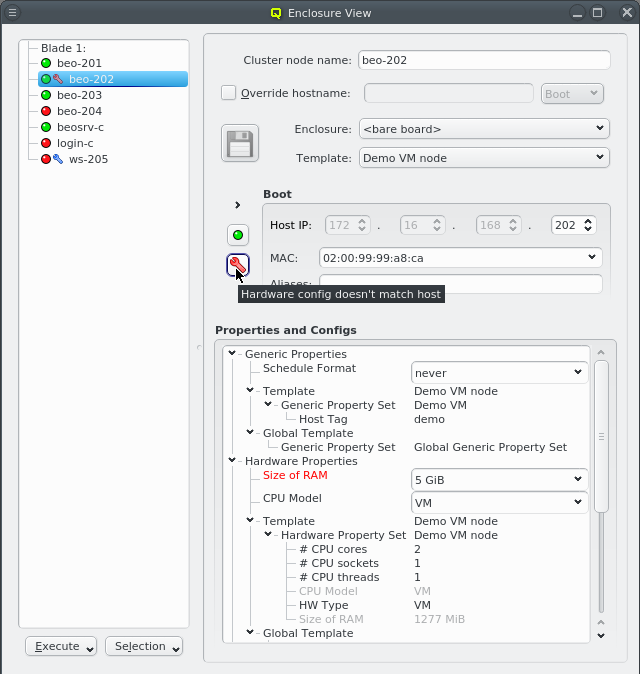

Selecting a node in the Enclosure View tree displays its configuration info on the right hand

side. This includes the red or blue wrench if there is a problem with the configuration of

Hardware Properties or GPUs. In the latter case, a few more details about the GPUs are

included. Individual Hardware Properties that don’t match will be shown in red in the

Properties and Configs tree and their tool-tip will show the detected value.

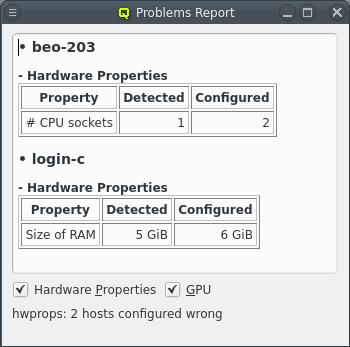

5.2.3. Problems Report

Using a host entry’s tool-tip or selecting the host to get further details about a configuration problem works only for one host at a time. With many misconfigured hosts, an easier way to get an overview of problems is by opening the Problems Report from the Manage Cluster menu. This will show the hardware and GPU configuration problems of all nodes in a list, displaying both the detected and configured values for each problematic GPU or hardware property.

At the bottom of the dialog an overall host-count of the problem is shown together with two check-boxes that allow to toggle the display of problems with Hardware Properties or GPUs selectively.

Generic Properties

A property that is not hardware related is called generic. Generic Properties can be

configuration options, like OpenSM Host, or purely informational, like Paid by. While

hardware properties are meant to be more rigid, typically with a configurable set of fixed

values, generic properties are more flexible and can be defined at will. Generic Properties

are also not necessarily unique, making it possible to assign multiple values for a single

generic property. This is useful e.g. to put hosts in multiple groups for dsh/pdsh (via the

'Host tag').

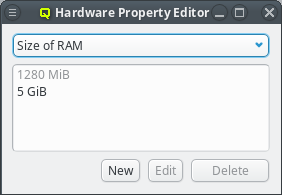

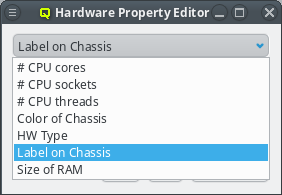

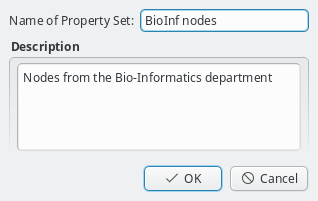

Generic/Hardware properties and their values are managed by the corresponding Property

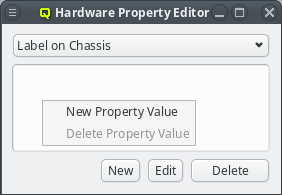

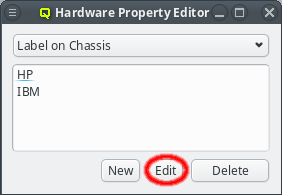

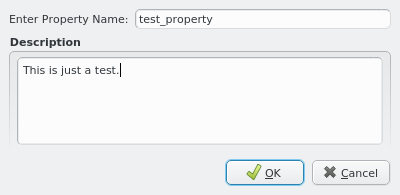

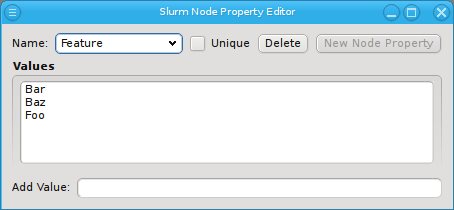

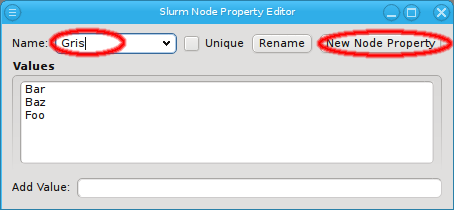

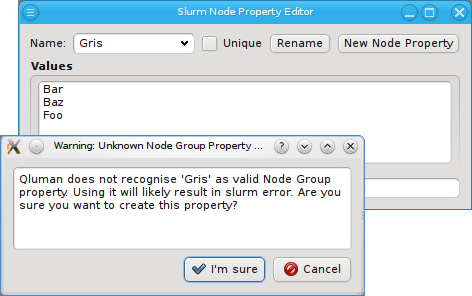

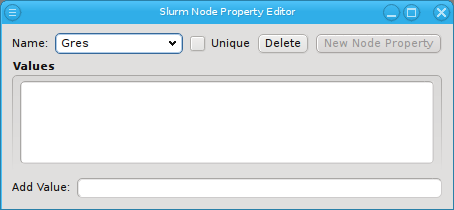

Editor. It is reachable from the Generic/Hardware Property Sets windows. A new

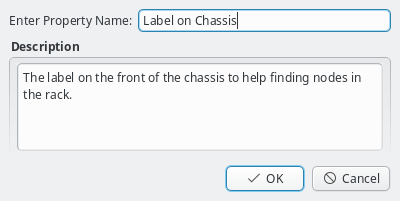

Generic/Hardware property can be created by clicking the New button. Each

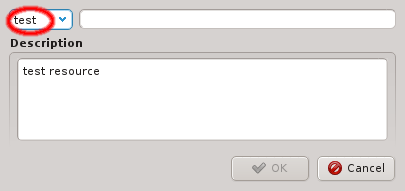

property has a name and a description. The name must be unique and the Ok button

will only be enabled if that is the case. The description is optional and will be shown as a

tool-tip when hovering over the property in other windows.

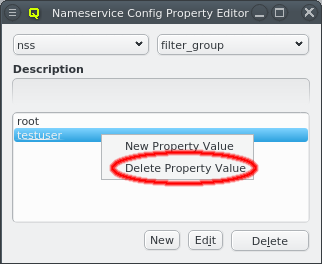

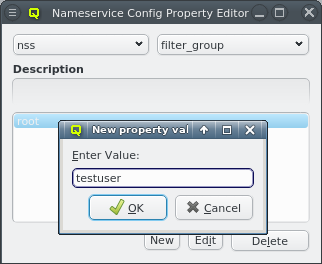

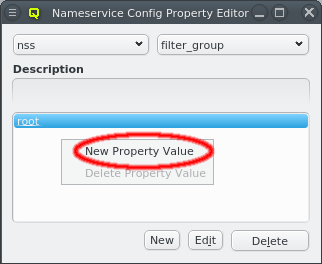

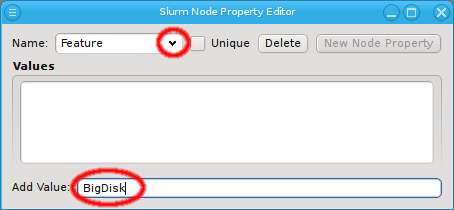

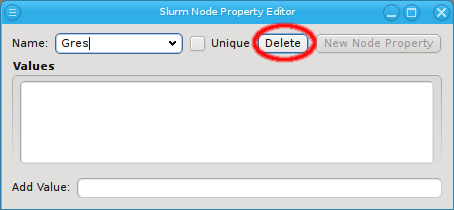

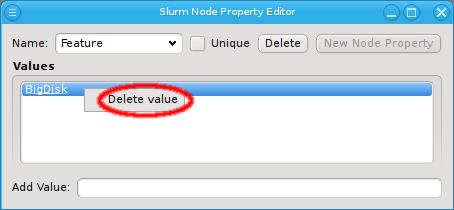

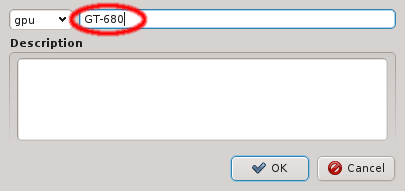

Once a property exists, values can be added to it. To accomplish this, the property must first be selected from the drop-down menu at the top of the Property Editor. Then values may be added or deleted using the context-menu.

With the exception of essential pre-defined properties, a property can be edited by clicking

the Edit button. Examples of properties that can not be edited (or deleted) are

the ones used to generate the slurm.conf. Changing their name would lead to errors in the

generated file. Similarly, some property values can not be deleted and will be shown

grayed out.

5.2.4. Property/Config Sets

Generic/hardware sets and config sets simplify the configuration of hosts substantially. They

are a means of grouping generic/hardware properties or configs, so they can be used as a single

entity and be assigned to individual hosts directly or via a Host Template. There is also a

global set of each type which is always assigned to every host. The generic/hardware and

config sets can be managed by opening the corresponding window from the Manage Sets menu.

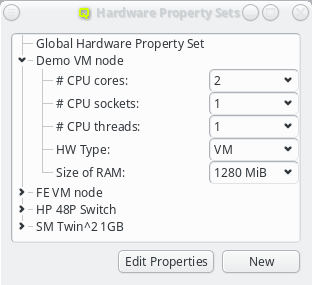

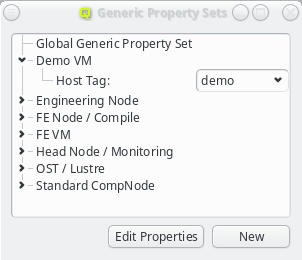

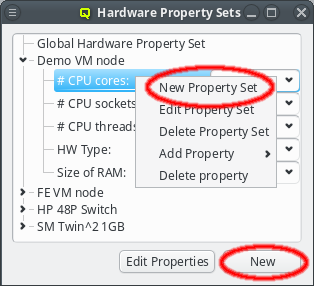

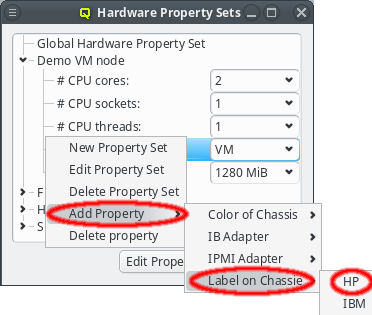

Hardware/Generic Property Sets

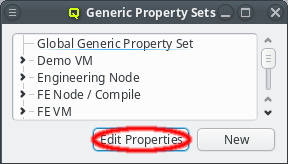

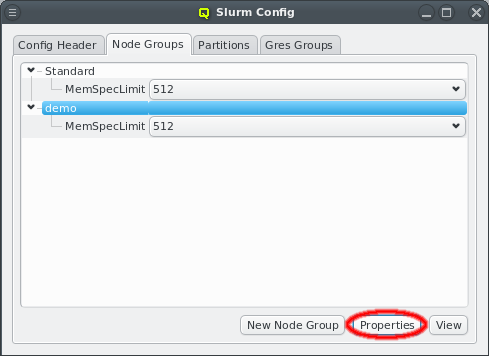

Property sets are shown in tree form with the name of each set as the top level item and the key/value pairs of assigned properties as children. The first entry is always the global property set. A new property set can be created by clicking the New button or from the context menu. An existing property set can be renamed or the description changed by selecting Edit Property Set from the context menu. Deletion of an unused property set is also possible via the context menu.

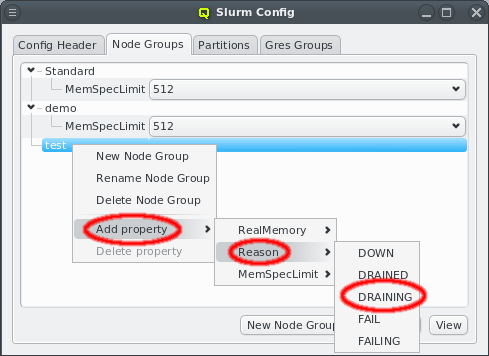

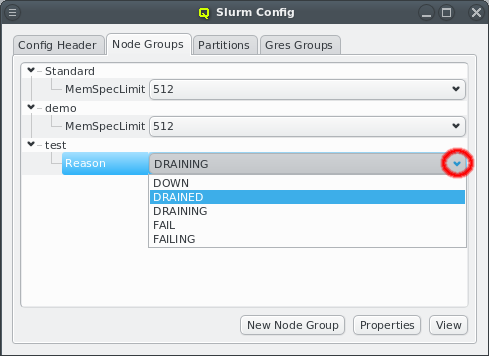

New properties can be added to a property set by opening the Add Property sub-menu in the context menu: Select the desired property and its value. Only properties and values that don’t conflict with already included properties or values are shown in the sub-menu. Already included properties may be changed in two ways: Either a different value is selected from the drop-down menu or the value is edited directly, in which case the change must be confirmed by pressing Enter. If the entered value for the property doesn’t exist yet, a new value is automatically created. Another way to create new values and the only way to delete them is through the property editor by clicking Edit Properties.

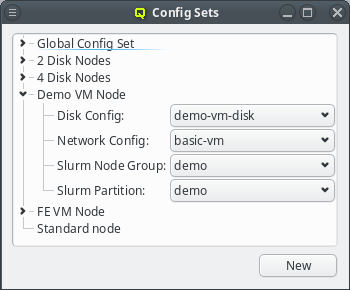

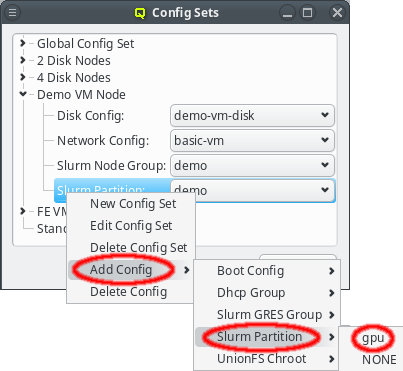

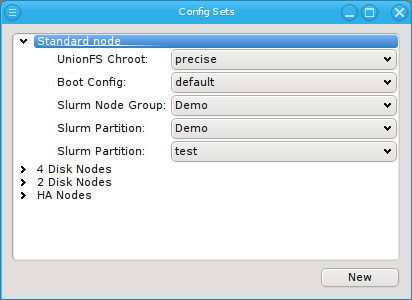

Config Sets

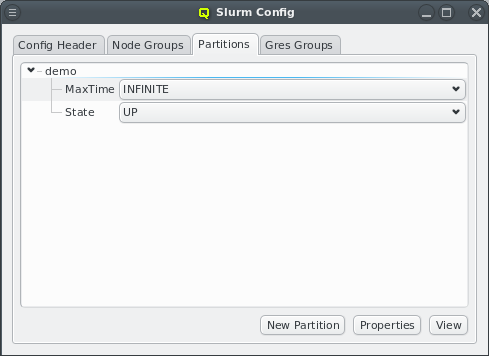

Config sets are managed the same way as property sets. They are displayed as a tree with the global set at the top and the key/value pairs of assigned config classes as children. Creating a new config set can be done by clicking the New button or from the context menu just like for property sets. Adding and removing config classes to/from a set also works the same way.

Unlike property sets though, the list of usable config classes is fixed and values can not be

edited directly from this window. Config classes require a more

complex and individual config. So for each config class there is a menu item in the

Manage Configs menu. Depending on the type of config class, one or more values

for that class can be assigned to a Config Set (slurm partitions are an example where

multiple values are assignable).

|

The sub-menu only shows config classes and values that can be added without conflicting with already assigned entries. |

5.2.5. Assigning a configuration to hosts

As mentioned in the beginning of this chapter there are 4 levels of configuration. Going from

lowest to highest priority these are: Global Template, Host Template, directly assigned

sets and directly assigned properties and configs.

Global Template

The Global Template is the most generic way to assign configurations to hosts. It applies to

all hosts in the cluster and consists of the Global Generic/Hardware Property and Config Set.

Host Templates

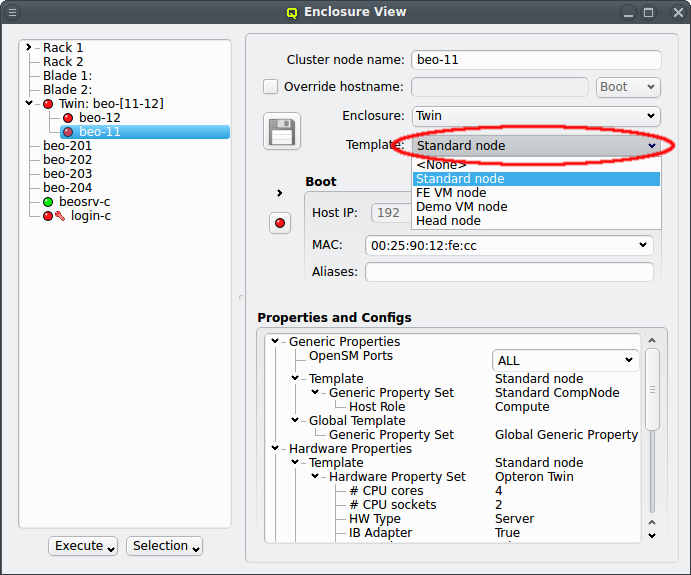

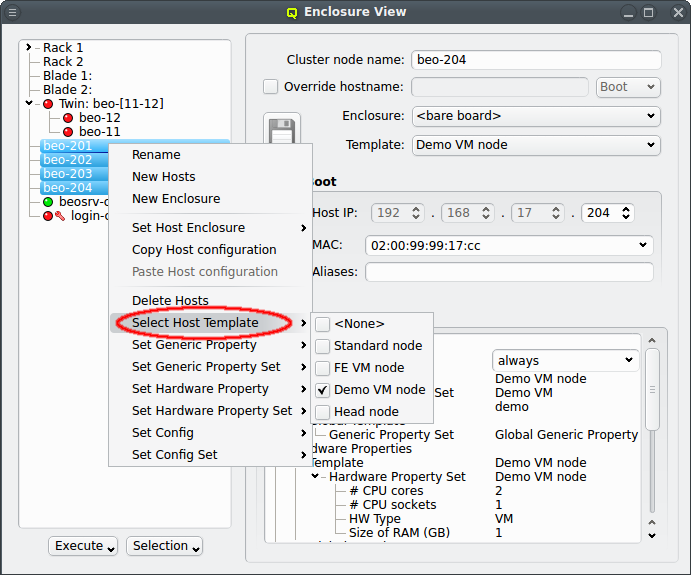

The next level of configuration is the Host Template. When a correct Host Template exists,

a host can be configured by selecting the desired template in the Enclosure View window. For

a single host, this can be done by selecting it in the tree view. This brings up the host

information on the right and a template can be selected from the drop-down menu. To configure

multiple hosts, you would select them in the tree view and choose a Host Template from the

context menu. The check-marks in the sub-menu indicate which Host Templates are currently

assigned (if any) for the selected nodes. This action will override the previous assignment for

all selected hosts.

Alternatively, especially when no correct Host Template exists yet, the Hardware Wizard can be used to to create a new or modify an existing Host Template and

assign it to hosts.

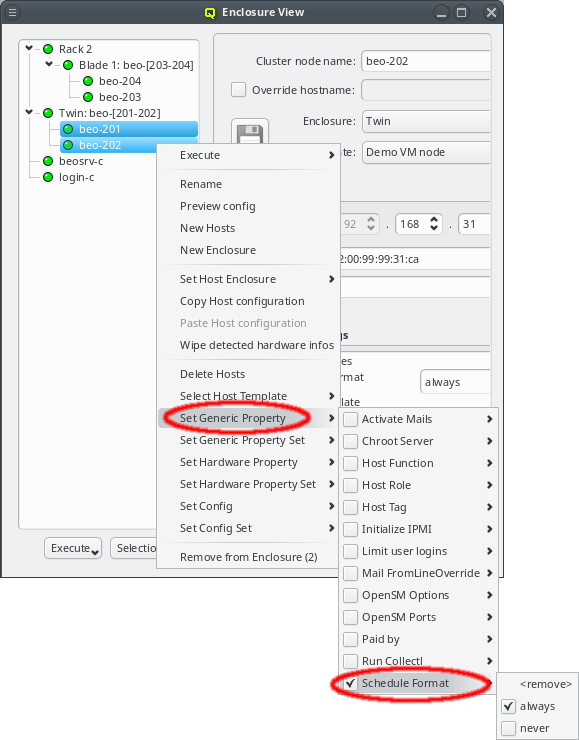

Directly assigned properties, configs and sets

Generic/hardware properties, configs and their corresponding sets can also be individually assigned to a host. Such assigned properties take precedence over ones of the same type selected through the Host or Global Template. This is useful when a particular (or a few) node(s) require a special property/config (set) while everything else should be set the same as for other hosts with the same template.

|

By default, every new host has the generic property |

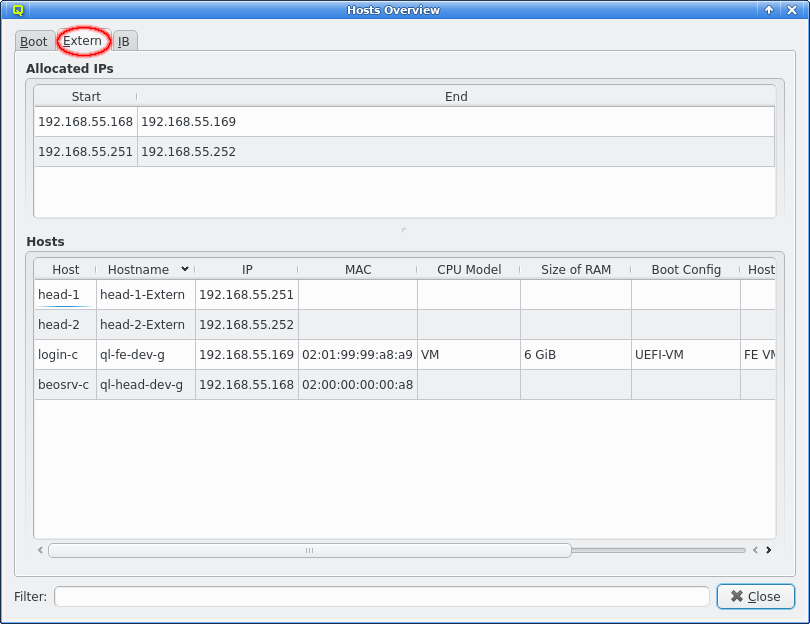

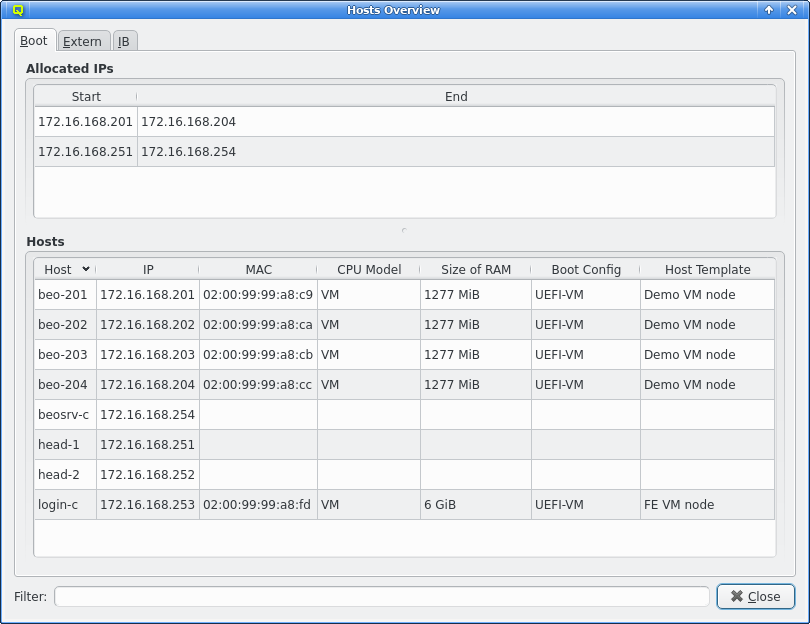

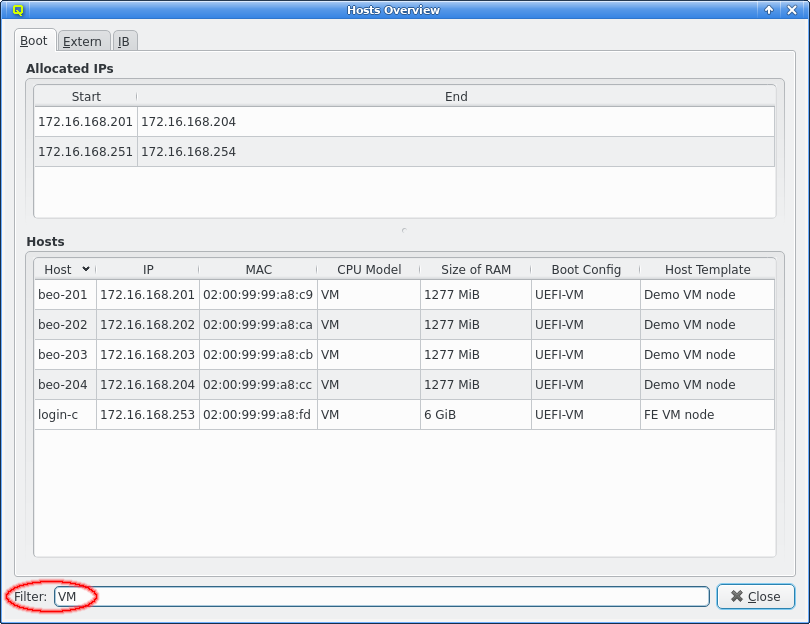

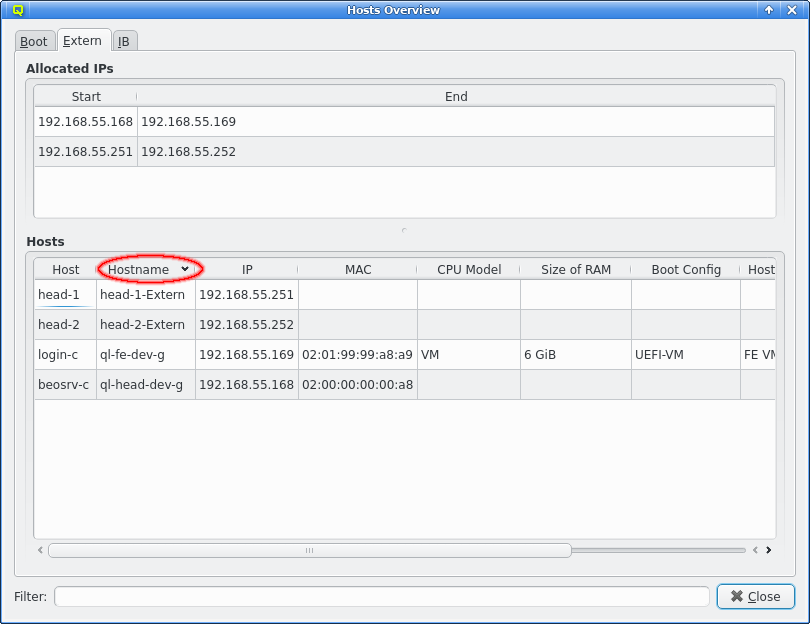

5.3. Host Overview

The Host Overview window provides a tabular overview of all the hosts in a cluster split by networks. Tabs at the top allow to select the network one is interested in. In each tab, the IP ranges allocated in that network are shown at the top and a list of hosts with their most important config parameters below.

The Allocated IPs box at the top always shows all used IPs in the network regardless of the active filter (see below). The addresses are shown as start and end IP for each continuous range of allocated IPs. When planning to add a new set of hosts this can be helpful to find unused IPs in a range and then using them to obtain a more contiguous block of addresses.

At the bottom is the list of hosts with a NIC in the selected network. For each host, its QluMan name, hostname (if overwritten), IP, MAC, CPU Model, size of RAM, GPU (if any), Boot Config and Host Template are shown. Empty columns are omitted, e.g. the Hostname column in the Boot network.

Since the list of hosts for a network can be long, there are two ways to help navigate it: a) The list can be sorted by any column simply by clicking at the respective column header. b) More powerful, an on-the-fly filter can be applied to the lists. By entering a string or Python regular expression into the Filter text-box, the lists will be reduced to lines that contain the entered string or match the regex.

|

Clicking on the header of a new column will retain the previous sorting of rows for which the entries in the newly selected column are identical. This way a list can be sorted by multiple columns. For example selecting the CPU Model column followed by the Host Template column will show hosts sorted by the Host Template column and hosts with the same Host Template will remain sorted by CPU Model. |

6. Hardware Wizard

6.1. Purpose

When setting up new hosts, there are a number of configuration or other settings to be made. They are used to specify their hardware configuration, to determine what OS they should boot and to fine-tune the behavior of applications running on them. All the necessary steps for the desired configuration of the nodes can be done manually and also be changed later through the various dialogs from the main window.

As a convenient alternative, the Hardware Wizard guides you through the necessary

configuration steps with a special emphasis on the hardware configuration. It uses the

auto-detected hardware properties of hosts to suggest their optimal configuration

options. Furthermore, it tries to keep a balance between the available configuration

strategies: Using templates, property/config sets or individual properties/config classes.

6.2. Selecting Hosts

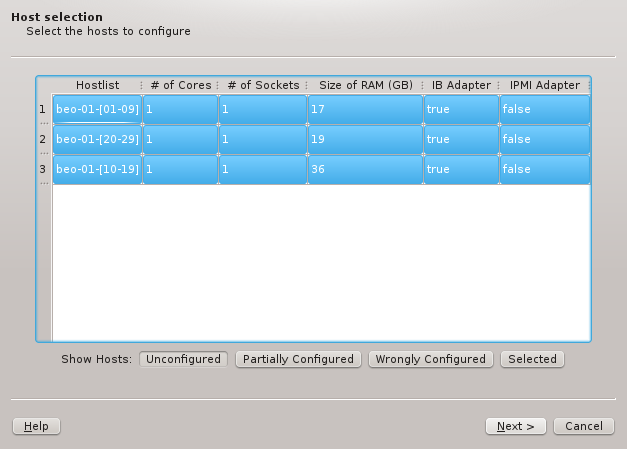

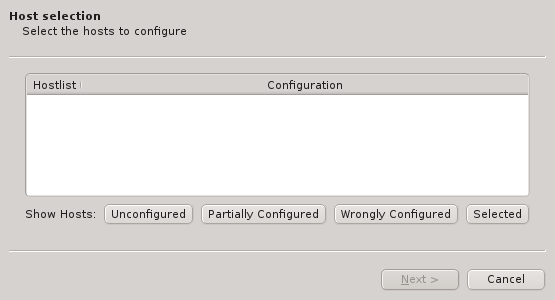

The first step is to select the hosts that should be configured. Initially, the lists of hosts

is empty. One or more of the four buttons at the bottom have to be pressed to pre-select hosts

that should be considered. The Unconfigured button adds all hosts that do not have any

hardware configured at all. A freshly added host without an assigned Host Template will fall

into this category. The Partially Configured button adds hosts that already have some

hardware configured correctly but not all of it. The Wrongly Configured button adds

hosts, where the configured hardware properties do not match the hardware detected at boot,

e.g. when nodes have been updated with more ram. Finally, the Selected button adds hosts,

that have been selected in the enclosure view, including hosts that are configured correctly

already.

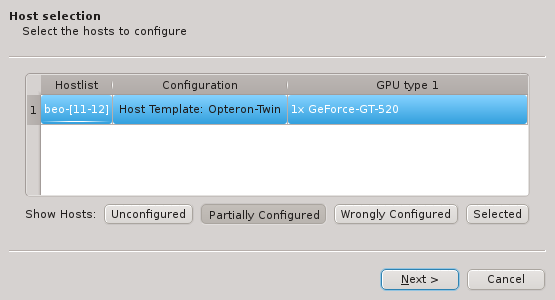

Once one or more of the buttons are pressed, the affected hosts will show up in the table. To

keep things compact, hosts with identically detected hardware are grouped together and shown in

hostlist syntax. By default, all shown groups are selected and will be configured using a

single Host Template and therefore single Hardware Property, Generic Property and Config

Set. The possible differences in hardware configurations within the list of selected hosts

will be handled by the wizard with the per host settings. In case all the groups shouldn’t

use the same Host Template, groups may be selected or deselected individually and the

remaining ones can be configured by running the wizard again later. Groups of hosts with

identical hardware can’t be split up though. If this is required, select the hosts individually

in the Enclosure View and use only the Selected button. Once the desired groups

of hosts have been selected click Next to continue configuring them.

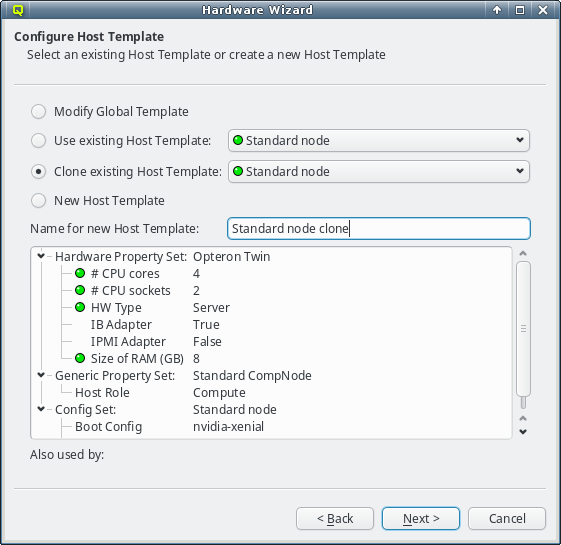

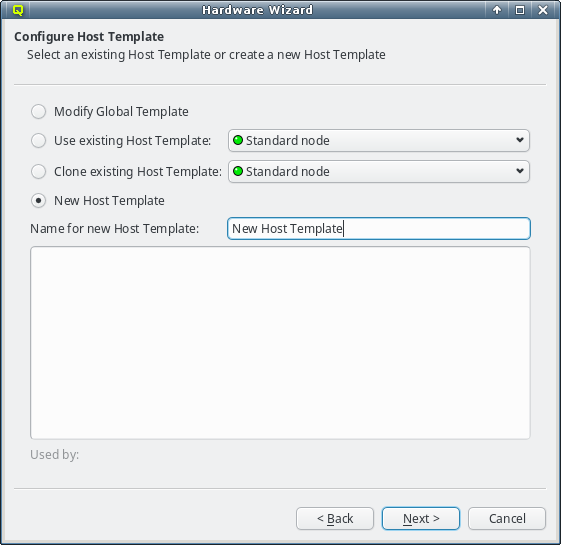

6.3. Configuring the Host Template

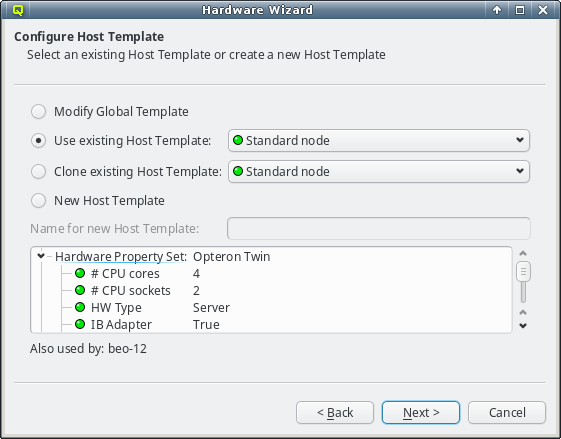

As explained in Configuring Hosts the major part of a hosts

configuration is derived from a Host Template. One of the wizard’s goals is, to find an

existing Host Template with a Hardware Property set that optimally matches the detected

hardware for at least some of the selected hosts. If such a Host Template is found, it will

be pre-selected and the Use existing Host Template choice will be active.

The settings inherited from this template, are shown underneath in tree format and below the property tree, a list of hosts, that currently use the selected template, is shown for informational purpose.

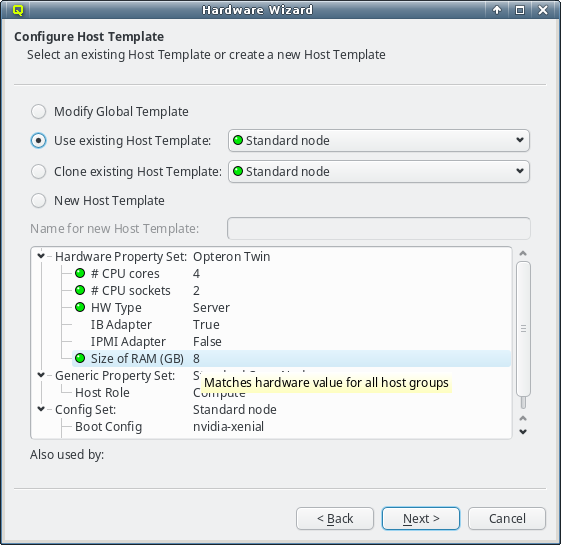

The individual properties belonging to the Hardware Property Set of the selected Host

Template are color-coded, to show how well they fit the detected values of the host groups

being configured. Hovering over a hardware property brings up a helpful tool-tip explaining the

coloring. A green bulb indicates, that the property matches the detected value for all hosts. A

yellow one, that it matches some but not all hosts. This happens, when some of the selected

hosts have different hardware and means that the selected template is still a good fit. A red

bulb indicates that the value matches none of the hosts and is a bad fit. Such a property value

may be changed later in the follow-up pages or a different Host Template can be selected

right-away.

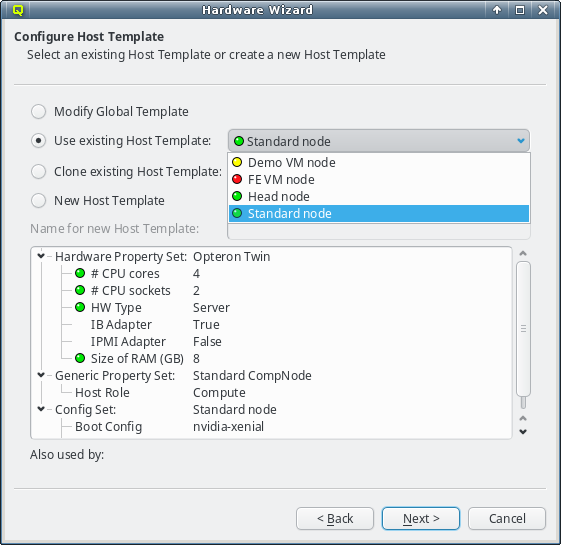

In case the pre-selected Host Template is not the desired choice, a different one can be

selected from the drop-down menu. The choices are again color-coded to indicate how well they

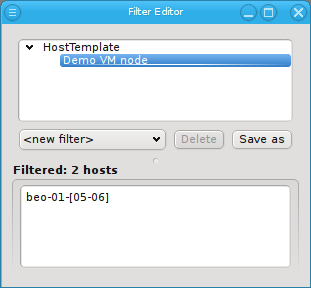

match the detected properties of the selected hosts. Here a green bulb means that the Host

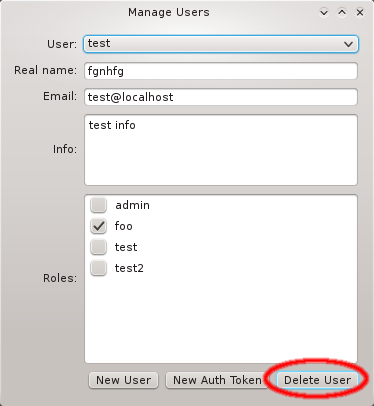

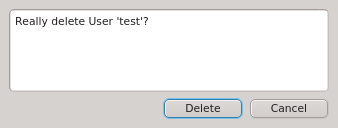

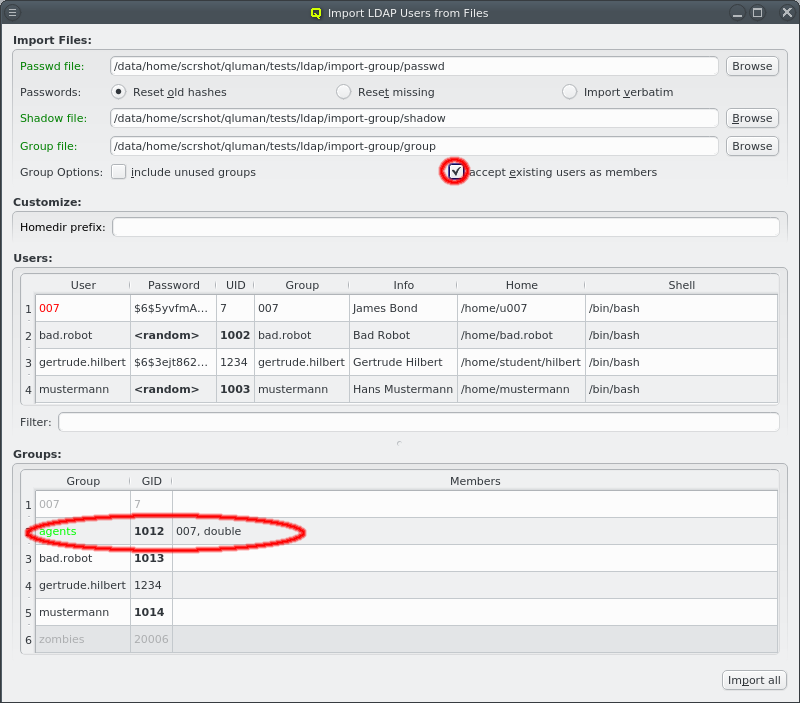

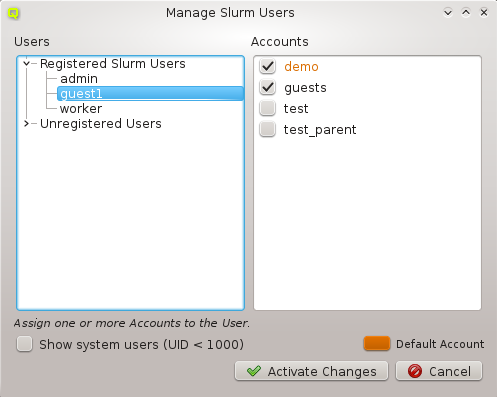

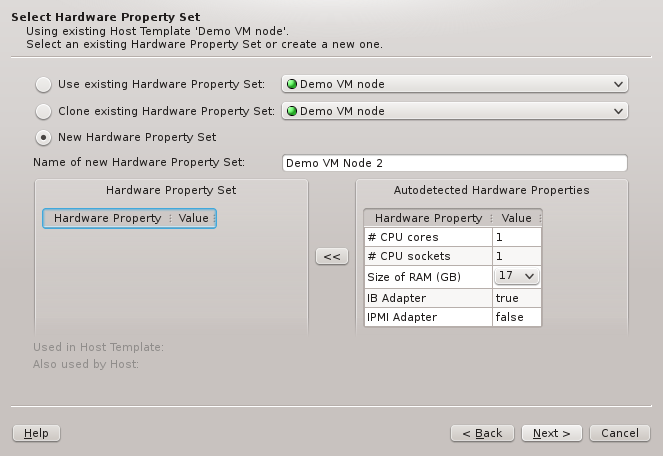

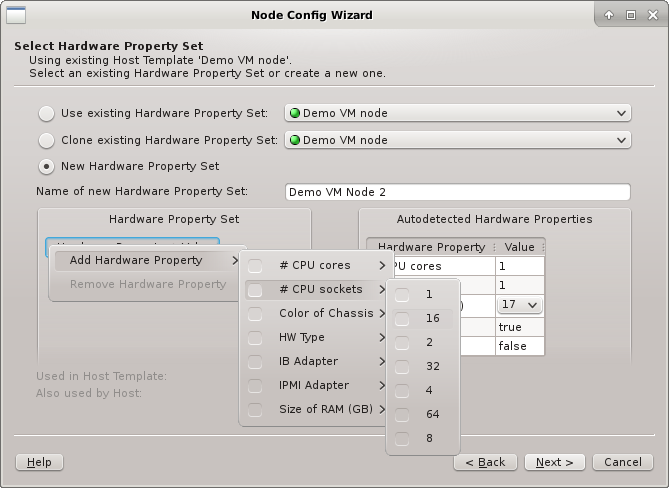

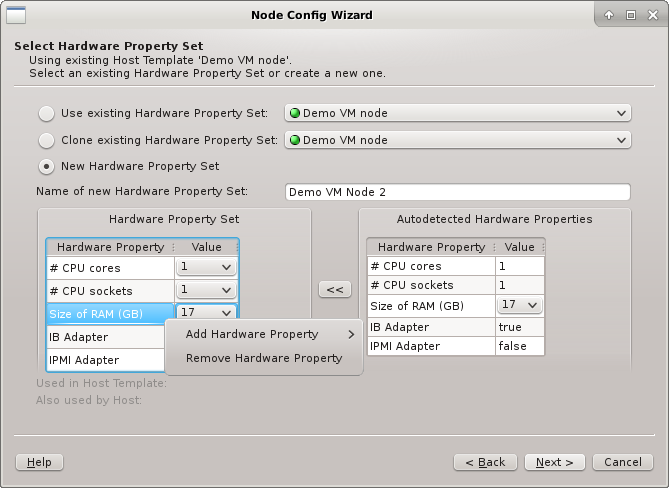

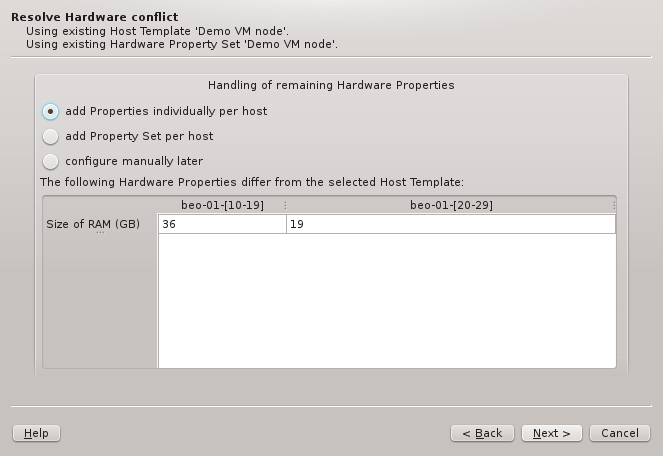

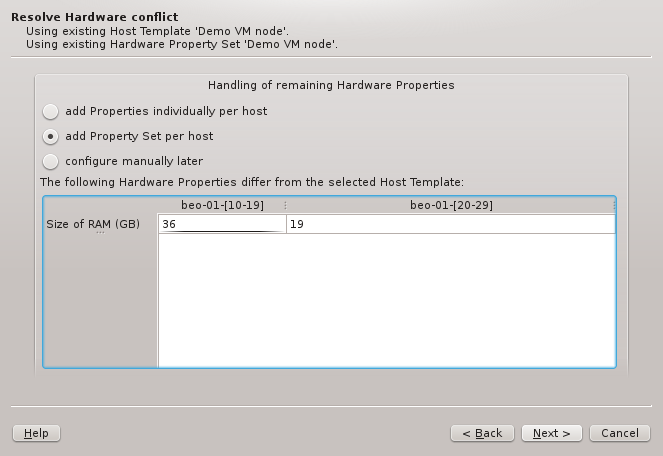

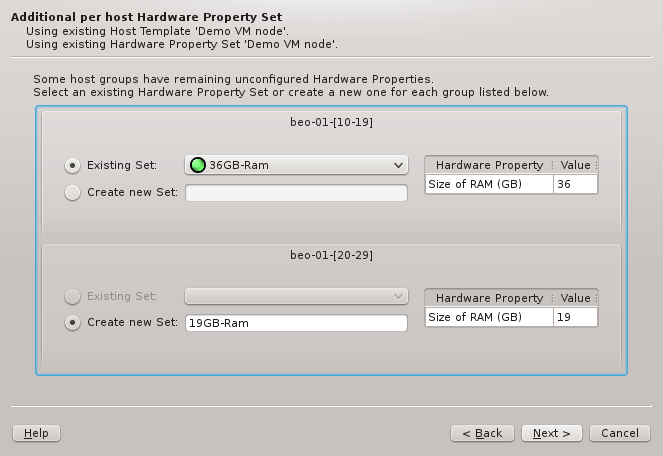

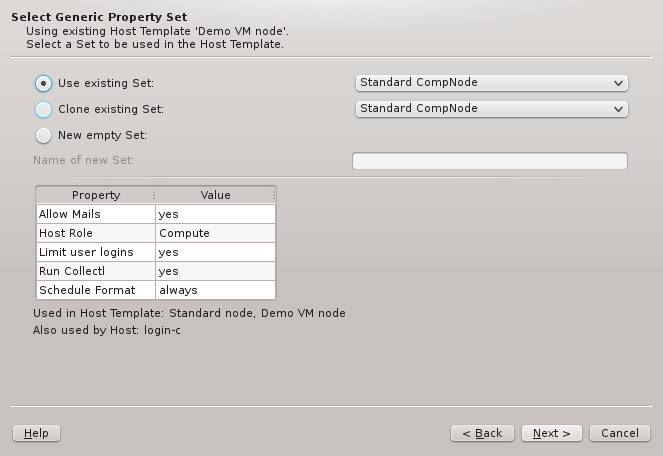

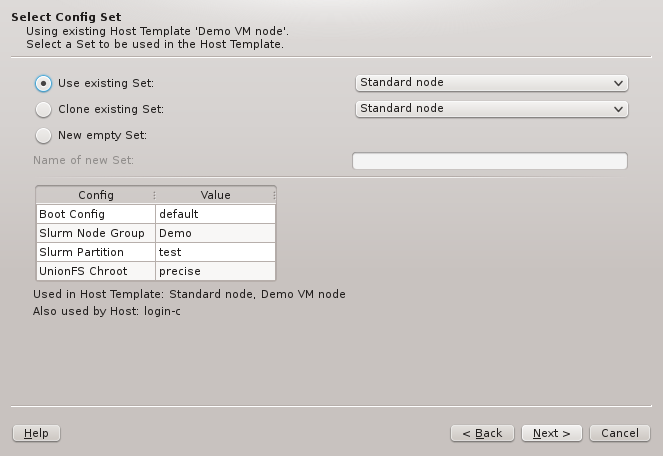

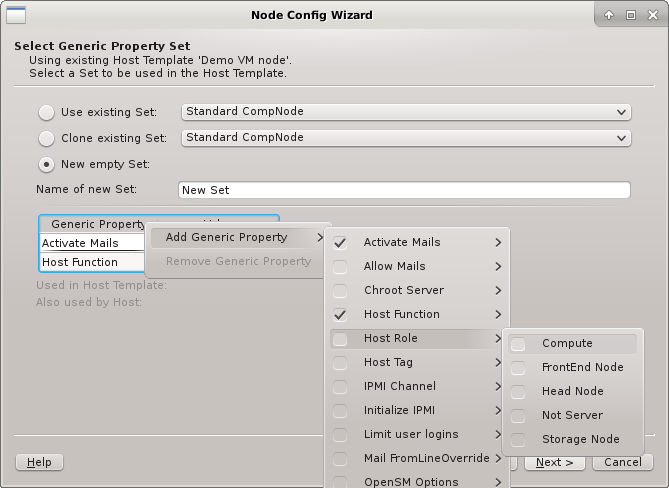

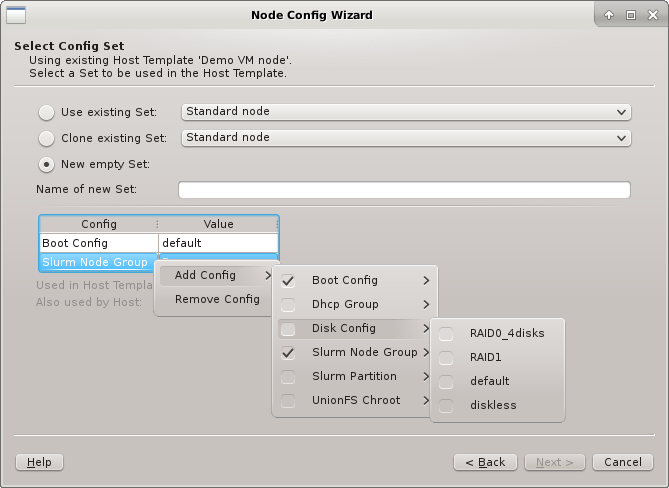

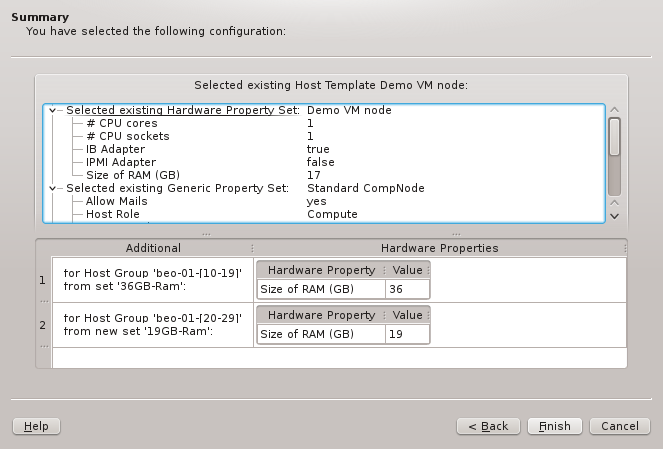

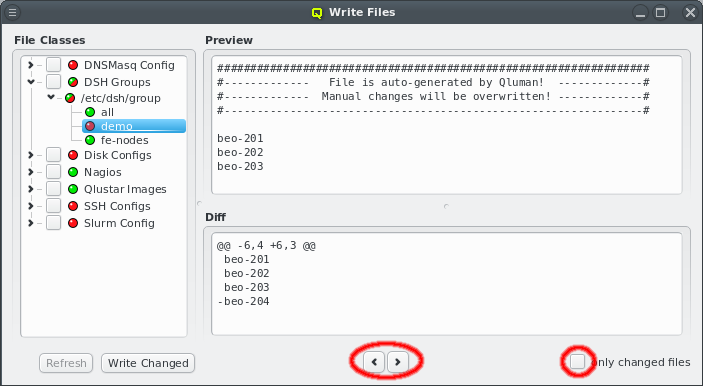

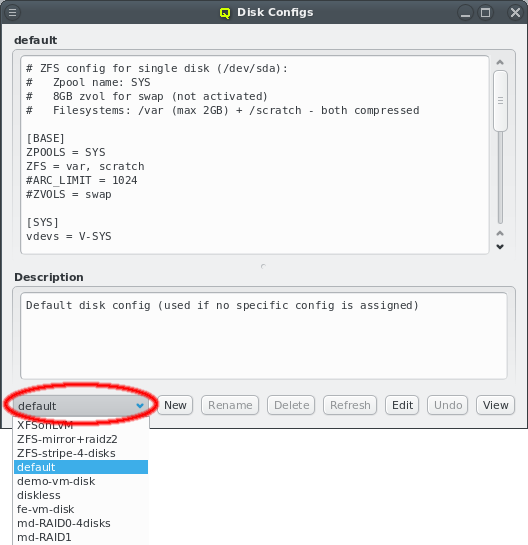

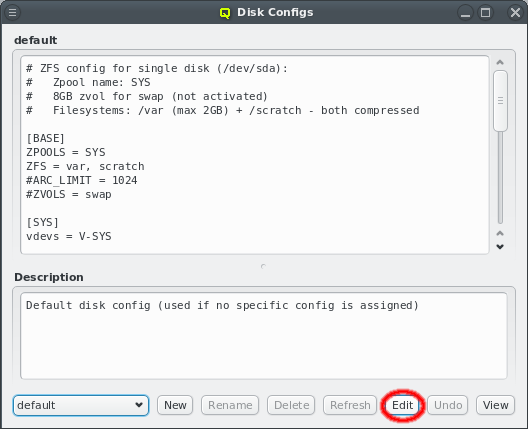

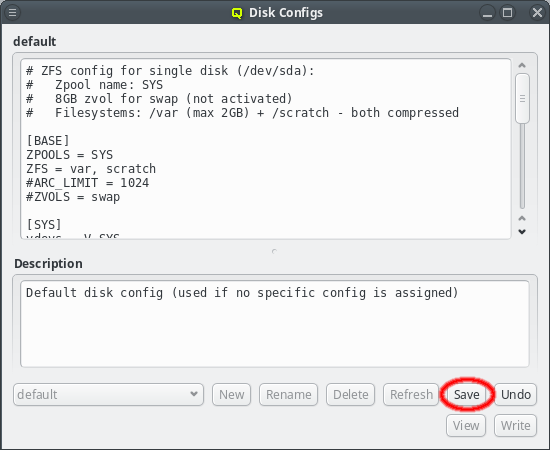

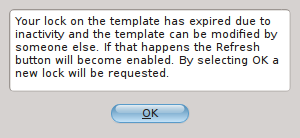

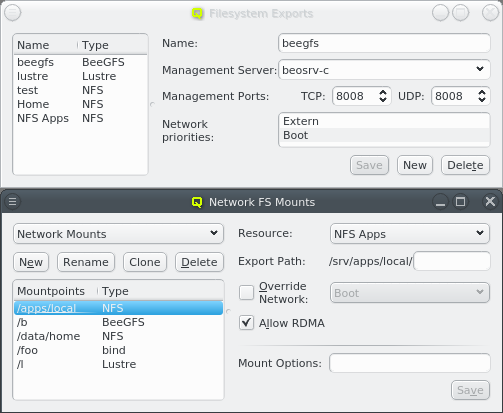

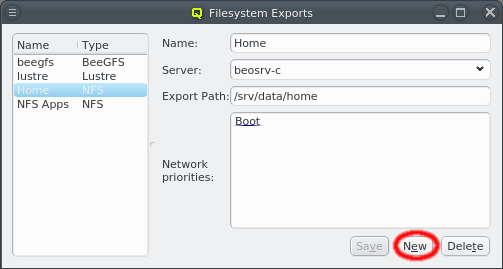

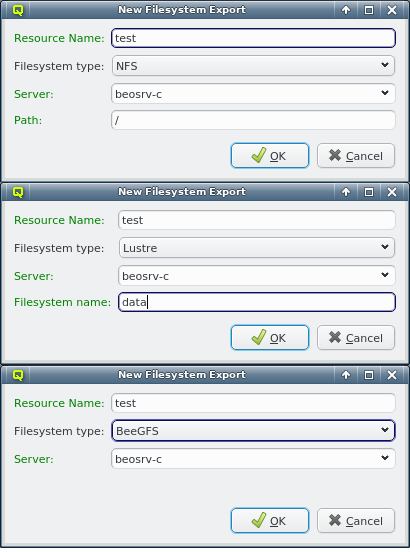

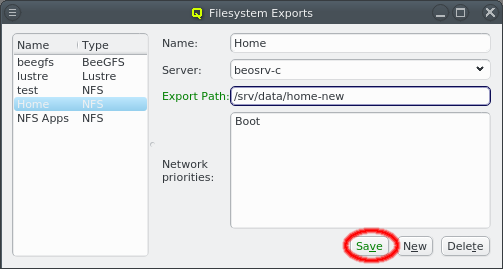

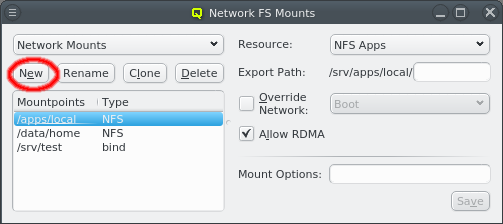

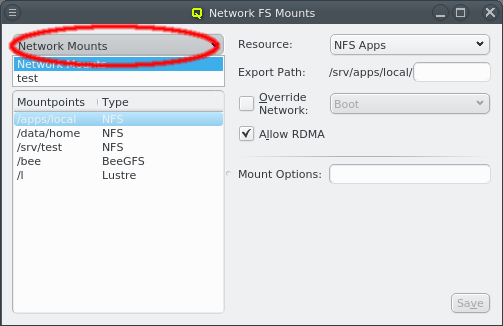

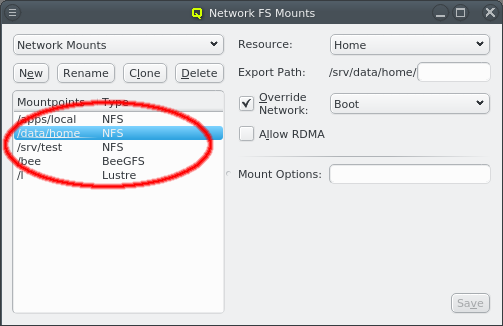

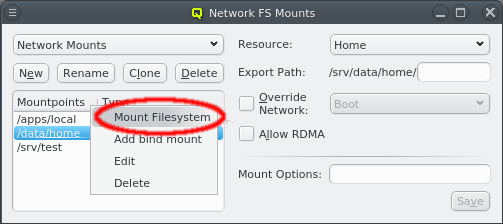

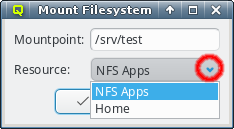

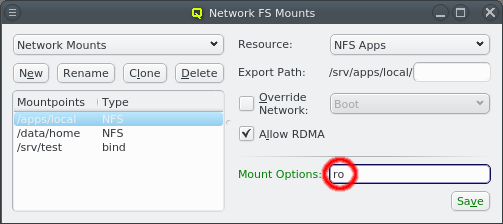

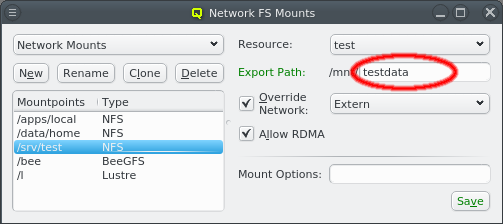

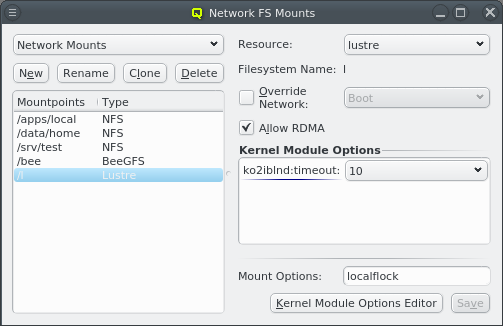

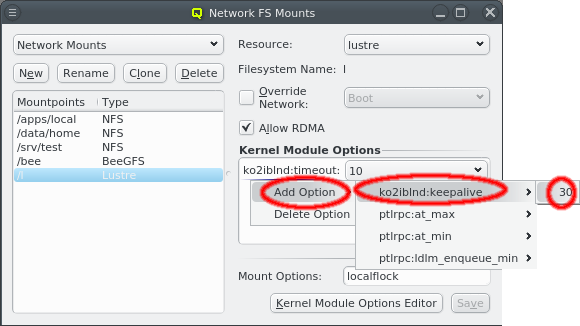

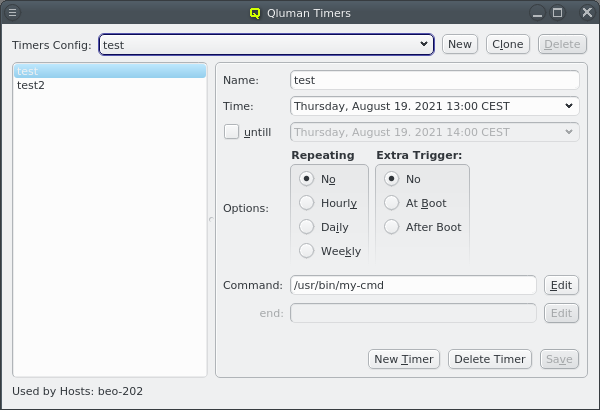

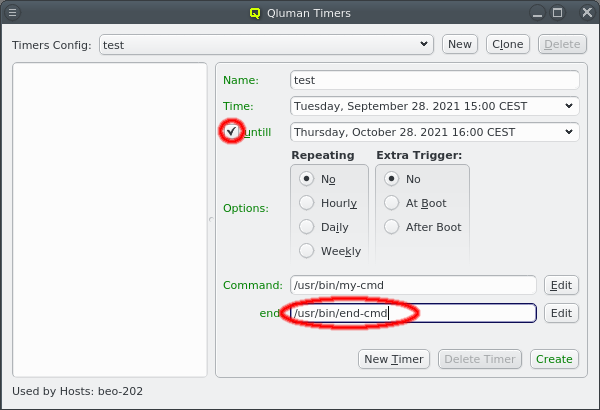

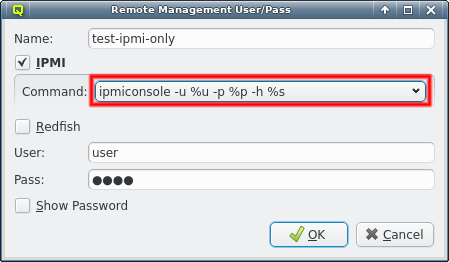

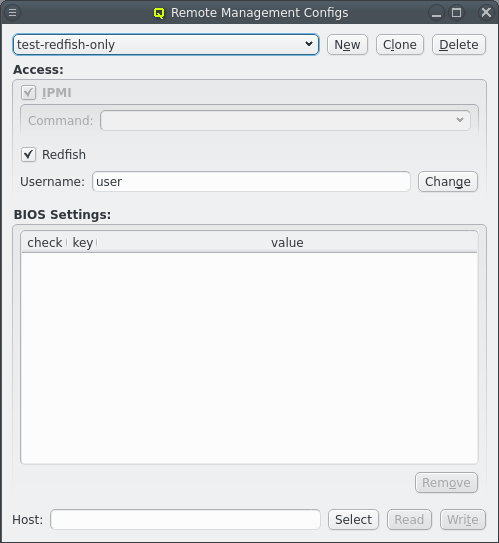

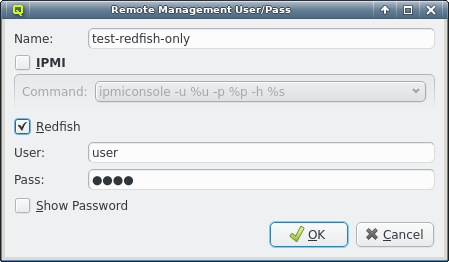

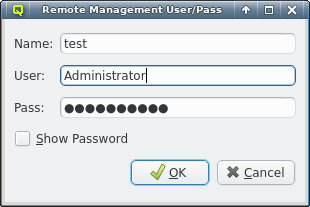

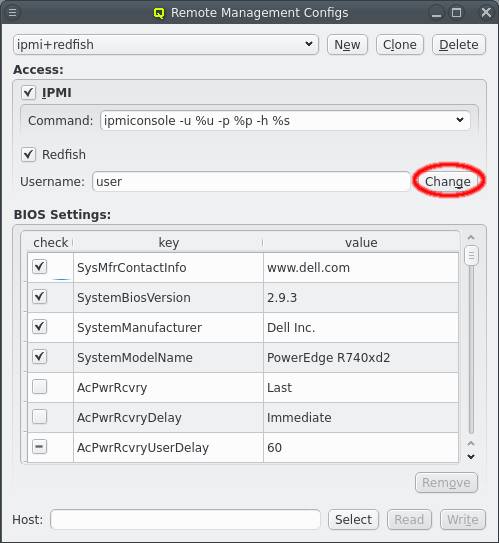

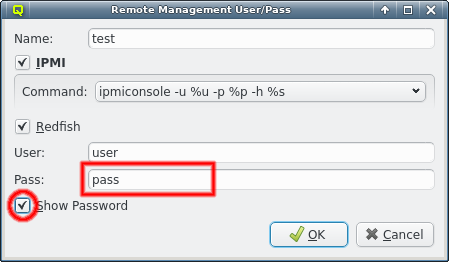

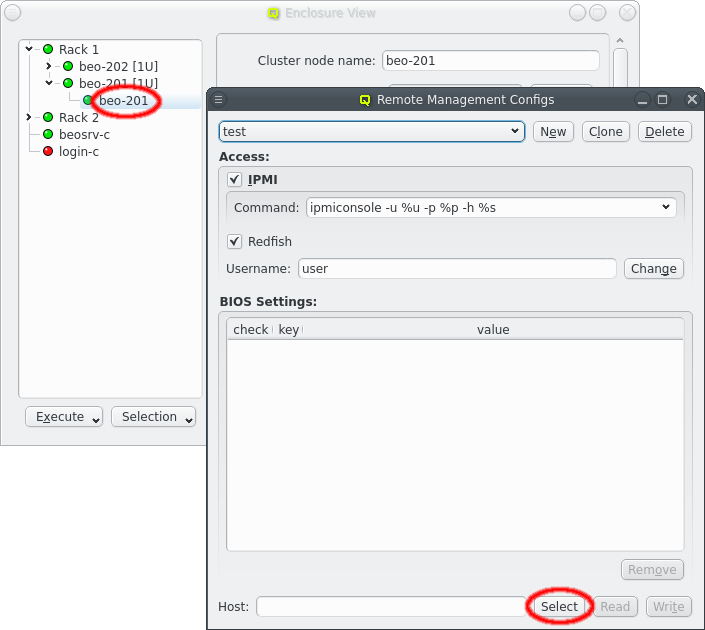

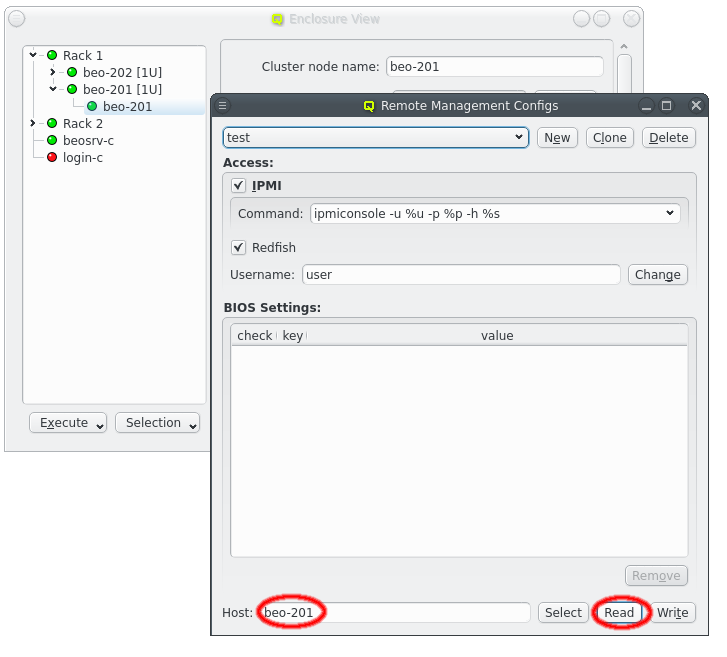

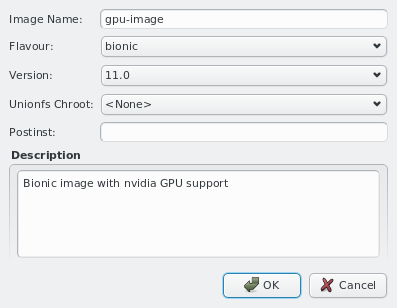

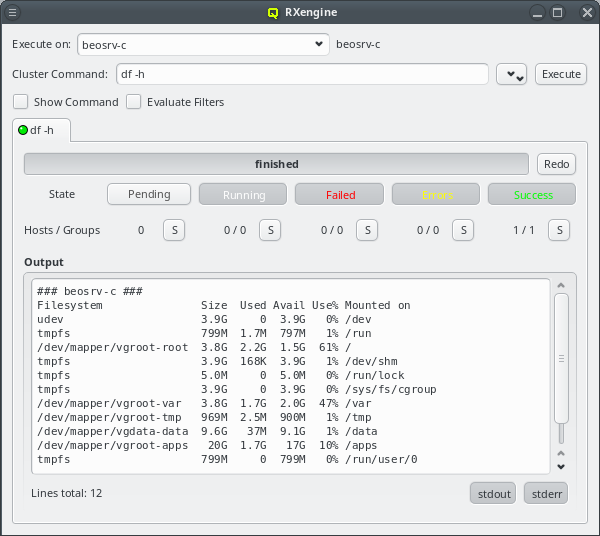

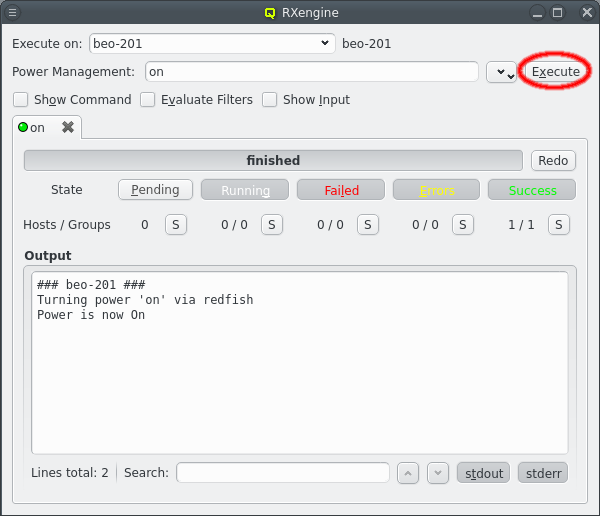

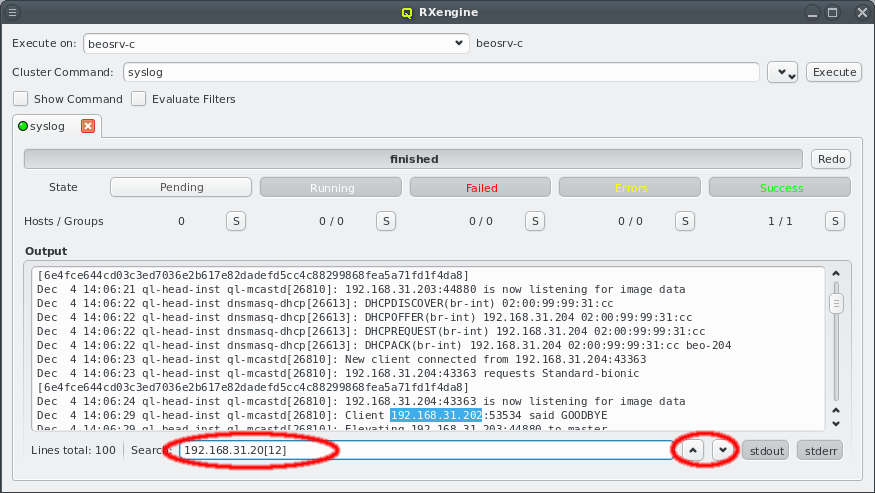

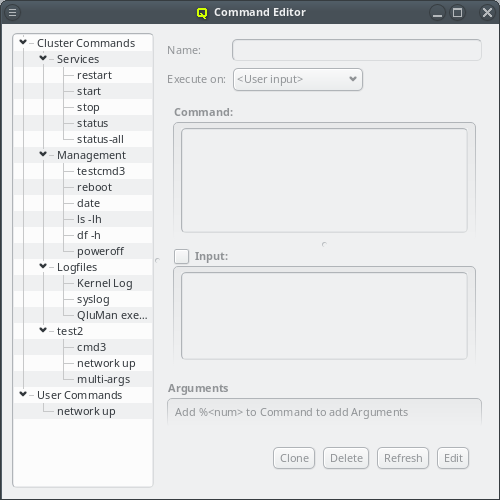

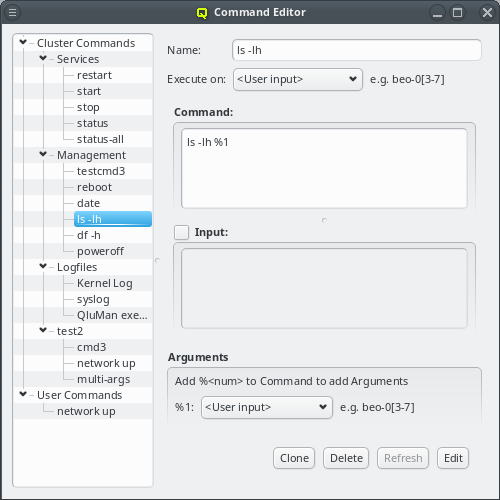

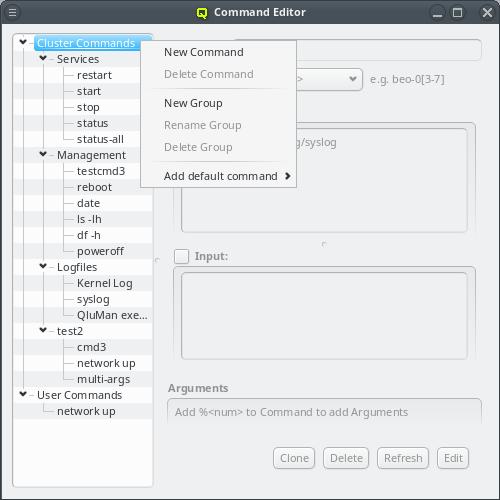

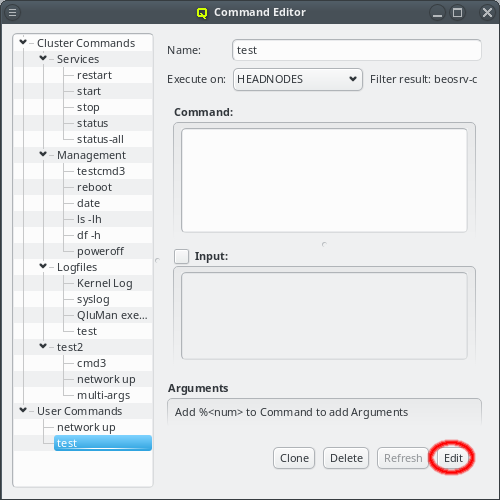

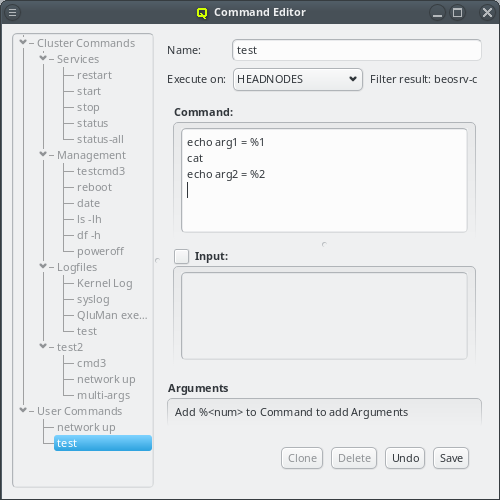

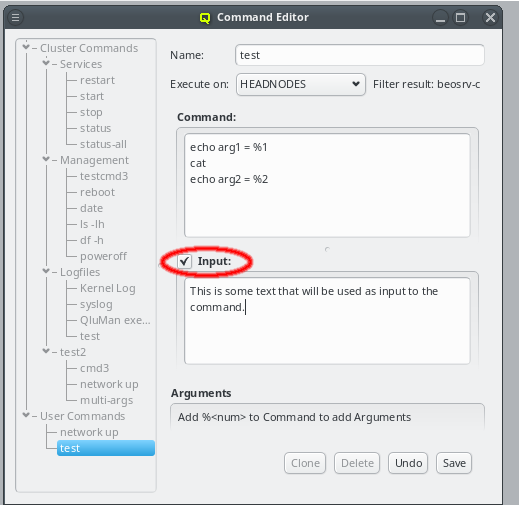

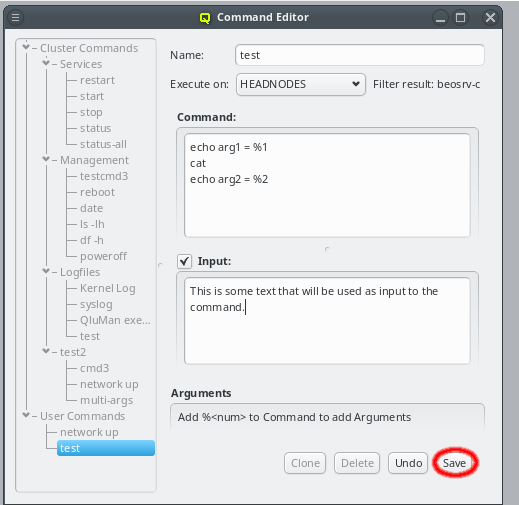

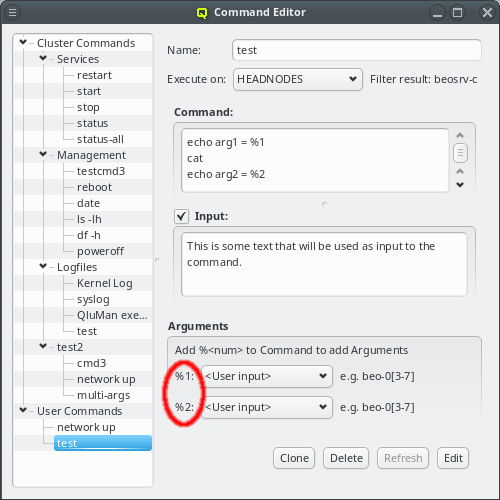

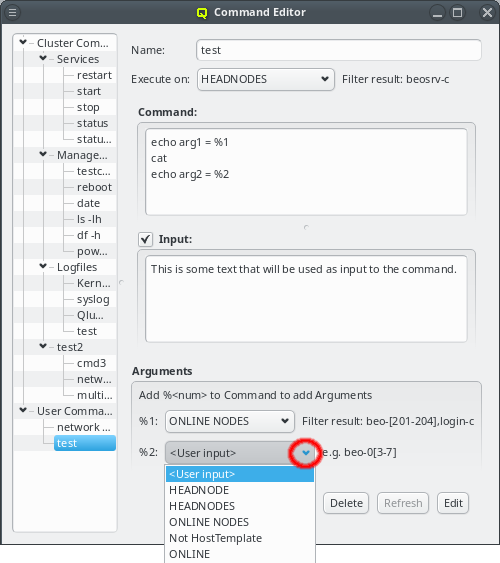

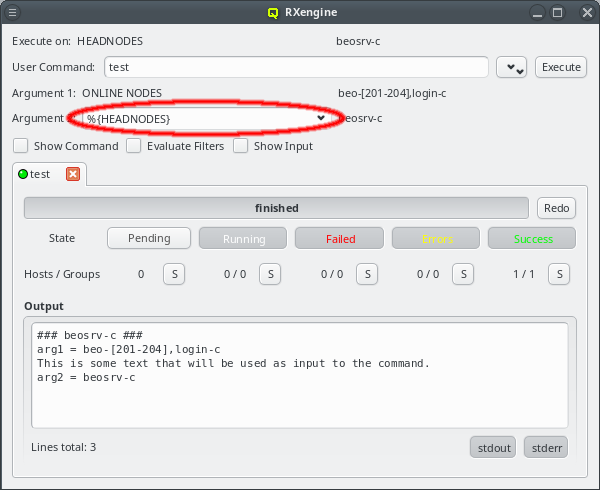

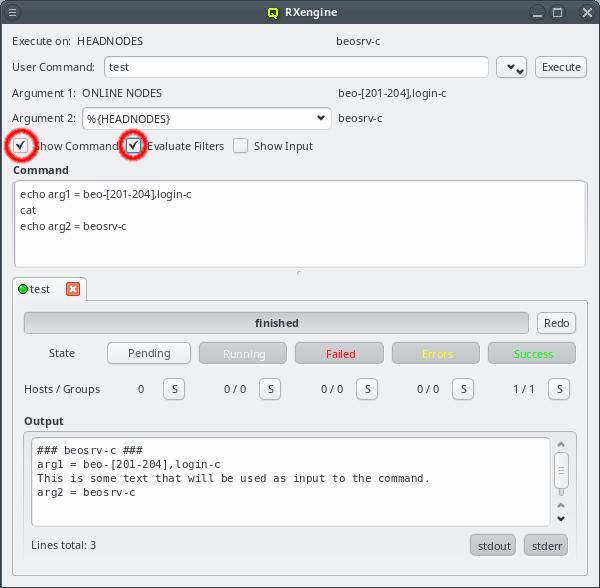

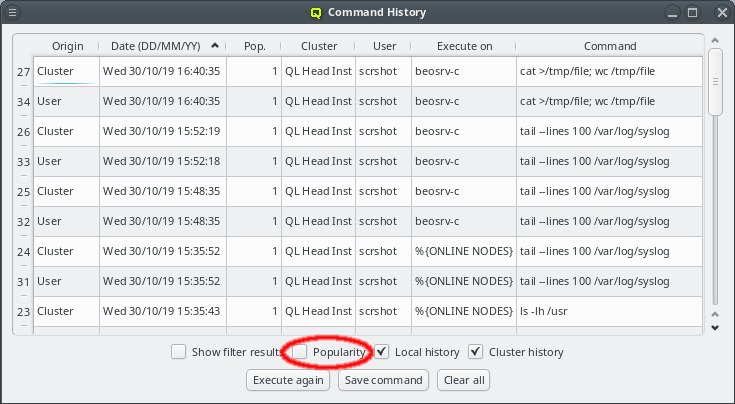

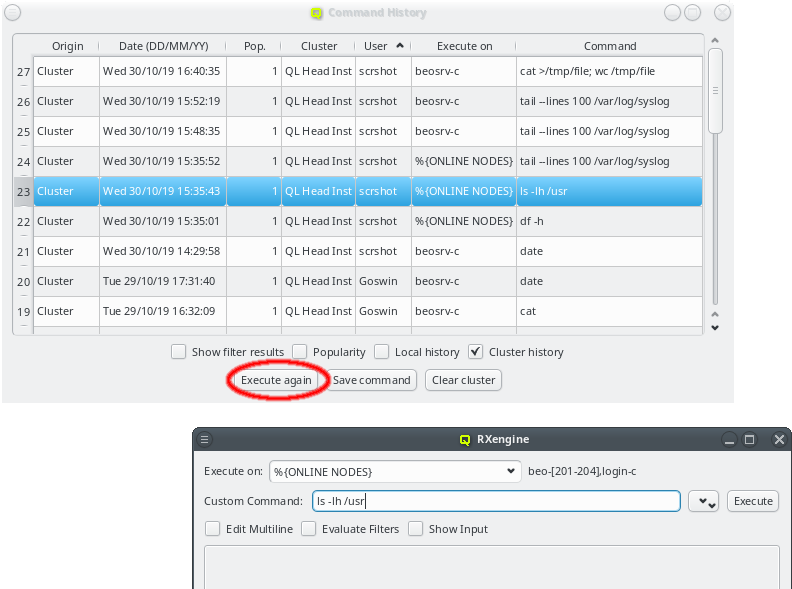

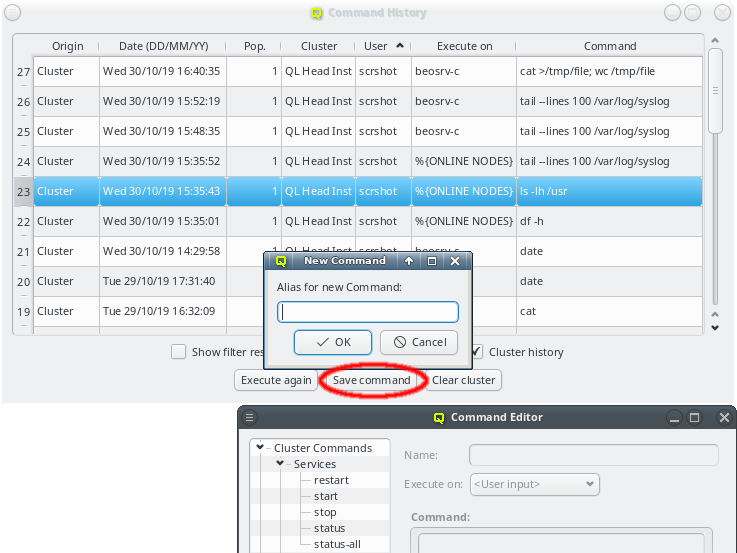

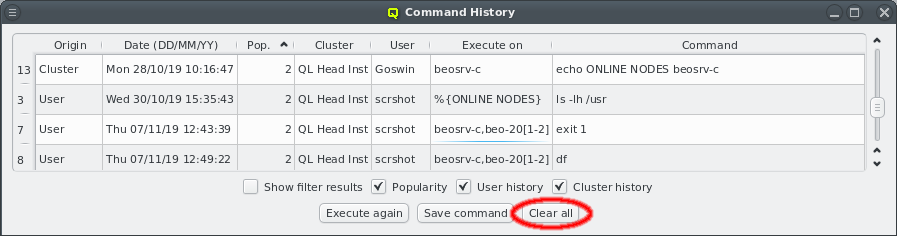

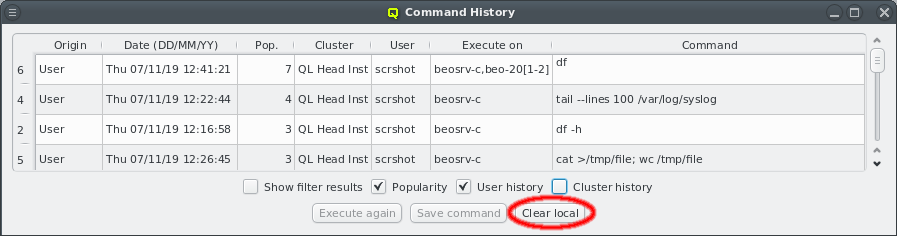

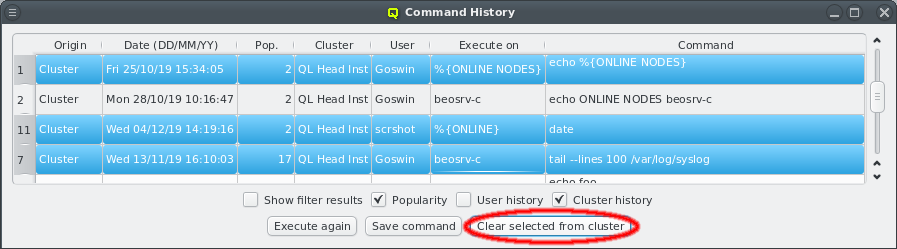

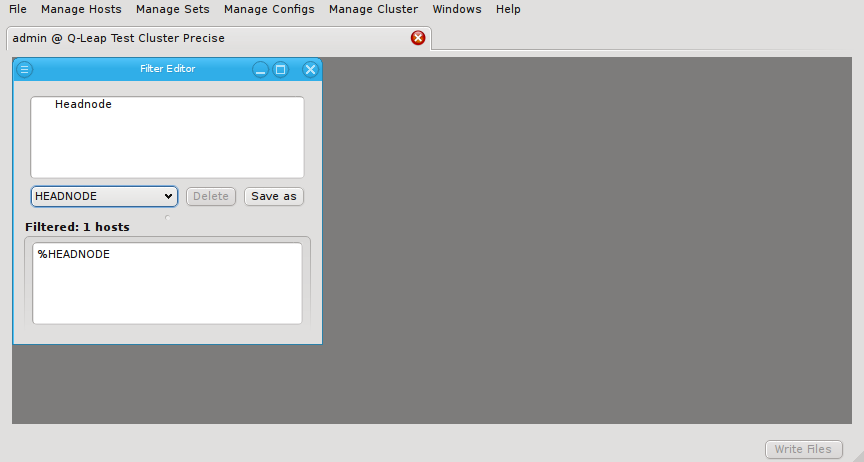

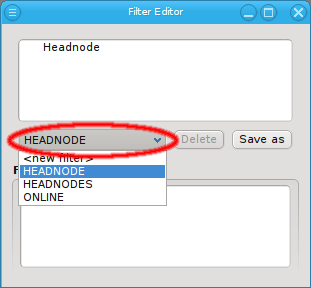

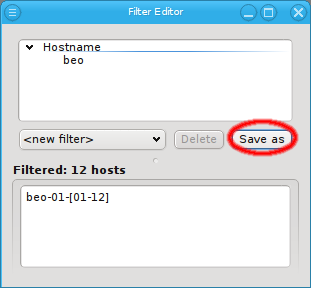

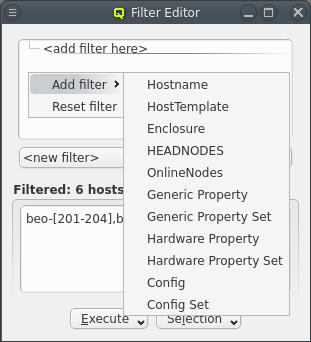

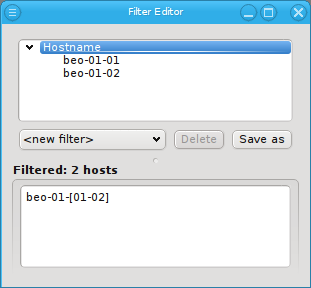

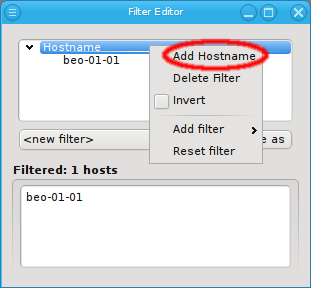

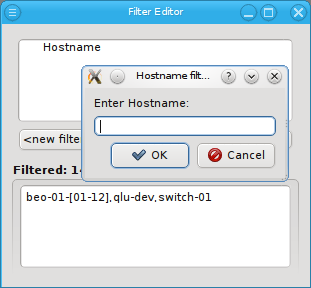

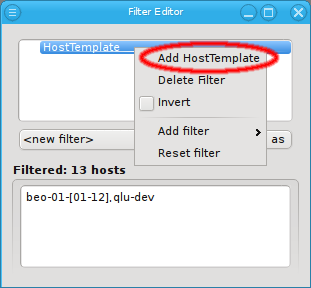

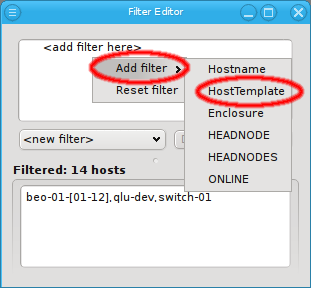

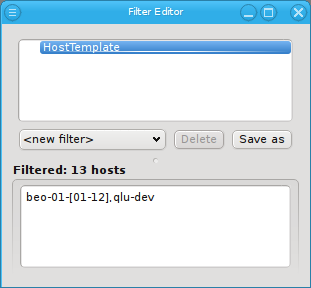

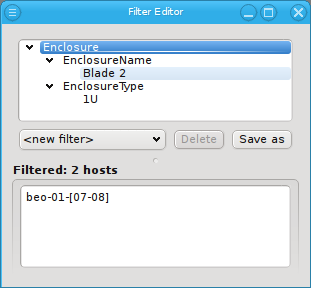

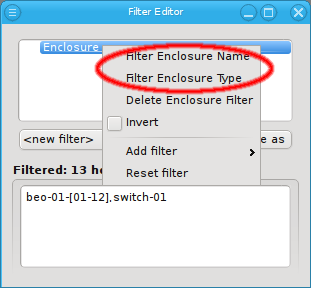

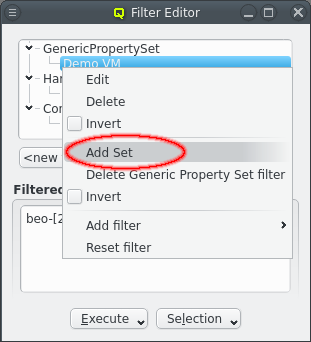

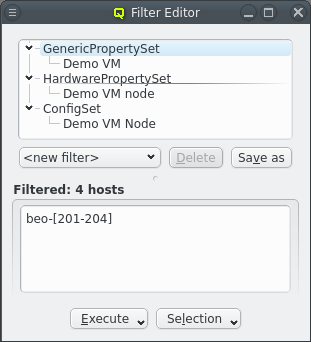

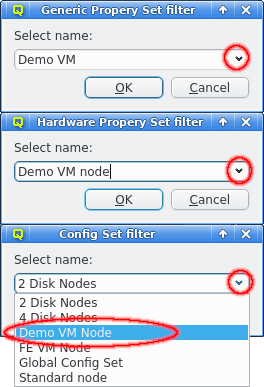

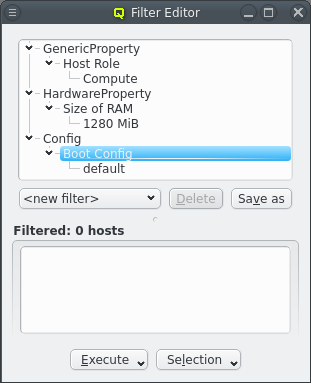

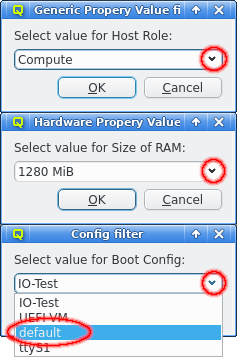

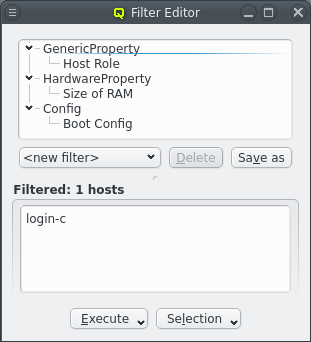

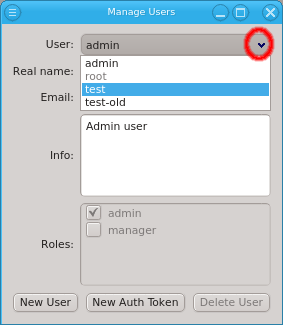

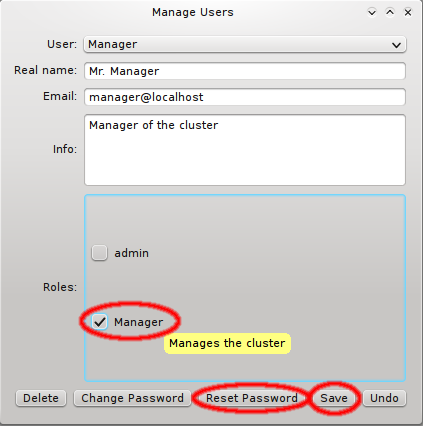

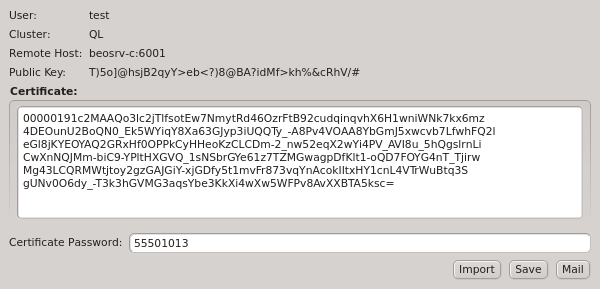

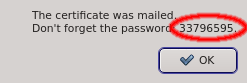

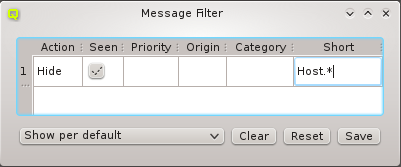

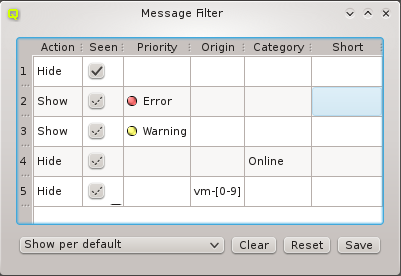

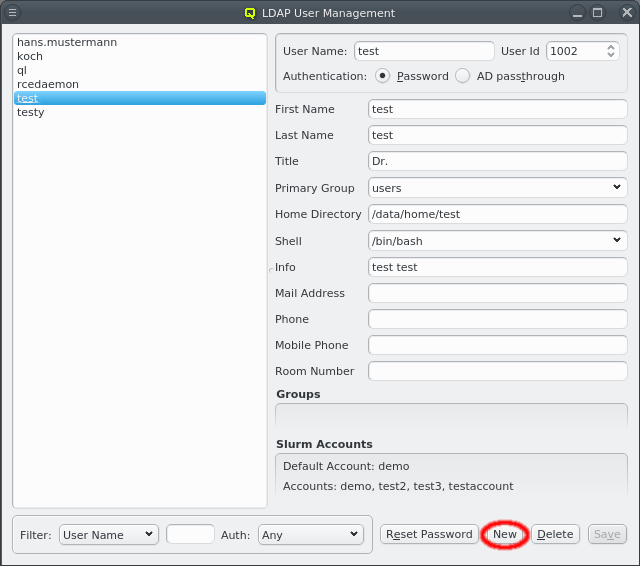

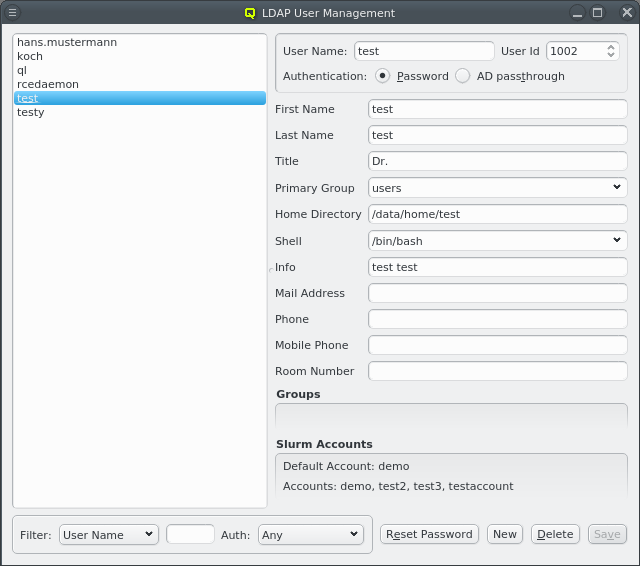

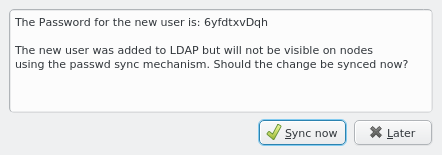

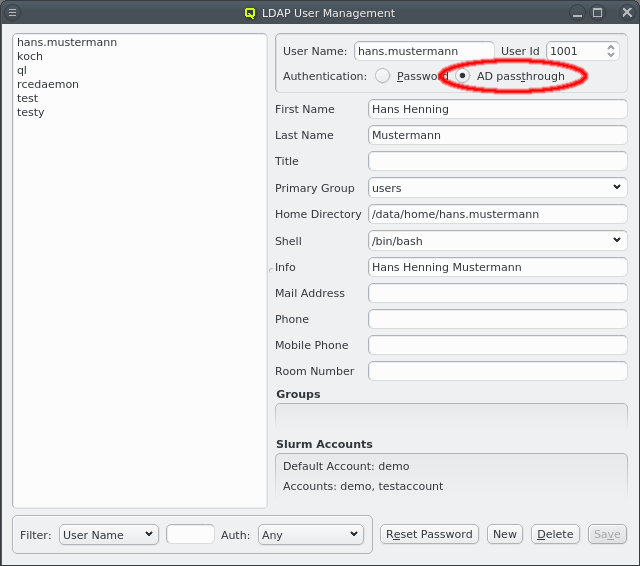

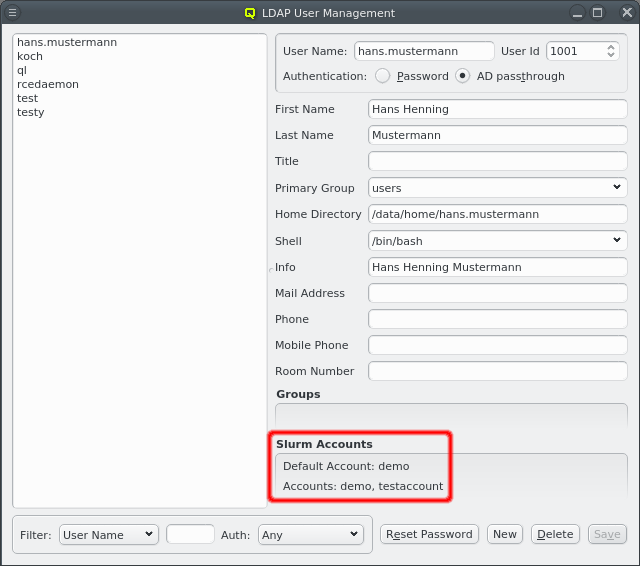

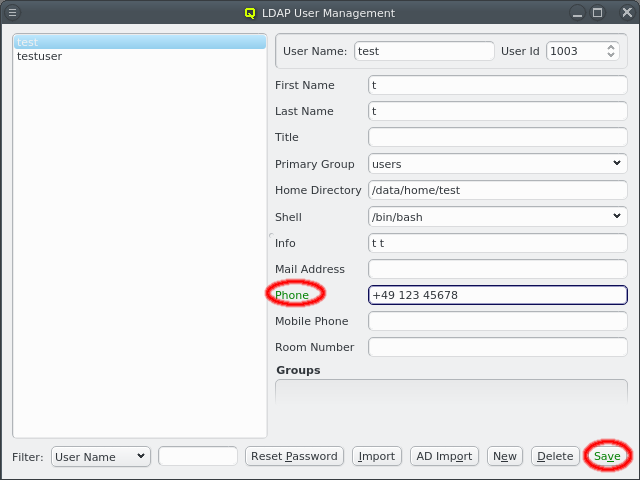

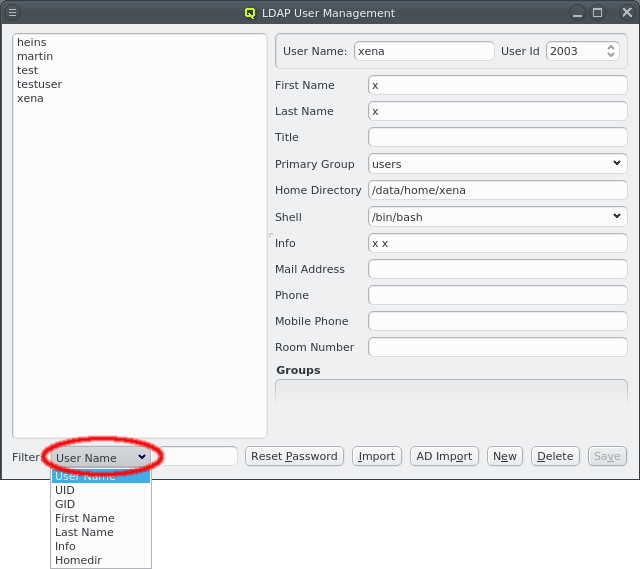

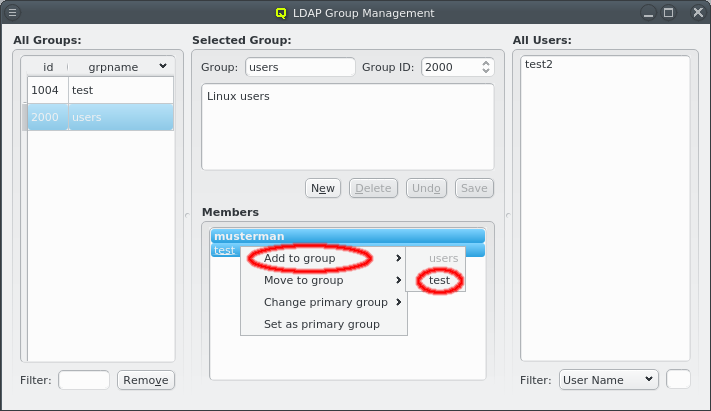

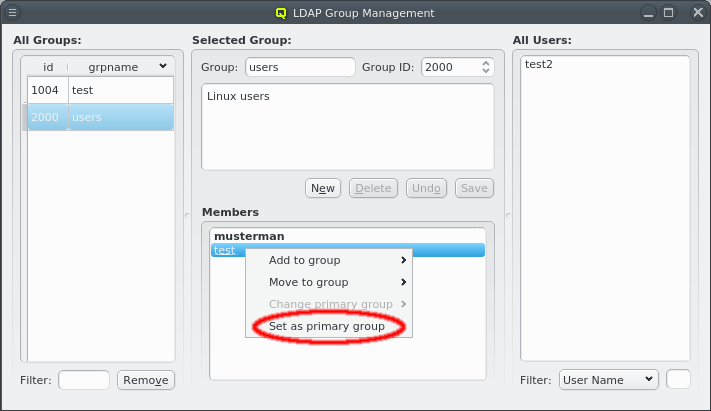

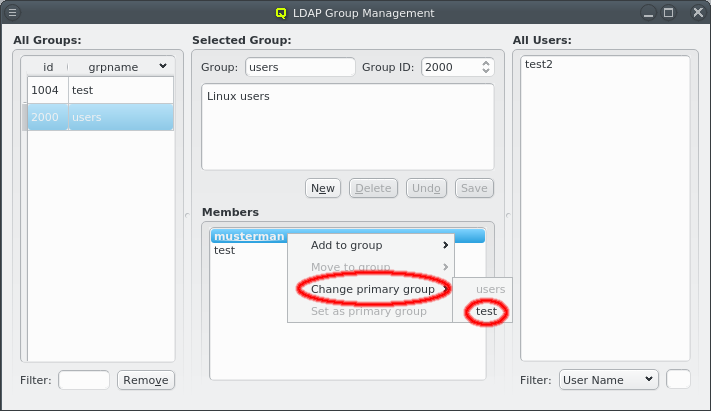

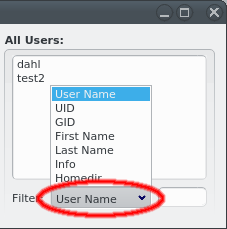

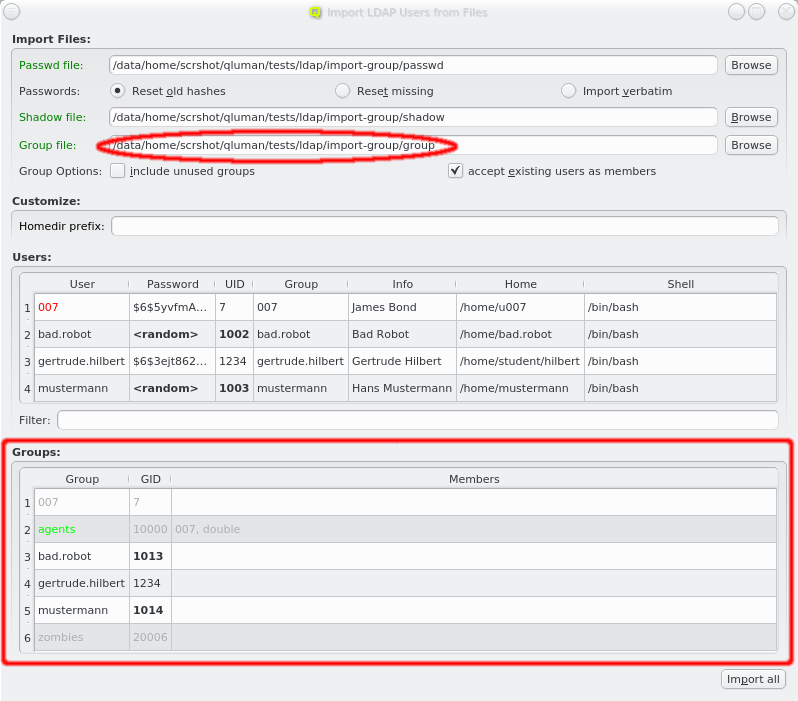

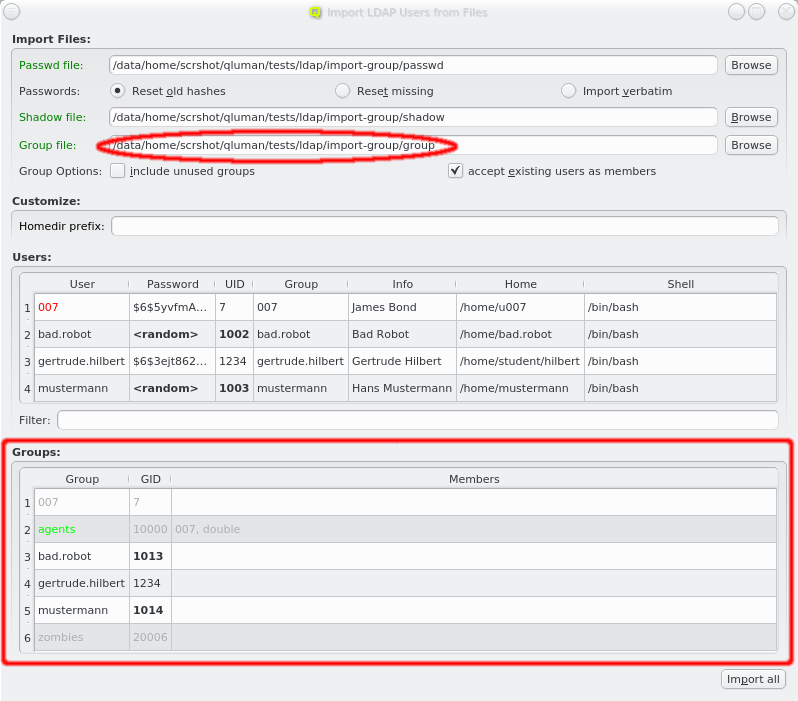

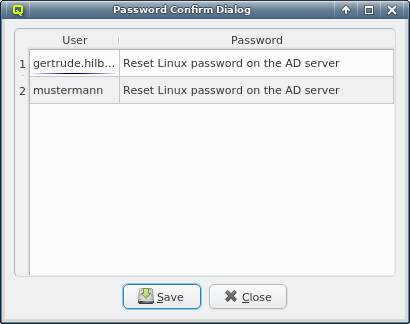

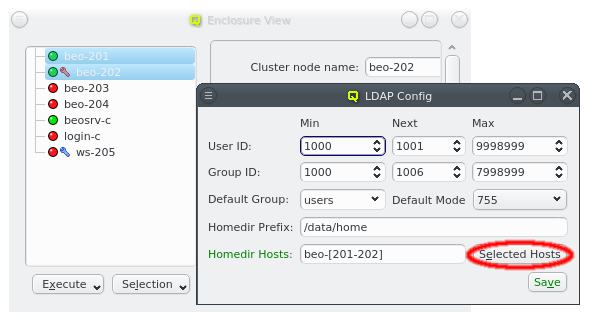

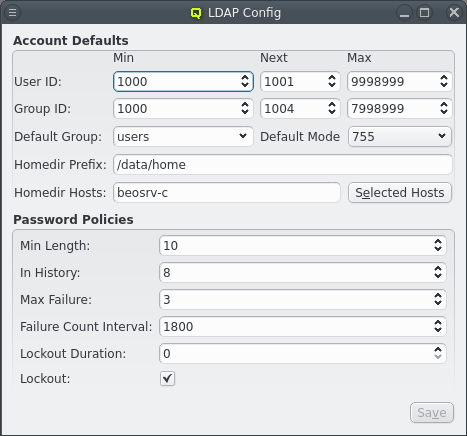

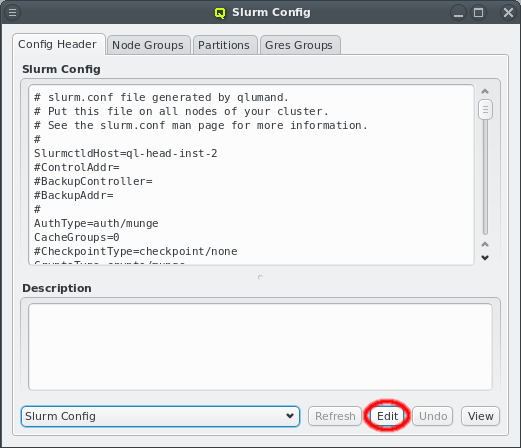

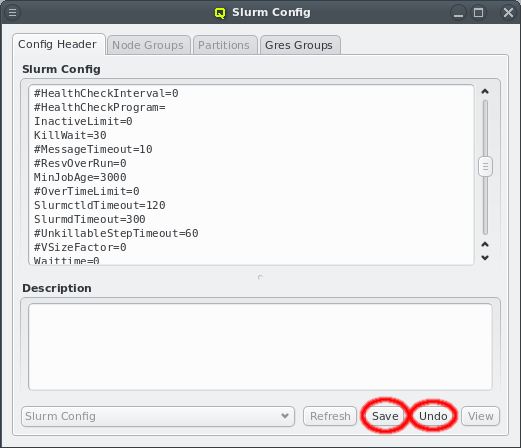

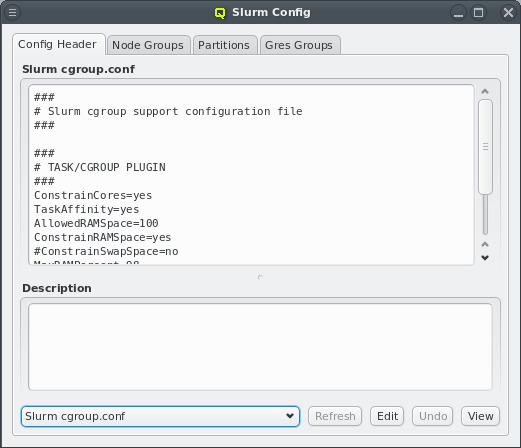

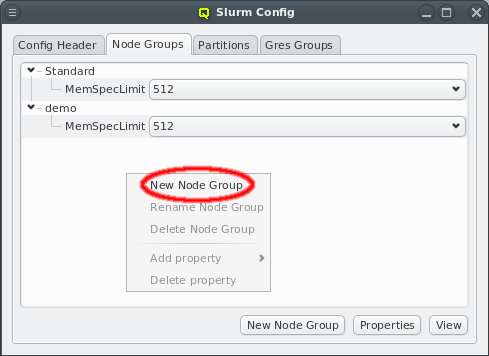

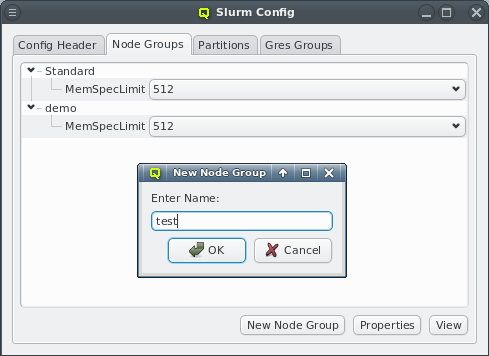

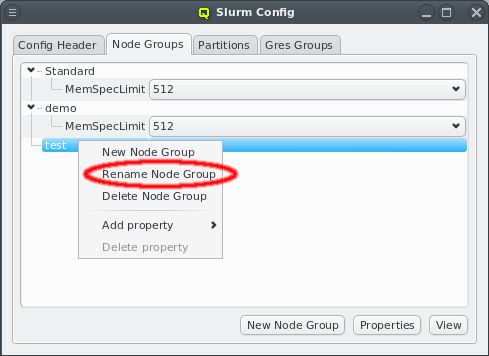

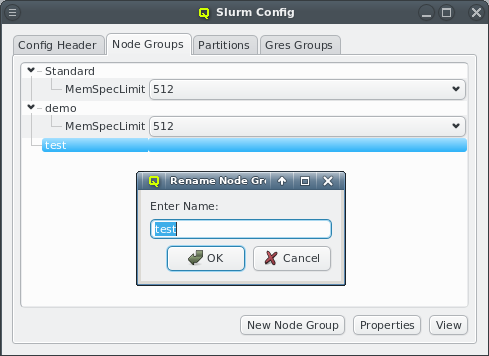

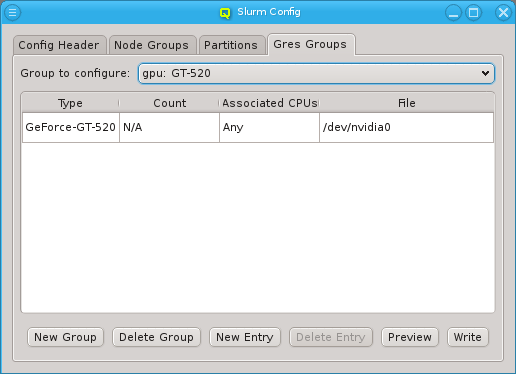

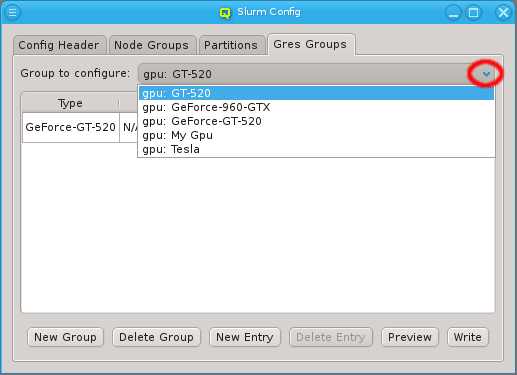

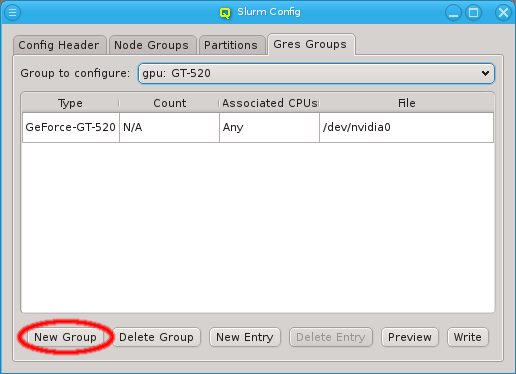

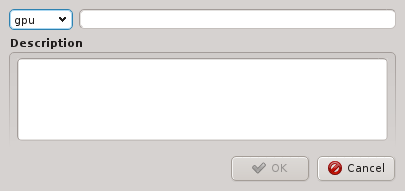

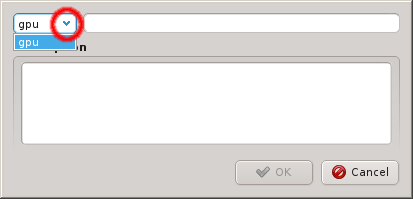

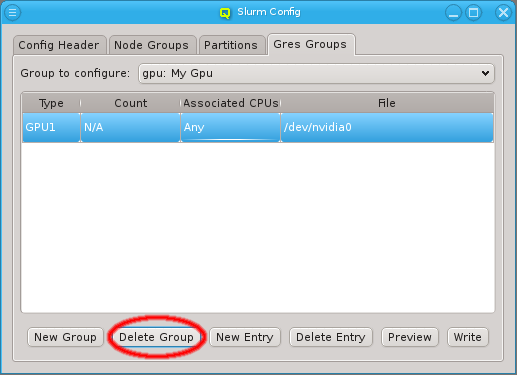

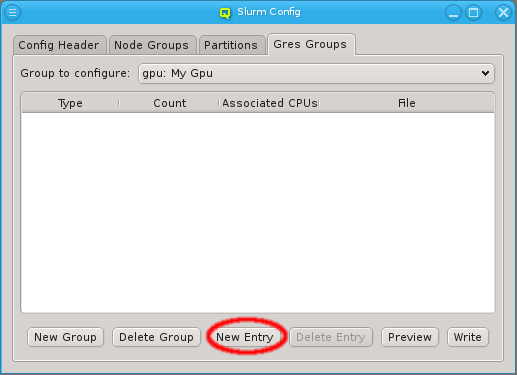

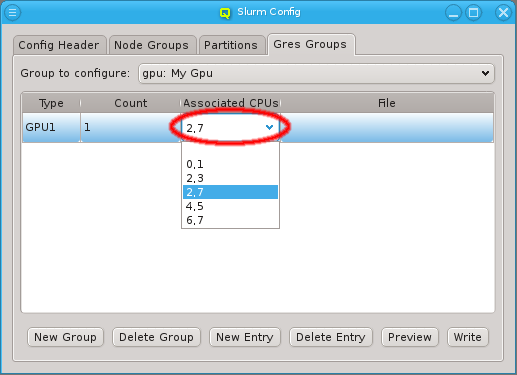

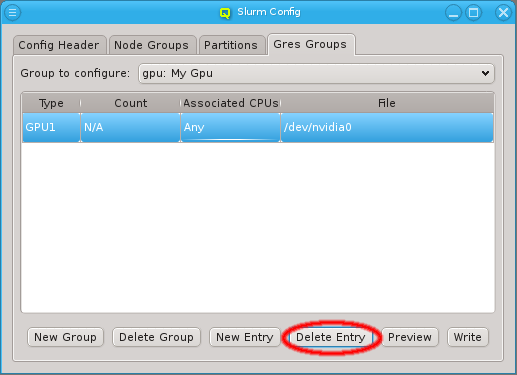

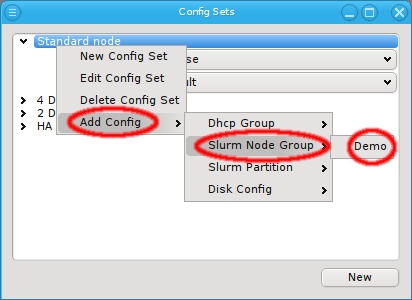

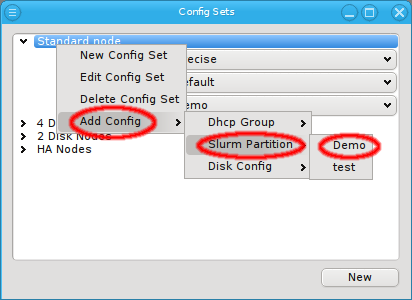

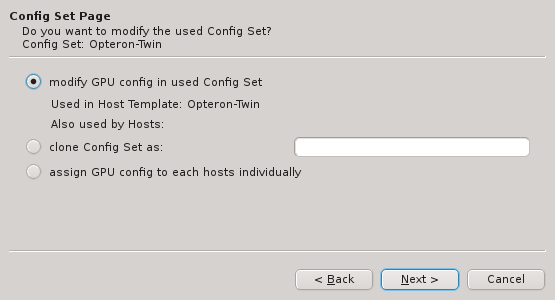

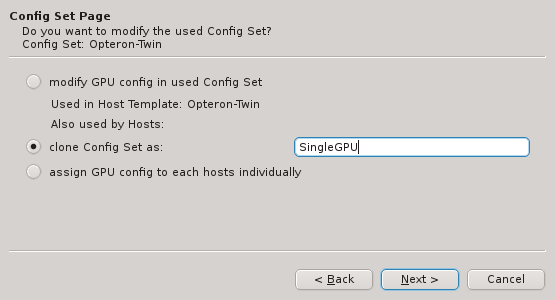

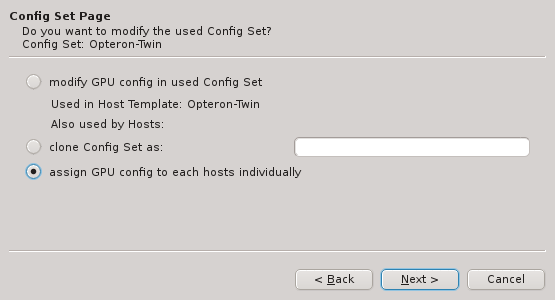

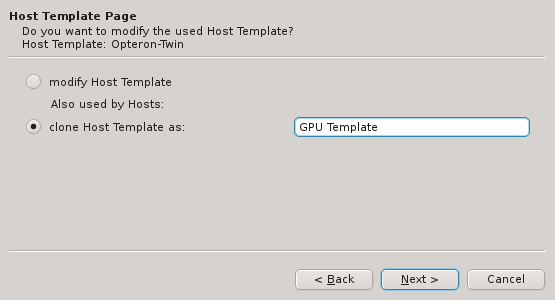

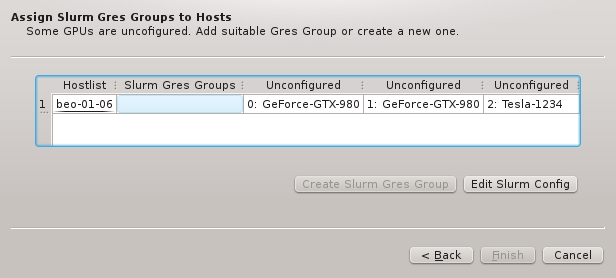

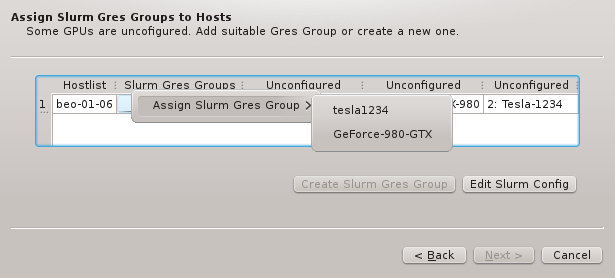

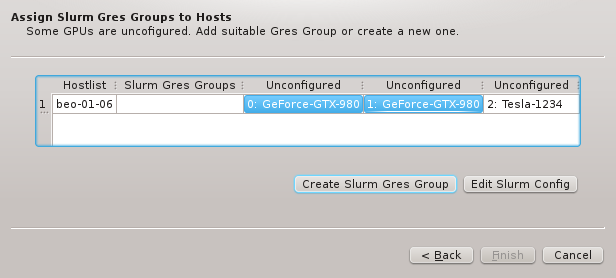

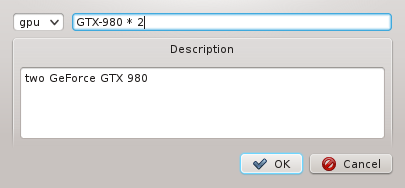

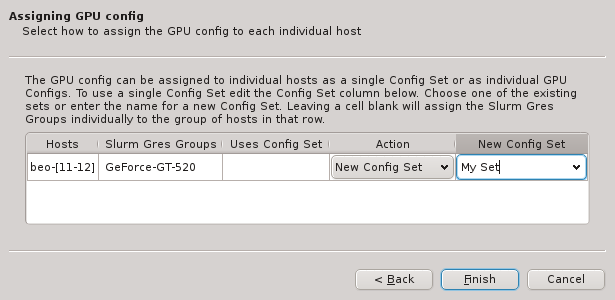

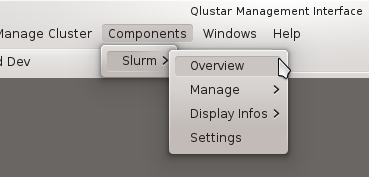

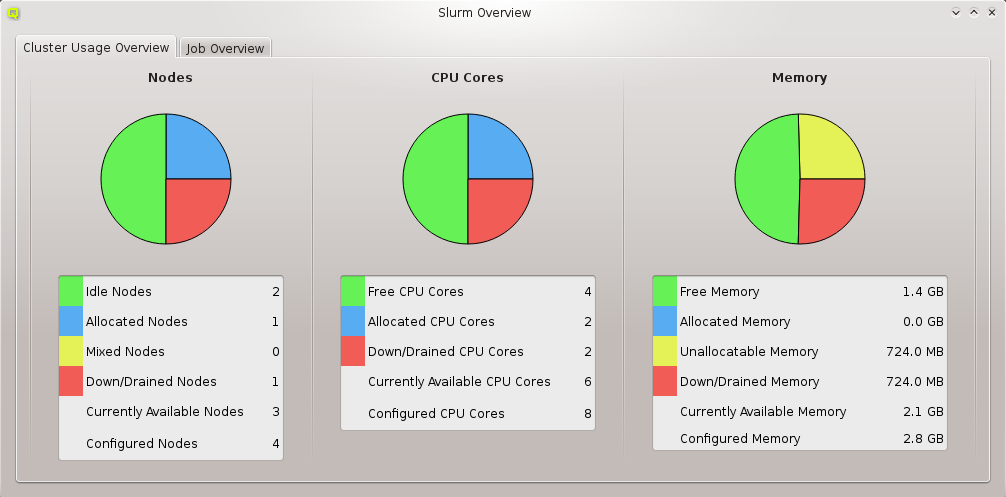

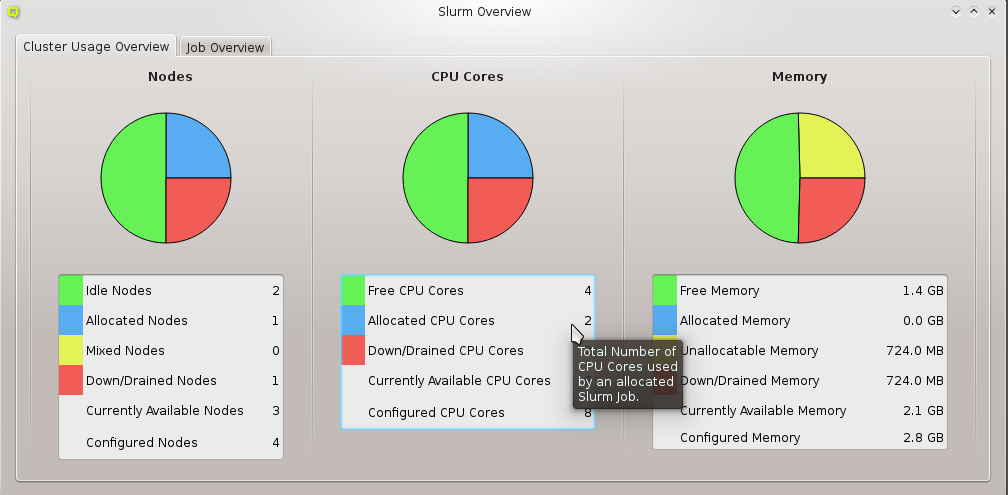

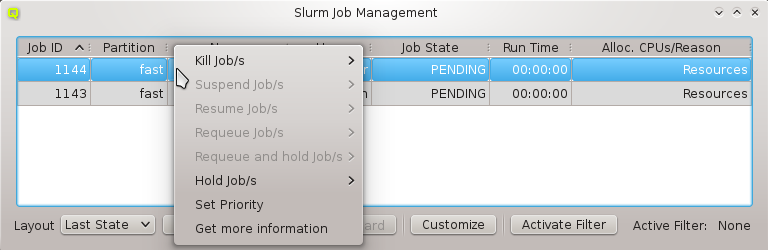

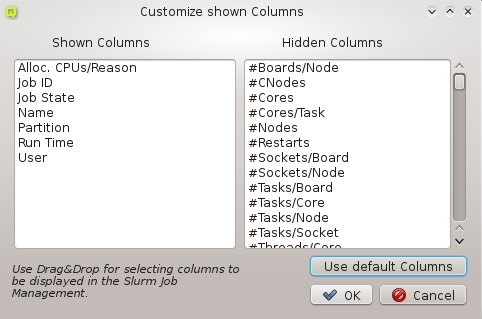

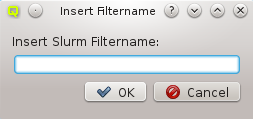

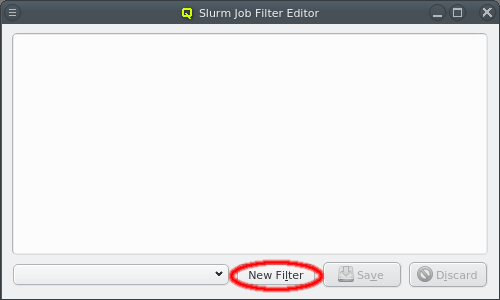

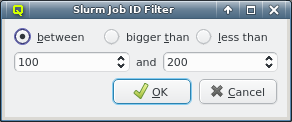

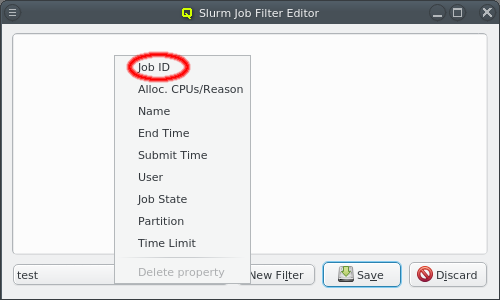

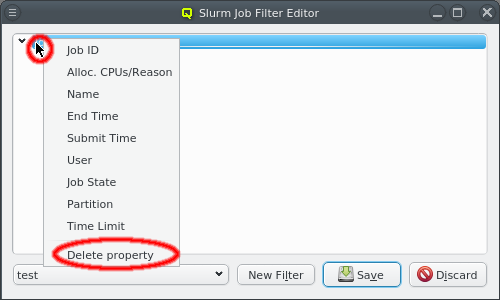

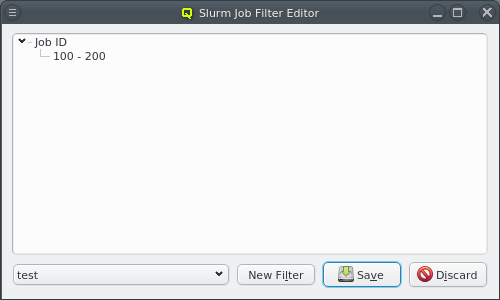

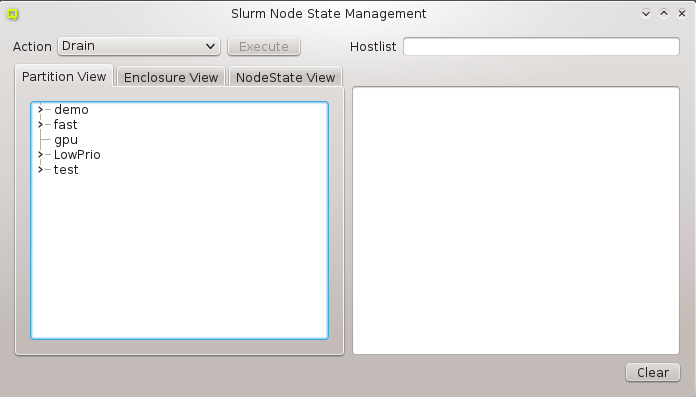

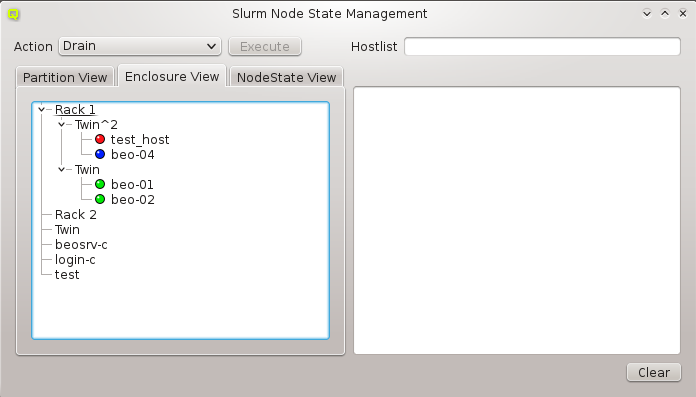

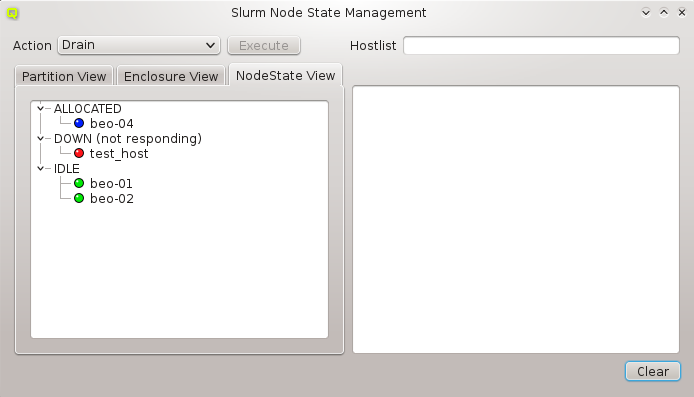

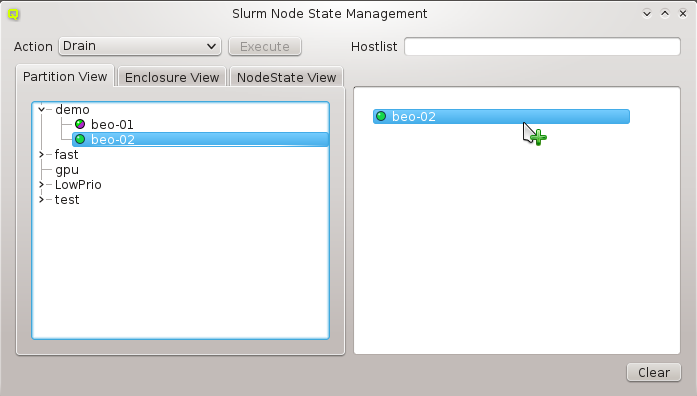

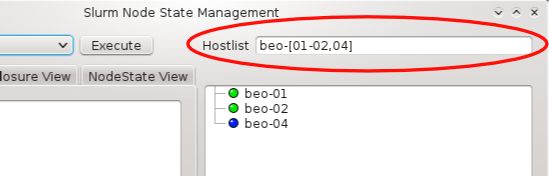

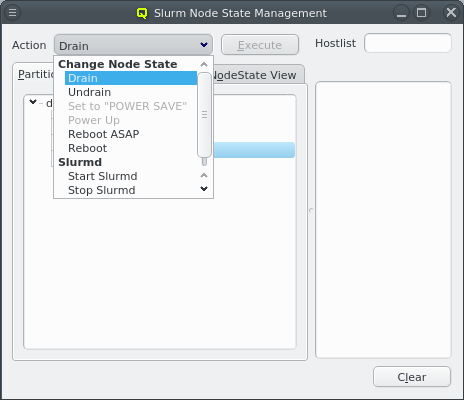

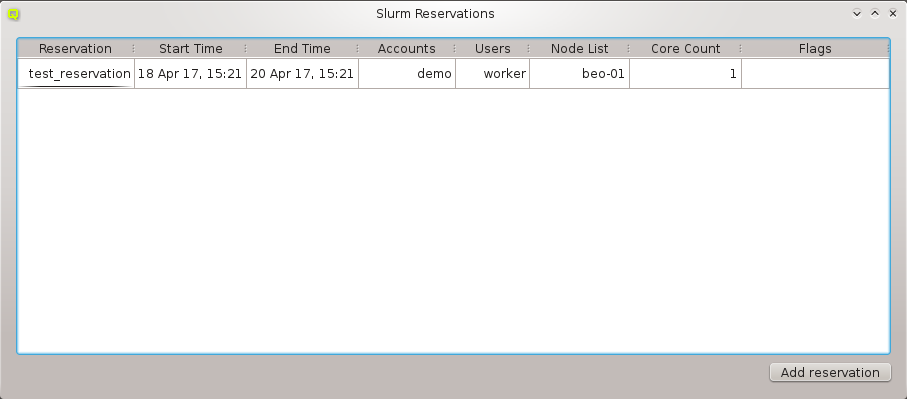

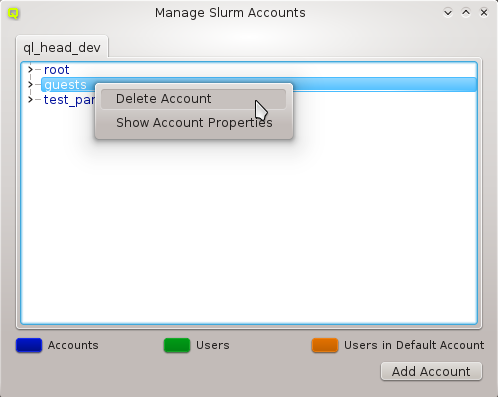

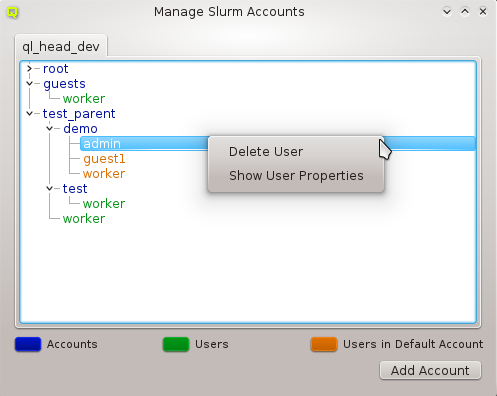

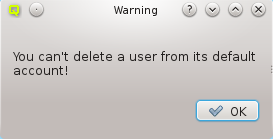

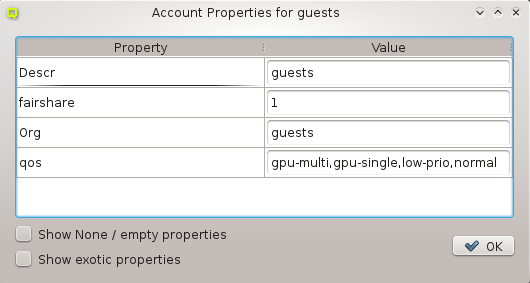

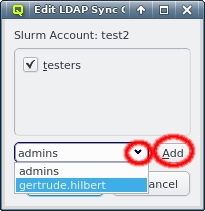

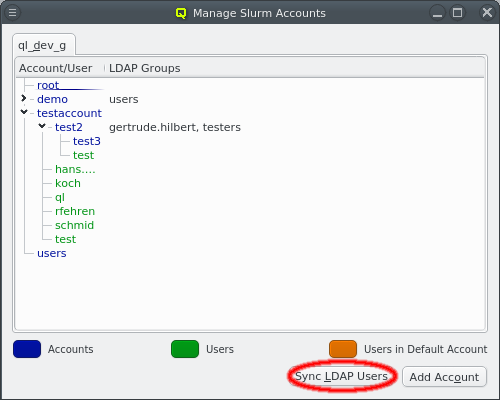

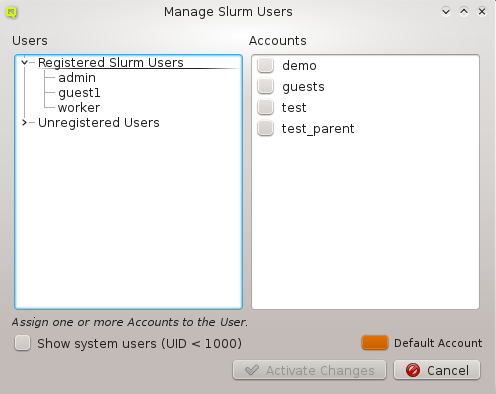

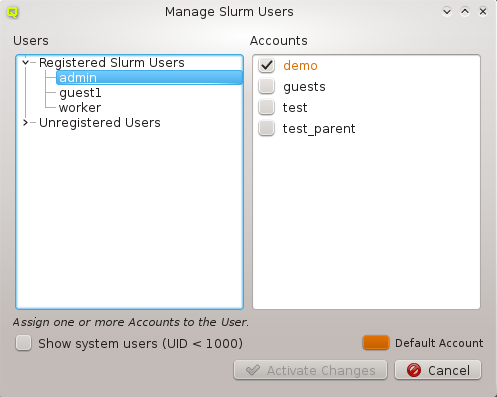

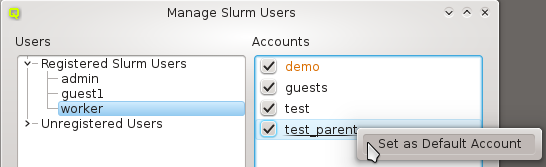

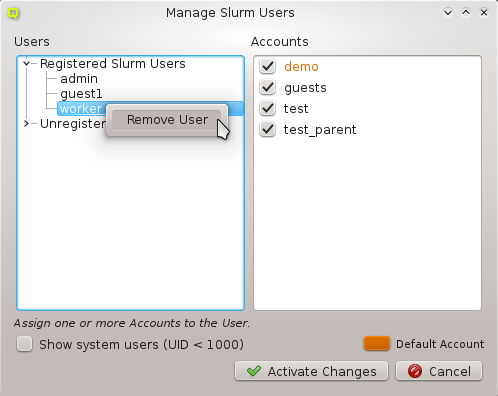

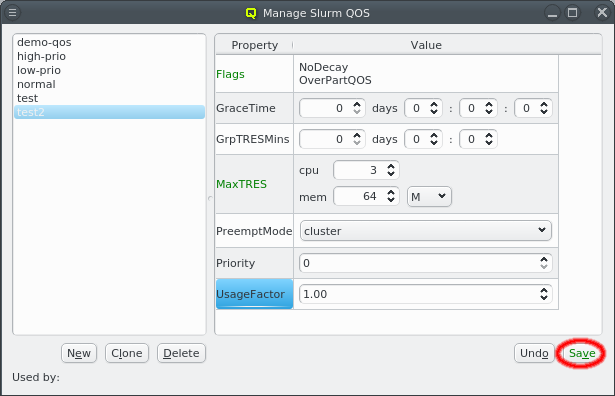

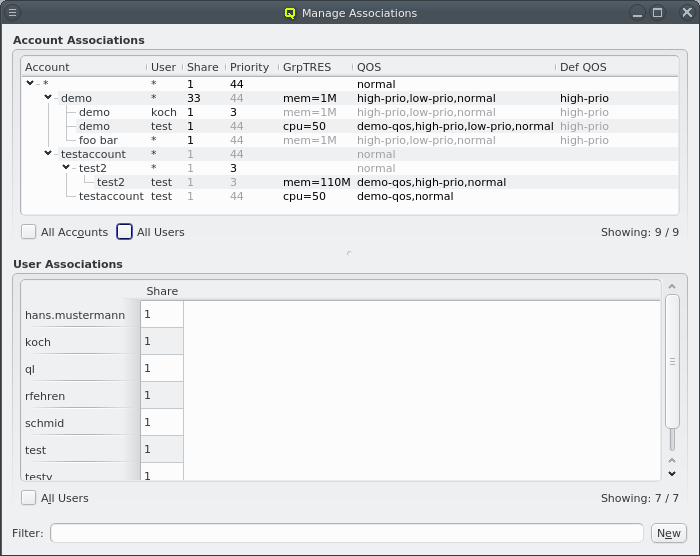

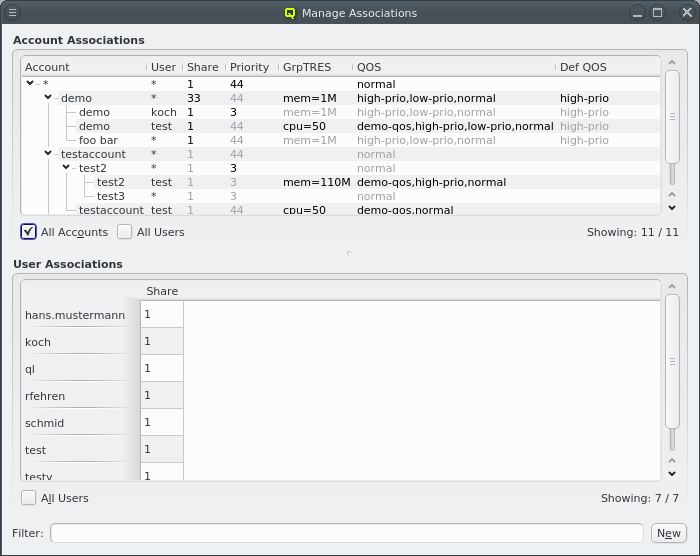

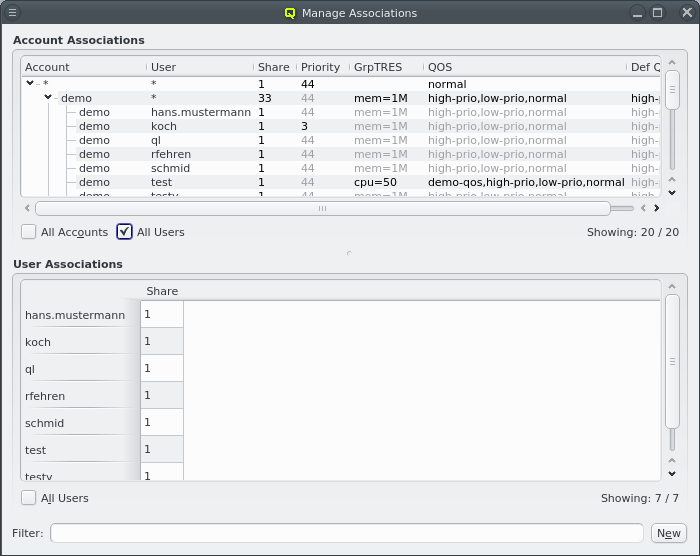

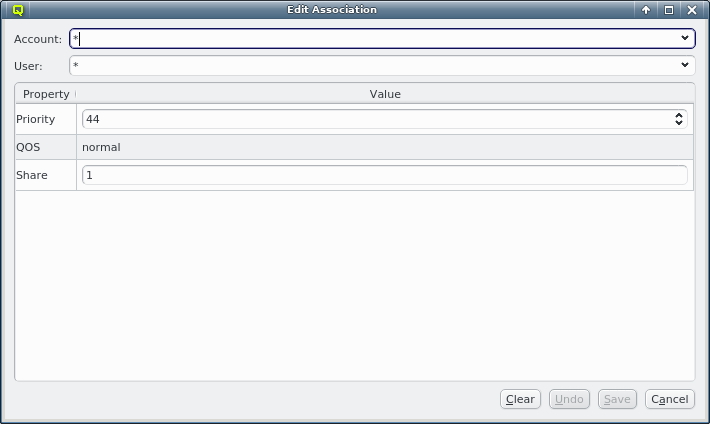

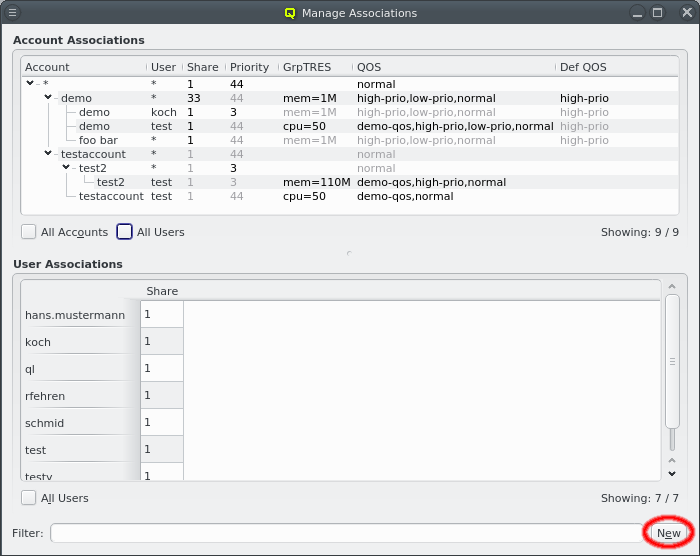

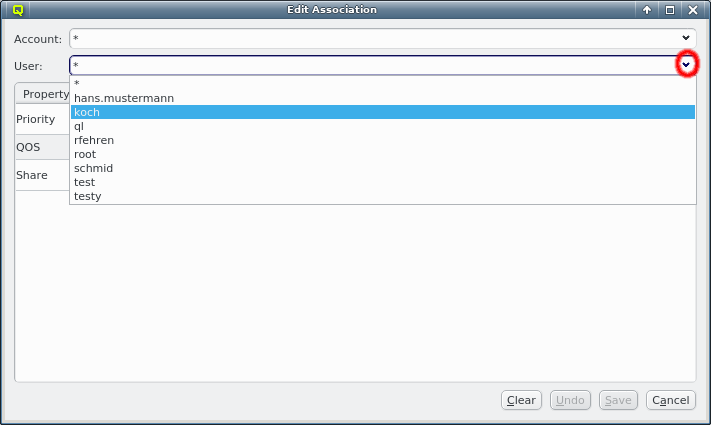

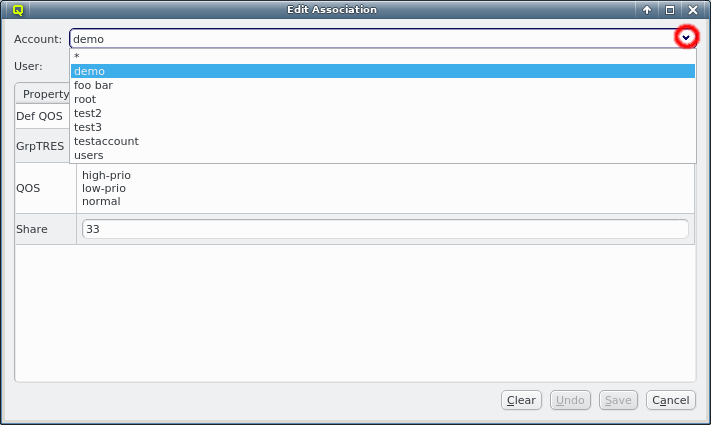

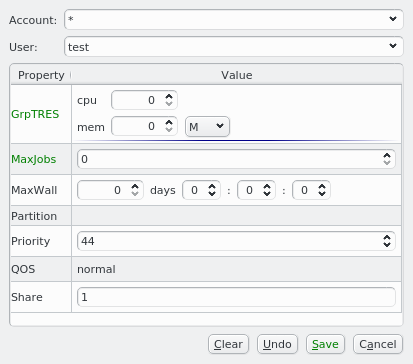

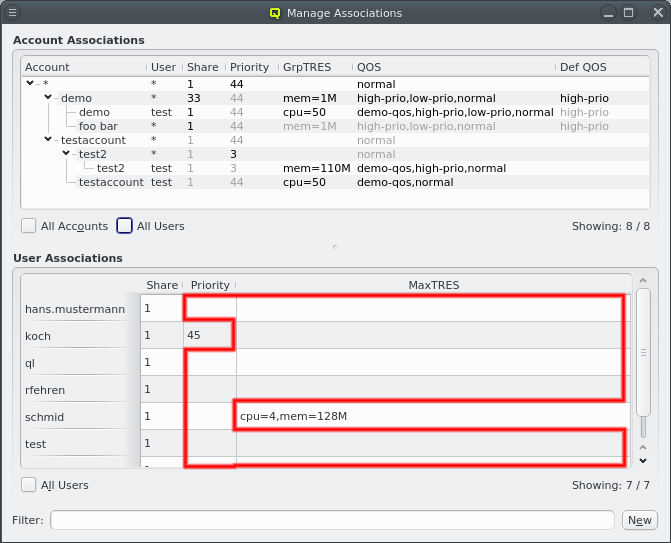

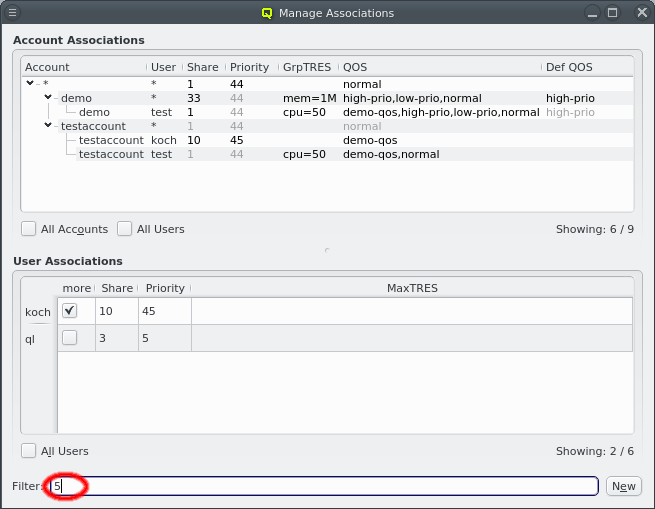

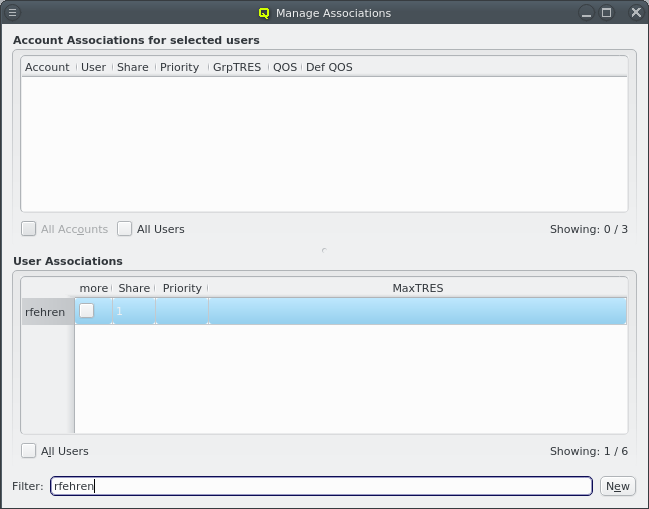

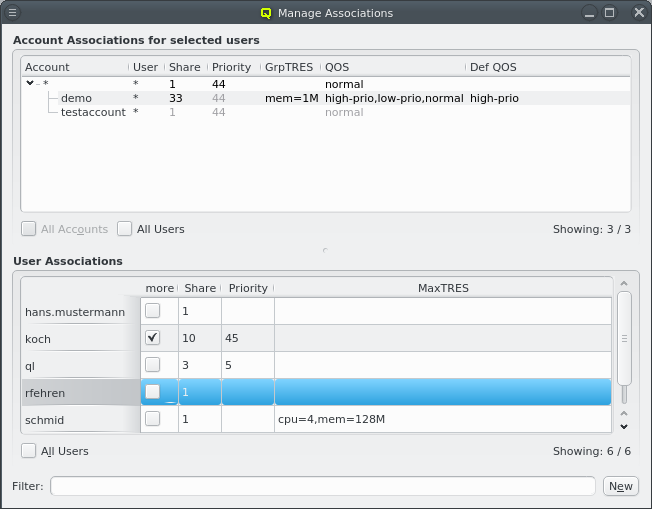

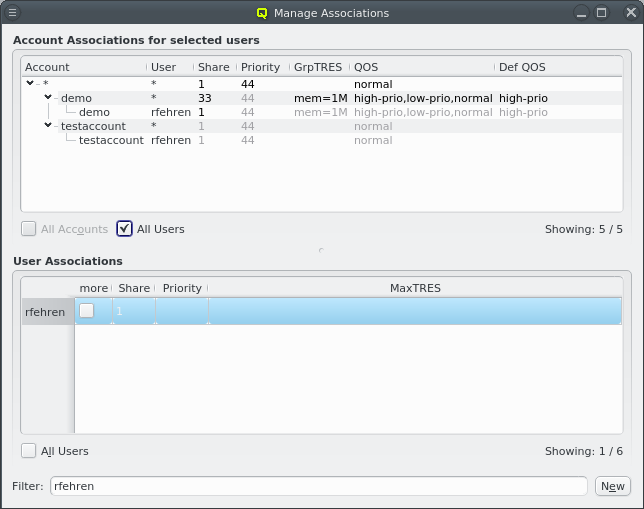

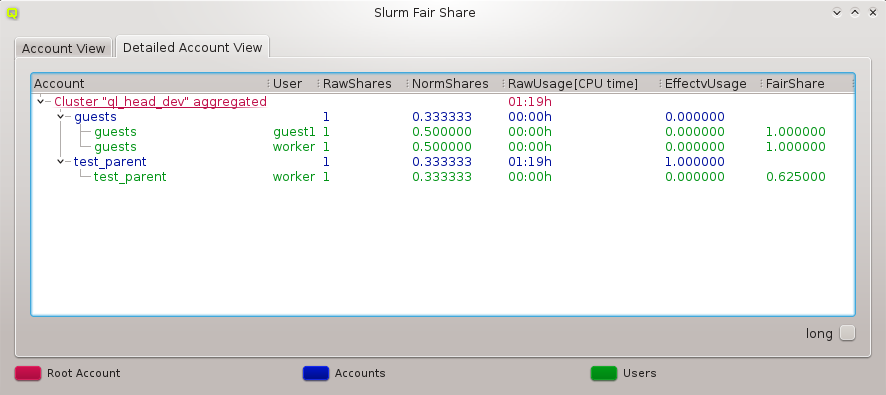

Template matches the detected hardware of at least one host perfectly. A yellow one means that